I'm trying to make a simple decision tree using C5.0 in R.

The data has 3 columns (including the target) and 14 rows. This is my 'jogging' data; the target variable is 'CLASSIFICATION'.

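| WEATHER | JOGGED_YESTERDAY | CLASSIFICATION |
|---|---|---|
| C | N |  |
| W | Y | - |
| Y | Y | - |
| C | Y | - |
| Y | N | - |
| W | Y | - |
| C | N | - |
| W | N |  |
| C | Y | - |
| W | Y |  |
| W | N |  |
| C | N |  |
| Y | N | - |
| W | Y | - |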

Or, as `dput()` output:

```r
structure(list(WEATHER = c("C", "W", "Y", "C", "Y", "W", "C",
"W", "C", "W", "W", "C", "Y", "W"), JOGGED_YESTERDAY = c("N",
"Y", "Y", "Y", "N", "Y", "N", "N", "Y", "Y", "N", "N", "N", "Y"
), CLASSIFICATION = c(" ", "-", "-", "-", "-", "-", "-", " ",
"-", " ", " ", " ", "-", "-")), class = "data.frame", row.names = c(NA,
-14L))
```

```r
jogging <- read.csv("Jogging.csv")
jogging # training data

library(C50)

jogging$CLASSIFICATION <- as.factor(jogging$CLASSIFICATION)
jogging_model <- C5.0(jogging[-3], jogging$CLASSIFICATION)

jogging_model
summary(jogging_model)
plot(jogging_model)
```

But it does not build any decision tree. I expected it to make 2 nodes (one for each of the 2 columns besides the target variable). What is wrong?

Answer:

For this answer I will use a different tree-building package, partykit, simply because I am more familiar with it. Let's do the following:

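```r
# Rows with an empty CLASSIFICATION cell have only two fields;
# fill = TRUE pads them with "" instead of stopping with an error
jogging <- read.table(header = TRUE, fill = TRUE, stringsAsFactors = TRUE,
                      text = "WEATHER JOGGED_YESTERDAY CLASSIFICATION
C N
W Y -
Y Y -
C Y -
Y N -
W Y -
C N -
W N
C Y -
W Y
W N
C N
Y N -
W Y -")
```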

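```r
library(partykit)

# minsplit, minbucket and mincriterion are passed on to ctree_control();
# these values switch off the usual safeguards against overfitting.
# The native pipe |> requires R >= 4.1.0.
ctree(CLASSIFICATION ~ WEATHER + JOGGED_YESTERDAY, data = jogging,
      minsplit = 1, minbucket = 1, mincriterion = 0) |> plot()
```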

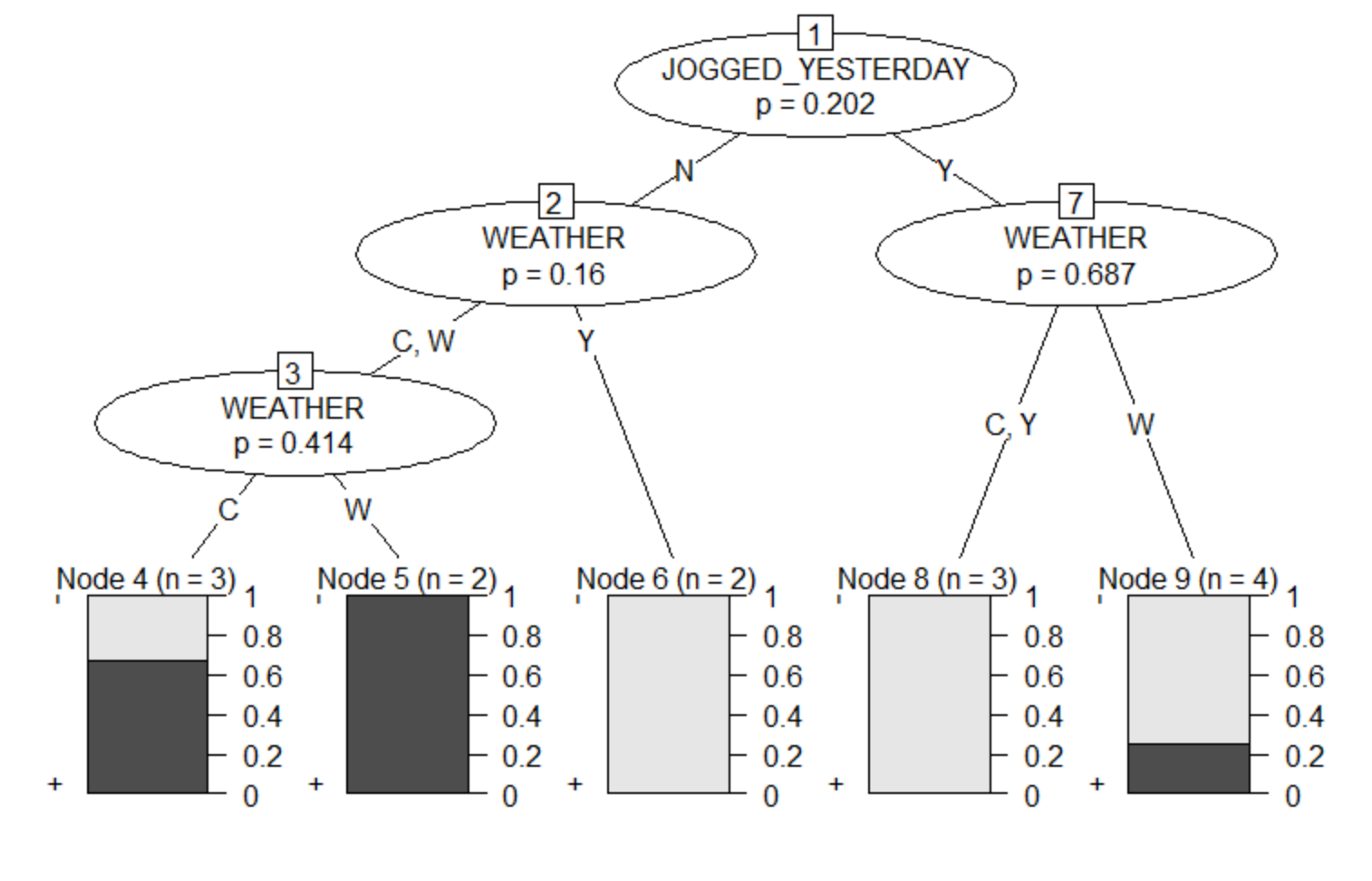

That will print the following tree:

This tree uses up to three levels of splits and still does not fit the data perfectly. The first split has a p-value of about 0.2, so there is not nearly enough data to justify even this first split, let alone the ones below it. A tree like this is very likely to overfit the data badly. That is why tree algorithms usually come with safeguards against overfitting, such as a minimum number of observations per node, a significance threshold for splits, or pruning (in C5.0 these are controlled by, e.g., `minCases` and `CF` in `C5.0Control()`). With your data, those safeguards prevent any tree from being grown. I disabled them with the `minsplit`, `minbucket` and `mincriterion` arguments in the `ctree()` call.
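To see the safeguards at work, here is a minimal sketch (assuming partykit is installed) that fits the same data once with the default `ctree_control()` settings and once with the relaxed settings used above. With the defaults (`minsplit = 20`, `minbucket = 7`, `mincriterion = 0.95`), a node needs at least 20 observations before a split is even attempted, so 14 rows leave only the root node. The data are rebuilt from the `dput()` output in the question; `ct_default` and `ct_relaxed` are just illustrative names.

```r
library(partykit)

# Data rebuilt from the dput() output in the question
jogging <- data.frame(
  WEATHER = c("C", "W", "Y", "C", "Y", "W", "C",
              "W", "C", "W", "W", "C", "Y", "W"),
  JOGGED_YESTERDAY = c("N", "Y", "Y", "Y", "N", "Y", "N",
                       "N", "Y", "Y", "N", "N", "N", "Y"),
  CLASSIFICATION = c(" ", "-", "-", "-", "-", "-", "-",
                     " ", "-", " ", " ", " ", "-", "-"),
  stringsAsFactors = TRUE
)

# Default safeguards: minsplit = 20, minbucket = 7, mincriterion = 0.95
ct_default <- ctree(CLASSIFICATION ~ WEATHER + JOGGED_YESTERDAY,
                    data = jogging)

# Safeguards switched off, as in the call above
ct_relaxed <- ctree(CLASSIFICATION ~ WEATHER + JOGGED_YESTERDAY,
                    data = jogging,
                    control = ctree_control(minsplit = 1, minbucket = 1,
                                            mincriterion = 0))

width(ct_default)  # number of terminal nodes: 1, i.e. only the root
width(ct_relaxed)  # number of terminal nodes in the overgrown tree
```

The only difference between the two fits is the control object, which makes it clear that the missing tree comes from the stopping rules rather than from a problem with the data format.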

So, in short: you do not have enough data. Predicting `-` for every row (the majority class, 9 of the 14 rows) is the most reasonable thing a classification tree can do here.
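A quick base-R check of both points, as a rough sketch: the majority class `-` covers 9 of the 14 rows, so always predicting it is right about 64% of the time on the training data, and Fisher's exact test finds no convincing association between either predictor and the class. Note that `fisher.test()` is a stand-in chosen for illustration; it is not the conditional inference test that `ctree()` uses, so its p-values will not match the one shown in the plot. The names `baseline`, `baseline_accuracy` and `p_values` are hypothetical.

```r
# Data rebuilt from the dput() output in the question
jogging <- data.frame(
  WEATHER = c("C", "W", "Y", "C", "Y", "W", "C",
              "W", "C", "W", "W", "C", "Y", "W"),
  JOGGED_YESTERDAY = c("N", "Y", "Y", "Y", "N", "Y", "N",
                       "N", "Y", "Y", "N", "N", "N", "Y"),
  CLASSIFICATION = c(" ", "-", "-", "-", "-", "-", "-",
                     " ", "-", " ", " ", " ", "-", "-"),
  stringsAsFactors = TRUE
)

# Majority-class baseline: predict the most frequent label for every row
class_counts <- table(jogging$CLASSIFICATION)
baseline <- names(which.max(class_counts))
baseline_accuracy <- mean(jogging$CLASSIFICATION == baseline)
baseline           # "-"
baseline_accuracy  # 9/14, about 0.64

# Exact test of association between each predictor and the class
p_values <- sapply(c("WEATHER", "JOGGED_YESTERDAY"), function(v) {
  fisher.test(table(jogging[[v]], jogging$CLASSIFICATION))$p.value
})
p_values  # roughly 0.51 and 0.27, far from any usual significance level
```

With p-values this large, any split a tree makes on these 14 rows is hard to distinguish from noise, which is exactly what the default stopping rules guard against.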