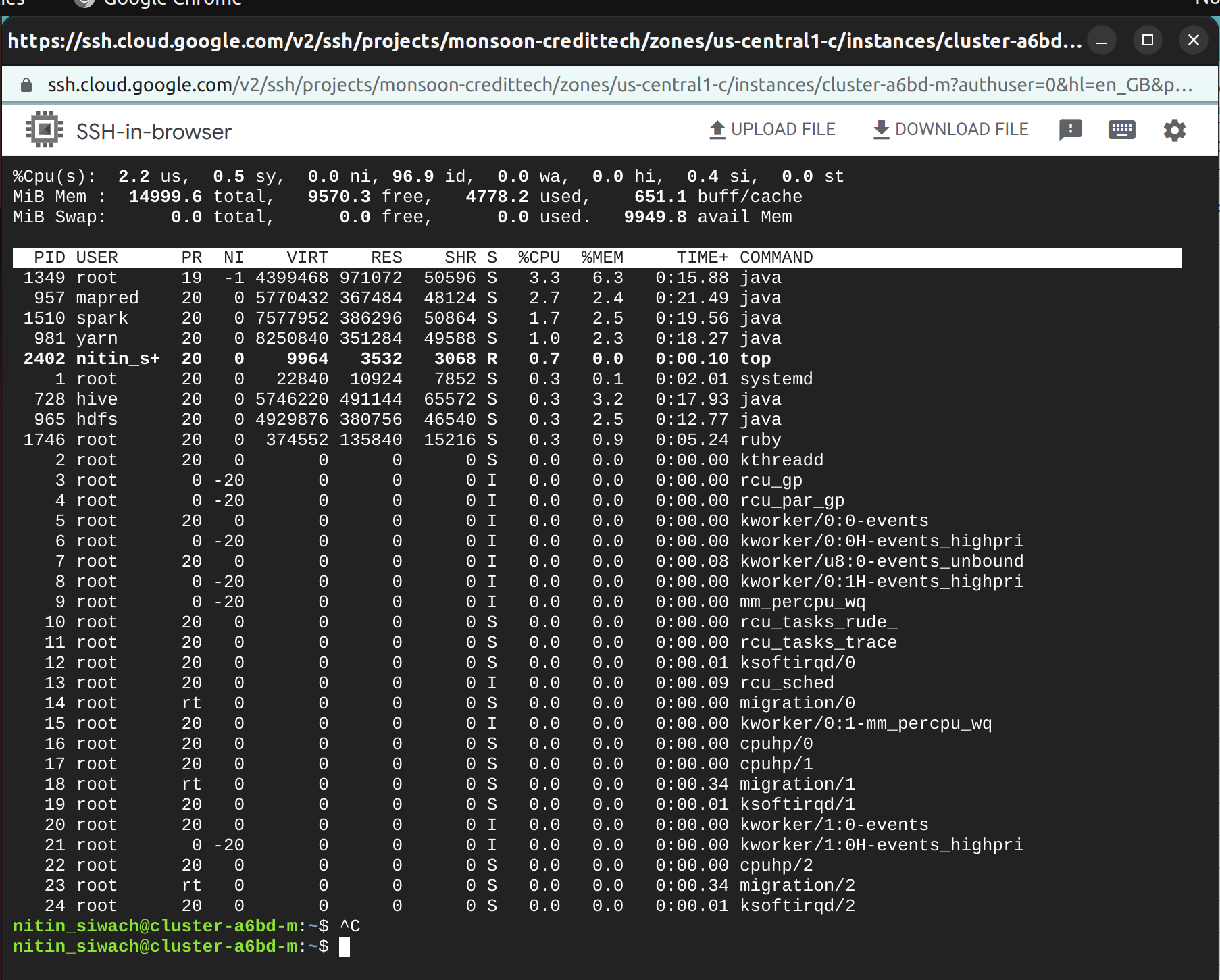

I have set up a Dataproc cluster to run my Spark jobs on. I have only just created the cluster and have not started any Spark session yet. Still, I see Spark, MapReduce, YARN, and other processes in the output of `top`. What are they? Shouldn't the Spark process start only after I have started a SparkSession with the configuration of my choice?

CodePudding user response:

These are background daemons that run and monitor the Hadoop and Spark ecosystem and wait for you to submit a job. They have to be up before you can run a Spark application, so seeing them on a freshly created cluster is normal. They are separate from your application's own processes (the driver and executors), which only start once you create a SparkSession with your configuration.
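
If you want to tell the two kinds of process apart on a node, one rough way is to look at the Java main class on each command line. The sketch below is only an illustration: the helper names (`classify`, `report`) are made up for this answer, the class list is not exhaustive, and which daemons actually run depends on the image version and on whether you are on the master or a worker.

```python
import subprocess

# Long-running cluster daemons, identified by their Java main class.
# Assumption: this is a partial list; your nodes may run more or fewer.
DAEMON_CLASSES = {
    "YARN ResourceManager": "org.apache.hadoop.yarn.server.resourcemanager.ResourceManager",
    "YARN NodeManager": "org.apache.hadoop.yarn.server.nodemanager.NodeManager",
    "HDFS NameNode": "org.apache.hadoop.hdfs.server.namenode.NameNode",
    "HDFS DataNode": "org.apache.hadoop.hdfs.server.datanode.DataNode",
    "MapReduce JobHistoryServer": "org.apache.hadoop.mapreduce.v2.hs.JobHistoryServer",
    "Spark HistoryServer": "org.apache.spark.deploy.history.HistoryServer",
}

# Processes that belong to a submitted Spark application.
APP_CLASSES = {
    "Spark driver (spark-submit)": "org.apache.spark.deploy.SparkSubmit",
    # Substring also matches YarnCoarseGrainedExecutorBackend.
    "Spark executor": "CoarseGrainedExecutorBackend",
}


def classify(cmdline):
    """Return ("daemon" | "application", name), or None if unrecognized."""
    for name, cls in DAEMON_CLASSES.items():
        if cls in cmdline:
            return ("daemon", name)
    for name, cls in APP_CLASSES.items():
        if cls in cmdline:
            return ("application", name)
    return None


def report():
    """Print the recognized Hadoop/Spark processes on this machine."""
    # `ps -eo args` prints the full command line of every process (Linux).
    out = subprocess.run(
        ["ps", "-eo", "args"], capture_output=True, text=True, check=True
    ).stdout
    for line in out.splitlines():
        kind = classify(line)
        if kind is not None:
            print(f"{kind[0]:<12} {kind[1]}")
```

Calling `report()` on a node before submitting anything should list only `daemon` entries. Entries tagged `application` would be expected to show up only while a job or session is running.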