I have a serious issue with finding all possible paths in my csv file that looks like this :

| Source | Target | Source_repo | Target_repo |

|---|---|---|---|

| SOURCE1 | Target2 | repo-1 | repo-2 |

| SOURCE5 | Target3 | repo-5 | repo-3 |

| SOURCE8 | Target5 | repo-8 | repo-5 |

There a large amount of lines in the datasets, more than 5000 lines. I want to generate all possible paths like this in and return a list (Target5 is equal to SOURCE5):

- SOURCE1 Target2

- SOURCE8 Target5 Target3

I want to implement this solution without using recursive functions, since causes problems (maximum recursion depth exceeded).

This is the current code example :

def attach_co_changing_components(base_component):

co_changes = df_depends_on.loc[df_depends_on["Source_repo"] ==

base_component, "Target_repo"].values

result = {base_component: list(co_changes)}

return result

def dfs(data, path, paths):

datum = path[-1]

if datum in data:

for val in data[datum]:

new_path = path [val]

paths = dfs(data, new_path, paths)

else:

paths = [path]

return paths

def enumerate_paths(graph, nodes=[]):

nodes = graph.keys()

all_paths = []

for node in nodes:

node_paths = dfs(graph, [node], [])

all_paths = node_paths

return all_paths

if __name__ == "__main__":

df = pd.read_csv("clean_openstack_evolution.csv")

co_changing_components = df[["Source"]].copy()

co_changing_components = co_changing_components.drop_duplicates(

).reset_index(drop=True)

co_changing_components = co_changing_components["Source"].map(

attach_co_changing_components)

co_changing_components = co_changing_components.rename("Path")

co_changing_components = co_changing_components.reset_index(drop=True)

newdict = {}

for k, v in [(key, d[key]) for d in co_changing_components for key in d]:

if k not in newdict: newdict[k] = v

else: newdict[k].append(v)

graph_keys = df_depends_on["Source_repo"].drop_duplicates().to_dict(

).values()

graph_keys = {*graph_keys}

graph_keys = set([

k for k in graph_keys

if len(df_depends_on[df_depends_on["Target"] == k]) > 0

])

result = enumerate_paths(new_dict)

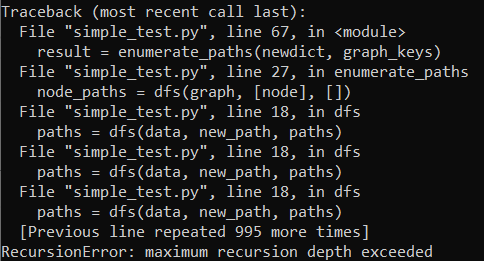

Here is the output after executing the preceding code :

Here is the data link Google drive

I tried to solve the problem using recursive function, but the code failed with the problem of depth exceeded. I aim to solve it without recursive functions.

CodePudding user response:

I'm not sure if you want all paths or paths specifically from node to another node. Either way this looks like a job for networkx.

Setup (nx.from_pandas_edgelist)

import networkx as nx

import pandas as pd

df = pd.read_csv("...")

graph = nx.from_pandas_edgelist(df, create_using=nx.DiGraph)

All paths (nx.all_simple_paths)

from itertools import chain, product, starmap

from functools import partial

roots = (node for node, d in graph.in_degree if d == 0)

leaves = (node for node, d in graph.out_degree if d == 0)

all_paths = partial(nx.all_simple_paths, graph)

paths = list(chain.from_iterable(starmap(all_paths, product(roots, leaves))))

From one node to another

source_node = "some_node_in_graph"

target_node = "some_other_node_in_graph"

list(nx.all_simple_paths(graph, source=source_node, target=target_node))