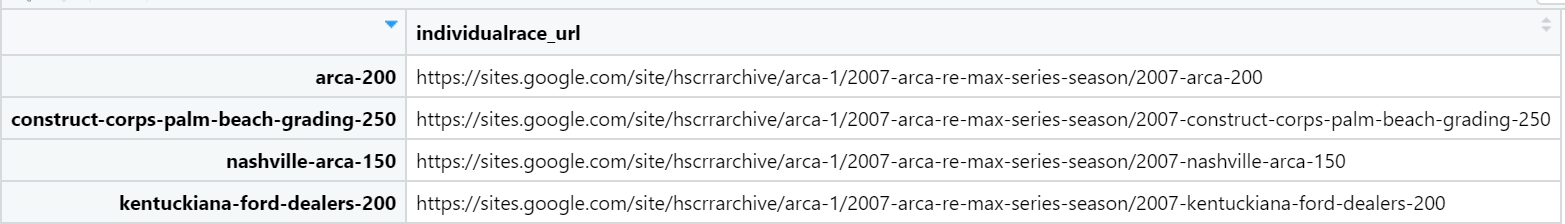

I scraped a website already and made a dataframe from it that only contains one column. The dataframe is called "urldataframe", while the column that contains all of the urls is called "individualrace_url".

Here is some of my data. The links are currently stored as character strings in the dataframe, urldataframe.

Here are the first two links: https://sites.google.com/site/hscrrarchive/arca-1/2007-arca-re-max-series-season/2007-arca-200 https://sites.google.com/site/hscrrarchive/arca-1/2007-arca-re-max-series-season/2007-construct-corps-palm-beach-grading-250

How do I create a scraper that goes through my dataframe of links one by one? I'm not sure if a for loop is the way to go about this or not. If I can use a for loop, what am I doing wrong?

res_all <- NULL

for (realtest.Event in racename) {

urlrunning = paste0(realtest.Event)

scrapinghere = read_html(urlrunning)

putithere <- tibble(

bubba = scrapinghere %>% html_nodes("#sites-canvas-main-content td:nth-child(2)") %>% html_text(),

bubba2 = scrapinghere %>% html_node("#sites-canvas-main-content td:nth-child(1)")) %>% html_text()

res_all <- bind_rows(res_all, putithere)

}

I was hoping it would loop through each URL in the dataframe. Every URL has the same nodes, so I'm pretty sure my issue is in setting up the loop itself.

CodePudding user response:

A for loop is fine. In your case, I think the closing parenthesis of tibble() is in the wrong place: it sits before the last %>% html_text(), so html_text() gets applied to the whole tibble instead of to the node. Another pattern I like is purrr::map_dfr, which returns a data.frame. Here is my untested code, as no data was provided:

library(purrr)

library(rvest)

library(tibble)

res_all <- set_names(racename) %>%

map_dfr(function(realtest.Event) {

scrapinghere = read_html(realtest.Event)

tibble(

bubba = scrapinghere %>% html_nodes("#sites-canvas-main-content td:nth-child(2)") %>% html_text(),

bubba2 = scrapinghere %>% html_node("#sites-canvas-main-content td:nth-child(1)") %>% html_text()

)

}, .id = "racename")

I've used the .id argument to add a column to the returned data.frame holding the value of realtest.Event (set_names() names each element by its own URL), so that you know which URL each row of results belongs to.
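If you would rather keep the for loop, a repaired version might look roughly like the sketch below. To make it runnable without network access, two small literal HTML strings with placeholder rows stand in for the pages (read_html() accepts literal HTML as well as URLs); in real use you would presumably loop over urldataframe$individualrace_url instead. The pages vector, the results list and the page column are my own additions for illustration, not something from the question.

```r
library(rvest)   # read_html(), html_nodes(), html_node(), html_text(), %>%
library(dplyr)   # tibble(), bind_rows()

# Assumption: in real use this would be urldataframe$individualrace_url.
# These literal HTML strings are placeholders that mimic the page structure.
pages <- c(
  '<div id="sites-canvas-main-content"><table>
     <tr><td>1</td><td>Driver A</td></tr>
     <tr><td>2</td><td>Driver B</td></tr>
   </table></div>',
  '<div id="sites-canvas-main-content"><table>
     <tr><td>1</td><td>Driver C</td></tr>
   </table></div>'
)

# Pre-allocate one slot per page instead of growing res_all inside the loop
results <- vector("list", length(pages))

for (i in seq_along(pages)) {
  scrapinghere <- read_html(pages[[i]])

  results[[i]] <- tibble(
    page  = i,
    bubba = scrapinghere %>%
      html_nodes("#sites-canvas-main-content td:nth-child(2)") %>%
      html_text(),
    # html_node() (singular) returns only the first match, as in the question;
    # tibble() recycles that single value down the column
    bubba2 = scrapinghere %>%
      html_node("#sites-canvas-main-content td:nth-child(1)") %>%
      html_text()
  )  # tibble() closes here, after the last html_text()
}

# Combine the per-page tibbles once, after the loop
res_all <- bind_rows(results)
```

The only real fix compared with the question is where tibble() closes; collecting the pieces in a list and binding once at the end is just a common way to avoid repeatedly copying res_all.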

CodePudding user response:

Scraping the tables from the two links without an explicit loop:

library(tidyverse)

library(rvest)

library(janitor)

df <- tibble(

links = c("https://sites.google.com/site/hscrrarchive/arca-1/2007-arca-re-max-series-season/2007-arca-200",

"https://sites.google.com/site/hscrrarchive/arca-1/2007-arca-re-max-series-season/2007-construct-corps-palm-beach-grading-250")

)

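get_ARCA <- function(link) {

link %>%

read_html() %>%

html_table() %>% # every table on the page, as a list

pluck(4) %>% # the fourth table holds the race results

row_to_names(1) %>% # promote the first row to column names

clean_names() # convert the names to snake_case

}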

map_dfr(df$links, get_ARCA)

# A tibble: 81 × 9
   finish start car_number driver            sponsor                                make      laps  led   status
   <chr>  <chr> <chr>      <chr>             <chr>                                  <chr>     <chr> <chr> <chr>
 1 1      2     5          Bobby Gerhart     Lucas Oil                              Chevrolet 80    54    Running
 2 2      20    93         Marc Mitchell     Ergon                                  Pontiac   80    5     Running
 3 3      12    3          Jeremy Clements   Harrison's Work Wear-1 Stop Conv-Saxon Chevrolet 80    0     Running
 4 4      13    39         David Ragan       AAA                                    Ford      80    0     Running
 5 5      3     46         Frank Kimmel      Tri-State Motorsports-Pork             Ford      80    0     Running
 6 6      19    31         Timothy Peters    Cometic Gaskets-Okuma                  Chevrolet 80    0     Running
 7 7      31    16         Justin Allgaier   AG Tech-Trashman-Hoosier Tire Midwest  Chevrolet 80    0     Running
 8 8      14    4          Scott Lagasse Jr. Cunningham Motorsports                 Dodge     80    0     Running
 9 9      11    47         Phillip McGilton  SI Performance-Gould's Electric        Chevrolet 80    0     Running
10 10     17    2          Michael McDowell  Hillcrest Capital Partners             Dodge     80    0     Running
# … with 71 more rows
# ℹ Use `print(n = ...)` to see more rows
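When mapping over a whole column of links rather than two, a single page that fails to load would normally abort the entire run. One possible way around that is to wrap the scraping function in purrr::possibly(). The sketch below shows the pattern with a hypothetical stand-in, fake_get_ARCA(), and placeholder example.com links so that it runs offline; in practice you would presumably wrap get_ARCA itself and pass urldataframe$individualrace_url.

```r
library(purrr)   # possibly(), map(), set_names(), %>%
library(dplyr)   # tibble(), bind_rows()

# Hypothetical stand-in for get_ARCA(): returns a one-row tibble, or raises an
# error for a link containing "broken" to mimic a page that cannot be read.
fake_get_ARCA <- function(link) {
  if (grepl("broken", link)) stop("page could not be read: ", link)
  tibble(ok = TRUE)
}

# Placeholder links; in real use this would be urldataframe$individualrace_url
links <- c(
  "https://example.com/race-1",
  "https://example.com/broken",
  "https://example.com/race-3"
)

# possibly() returns the fallback value (NULL here) instead of stopping on error
safe_get <- possibly(fake_get_ARCA, otherwise = NULL)

all_races <- links %>%
  set_names() %>%           # name each element by its own URL
  map(safe_get) %>%         # failed pages become NULL
  bind_rows(.id = "link")   # NULLs are dropped; names fill the link column
```

Any link missing from all_races$link afterwards is one that failed, so you can retry or inspect those separately.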