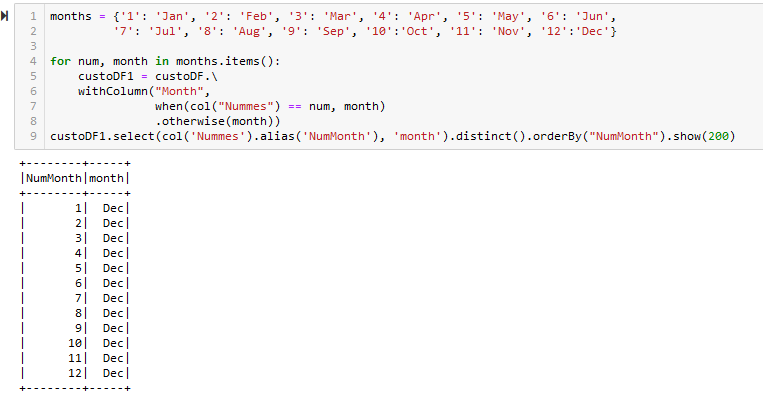

I want to avoid repeating the .when function 12 times, so I tried driving it from a dictionary. I don't know whether this is a limitation of the Spark function or a logic error in my code. Does the function allow this kind of concatenation?

months = {'1': 'Jan', '2': 'Feb', '3': 'Mar', '4': 'Apr', '5': 'May', '6': 'Jun',
          '7': 'Jul', '8': 'Aug', '9': 'Sep', '10': 'Oct', '11': 'Nov', '12': 'Dec'}

for num, month in months.items():
    custoDF1 = custoDF.\
        withColumn("Month",
                   when(col("Nummes") == num, month)
                   .otherwise(month))

custoDF1.select(col('Nummes').alias('NumMonth'), 'month').distinct().orderBy("NumMonth").show(200)

CodePudding user response:

You can use the replace method of the DataFrame class:

import pyspark.sql.functions as F

months = {'1': 'Jan', '2': 'Feb', '3': 'Mar', '4': 'Apr', '5': 'May', '6': 'Jun',
          '7': 'Jul', '8': 'Aug', '9': 'Sep', '10': 'Oct', '11': 'Nov', '12': 'Dec'}

df = (df.withColumn('month', F.col('NumMonth').cast('string'))
        .replace(months, subset=['month']))

df.show()

+--------+-----+
|NumMonth|month|
+--------+-----+
|       1|  Jan|
|       2|  Feb|
|       3|  Mar|
|       4|  Apr|
|       5|  May|
|       6|  Jun|
|       7|  Jul|
|       8|  Aug|
|       9|  Sep|
|      10|  Oct|
|      11|  Nov|
|      12|  Dec|
+--------+-----+

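Here I had to cast NumMonth to string because the keys in your months dictionary are strings. Note that DataFrame.replace expects the values being replaced and their replacements to have the same type, so switching the keys to integers would not let you skip the cast with this method; mapping an integer column directly to month names needs a different approach, such as a map-column lookup or chained when calls.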