Before I get dinged for a dup: I've actually gone through many of the other posts on soft memory limit errors, and they never really explain what the common causes are. My question here is what could be causing this: whether it's just one function, or whether it could be my YAML settings or being slammed by bots.

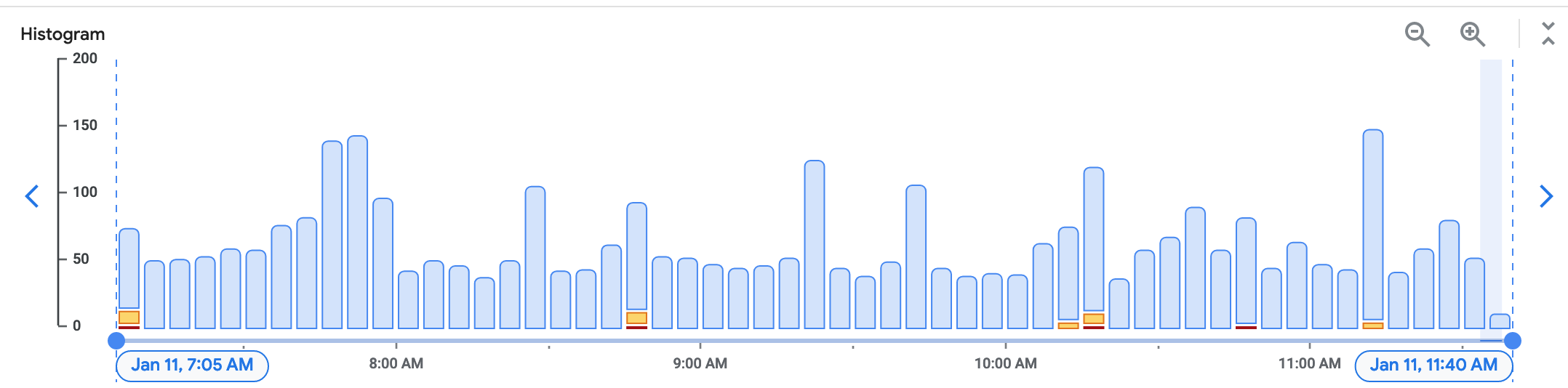

Here are my logs histogram:

As you can see, I'm getting an error like this about once an hour, plus some intermittent warnings, but they're not the lion's share of the service's requests. I recently learned that this has been happening since early December, and increasingly often. I figured it was just inefficient code (Python/Flask), so I refactored my index page, but even after a serious refactor the error is still happening and hasn't diminished significantly:

```
Exceeded soft memory limit of 256 MiB with 280 MiB after servicing 956 requests total. Consider setting a larger instance class in app.yaml.
293 MiB after servicing 1317 requests
260 MiB after servicing 35 requests
```

The strange thing is that it's happening on paths like `/apple-touch-icon.png` that should just return a 404.
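One way to see whether memory creeps up across requests, rather than spiking on one particular page, is to log the process's memory after every request. The error reports a running total ("after servicing 956 requests total"), so one plausible reading is that the path in the log is simply the request during which accumulated memory crossed 256 MiB. A minimal sketch of a WSGI middleware for this, assuming a Linux/Unix runtime; `MemoryLoggingMiddleware`, `rss_mib`, and the `memwatch` logger name are hypothetical, and the figure it reports only approximates whatever App Engine itself measures.

```python
import logging
import os
import resource
import sys

logger = logging.getLogger("memwatch")  # hypothetical logger name


def rss_mib():
    """Approximate this process's memory use in MiB.

    Reads the current resident set size from /proc/self/statm on Linux;
    elsewhere on Unix falls back to the *peak* RSS from getrusage().
    """
    try:
        with open("/proc/self/statm") as f:
            resident_pages = int(f.read().split()[1])  # 2nd field = resident pages
        return resident_pages * os.sysconf("SC_PAGE_SIZE") / (1024 * 1024)
    except (OSError, ValueError, IndexError):
        peak = resource.getrusage(resource.RUSAGE_SELF).ru_maxrss
        # ru_maxrss is reported in bytes on macOS and in KiB on Linux.
        return peak / (1024 * 1024 if sys.platform == "darwin" else 1024)


class MemoryLoggingMiddleware:
    """Hypothetical WSGI middleware: log memory before/after each request."""

    def __init__(self, app, log=logger):
        self.app = app
        self.log = log
        self.count = 0  # requests served by this process so far

    def __call__(self, environ, start_response):
        before = rss_mib()
        result = self.app(environ, start_response)
        self.count += 1
        after = rss_mib()
        # Measured once the app returns, before the body is sent to the
        # client; precise enough to spot which paths grow memory over time.
        self.log.info(
            "request #%d %s: %.1f MiB -> %.1f MiB",
            self.count, environ.get("PATH_INFO", "?"), before, after,
        )
        return result
```

In a Flask app it can be attached with `app.wsgi_app = MemoryLoggingMiddleware(app.wsgi_app)`, the same wrapping pattern Flask's documentation uses for WSGI middleware; the logging level has to allow INFO messages for anything to appear. If the logged figure climbs steadily across ordinary requests, that would point to something accumulating (a module-level cache, for example) rather than to the 404 handler itself.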

Here are some other things that may be causing the problem. First, my app.yaml file has settings that I added before my site was as popular, and they are extremely lean, to say the least:

```yaml
# instance_class: F1 (default)
automatic_scaling:
  max_instances: 3
  min_pending_latency: 5s
  max_pending_latency: 8s
  #max_concurrent_requests: 20
  target_cpu_utilization: 0.75
  target_throughput_utilization: 0.9
```

The small instance class, the min/max pending latency, and the CPU utilization target are all deliberately set for slower service, but I'm not made of money, and the site isn't generating revenue.

Second, looking at the logs recently, I can see I'm getting absolutely slammed by web crawlers. I've added them to robots.txt:

```
User-Agent: MJ12bot
Crawl-Delay: 20

User-Agent: AhrefsBot
Crawl-Delay: 20

User-Agent: SemrushBot
Crawl-Delay: 20
```
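Python's standard library can parse these rules, which is a quick way to confirm that each `Crawl-Delay` line is attached to the intended `User-Agent`. A sketch using `urllib.robotparser` (the helper `crawl_delays` is a hypothetical name). Python's parser discards a `User-Agent` line that is followed by a blank line, so each group has to be written without blank lines inside it. `Crawl-delay` is also a non-standard directive, so whether a given crawler honors it is up to that crawler.

```python
from urllib.robotparser import RobotFileParser

# The rules from above, one directive per line, groups separated by blank lines.
ROBOTS_TXT = """\
User-Agent: MJ12bot
Crawl-Delay: 20

User-Agent: AhrefsBot
Crawl-Delay: 20

User-Agent: SemrushBot
Crawl-Delay: 20
"""


def crawl_delays(robots_txt, agents):
    """Return {agent: crawl delay in seconds, or None if no group applies}."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.crawl_delay(agent) for agent in agents}


print(crawl_delays(ROBOTS_TXT, ["MJ12bot", "AhrefsBot", "SemrushBot", "Googlebot"]))
# {'MJ12bot': 20, 'AhrefsBot': 20, 'SemrushBot': 20, 'Googlebot': None}
```

`Googlebot` gets `None` because the file has no `*` group, so crawlers not named explicitly have no delay at all.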

It looks like all but Semrush have died down a bit.
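If a crawler keeps requesting pages despite `robots.txt`, another option is to refuse it before the request reaches any Flask view. A minimal sketch, assuming the three bots above identify themselves honestly in the `User-Agent` header; the class name and the substring list are hypothetical. `/robots.txt` is let through so the crawlers can still read the rules.

```python
# Hypothetical blocklist: lowercase substrings matched against User-Agent.
BLOCKED_AGENT_SUBSTRINGS = ("mj12bot", "ahrefsbot", "semrushbot")


class BlockCrawlersMiddleware:
    """Hypothetical WSGI middleware: answer 403 to listed crawlers early."""

    def __init__(self, app, blocked=BLOCKED_AGENT_SUBSTRINGS):
        self.app = app
        self.blocked = tuple(s.lower() for s in blocked)

    def __call__(self, environ, start_response):
        agent = environ.get("HTTP_USER_AGENT", "").lower()
        path = environ.get("PATH_INFO", "")
        # Let robots.txt through so well-behaved bots can still read it.
        if path != "/robots.txt" and any(t in agent for t in self.blocked):
            body = b"Forbidden\n"
            start_response("403 Forbidden", [
                ("Content-Type", "text/plain"),
                ("Content-Length", str(len(body))),
            ])
            return [body]
        return self.app(environ, start_response)
```

It attaches like any WSGI middleware: `app.wsgi_app = BlockCrawlersMiddleware(app.wsgi_app)`. Matching on a user-agent substring is crude and easy to spoof, but it is cheap, and a plain 403 costs less than rendering a page; whether fewer crawler hits also means fewer memory errors depends on what is actually growing.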

Anyway, any thoughts? Do I just need to upgrade to F2, or is there something in the settings that I've definitely got wrong?

Again, I've seriously refactored the main pages that trigger the alert, but it doesn't seem to have helped. The real issue is that I'm a coder without a networking background, so I honestly don't even know what's happening.

Answer:

In my experience, only really simple apps will fit in an F1 without periodic memory errors. I don't know if the cause is Python or GAE, but memory cleanup does not work well on Python/GAE.
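One way to check whether memory is really being retained between requests in your own code (as opposed to, say, a cache that only ever grows) is to compare `tracemalloc` snapshots taken around a repeated workload. A sketch under that assumption; `top_growth`, `_cache`, and `leaky_view` are hypothetical names, with `leaky_view` standing in for a view that keeps appending to a module-level list.

```python
import gc
import tracemalloc


def top_growth(workload, repeats=100, limit=5):
    """Run `workload` repeatedly and return the source lines whose retained
    memory grew the most, as tracemalloc.StatisticDiff objects.

    A full gc.collect() runs before each snapshot, so garbage that simply
    hasn't been collected yet does not show up as growth.
    """
    tracemalloc.start()
    try:
        gc.collect()
        before = tracemalloc.take_snapshot()
        for _ in range(repeats):
            workload()
        gc.collect()
        after = tracemalloc.take_snapshot()
    finally:
        tracemalloc.stop()
    # compare_to() sorts by absolute size difference; keep only growth.
    stats = after.compare_to(before, "lineno")
    return [s for s in stats if s.size_diff > 0][:limit]


_cache = []  # hypothetical module-level cache that is never trimmed


def leaky_view():
    _cache.append(bytearray(10_000))  # each call retains ~10 KB


for stat in top_growth(leaky_view):
    print(stat)
```

Because `workload` can be any callable, a Flask route could be exercised with something like `lambda: client.get("/")`, where `client = app.test_client()`. If nothing shows up as retained but instance memory still climbs, that would fit the answer's point: CPython does not always hand freed memory back to the operating system, so the process can stay large even after objects are released.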

That said, GAE automatically restarts instances when there is a memory error, so you can probably ignore it unless occasional slow responses to end users are a deal breaker for you.

I would just upgrade to F2 unless you are really on a budget.