I don't know how to merge multiple .txt files with datas into one .csv file each of the .txt file seperated into columns.

This is my code so far,

$location = (Get-Location).Path

$files = Get-ChildItem $location -Filter "*.asd.txt"

$data = @()

foreach ($file in $files) {

$fileData = Get-Content $file.FullName

foreach ($line in $fileData) {

$lineData = $line -split "\t"

$data = $lineData[1]

Add-Content -Path "$location\output.csv" -Value $data

}

}

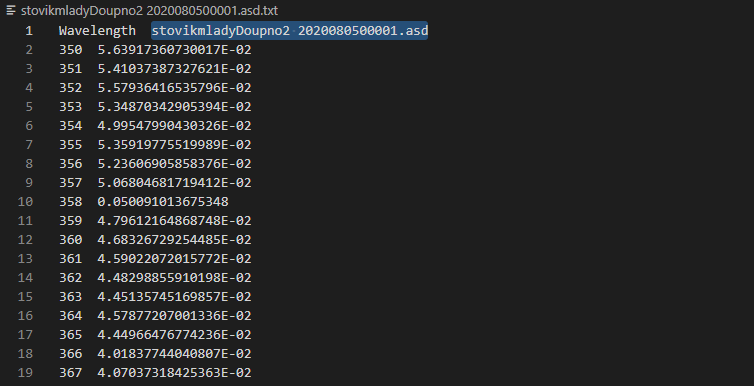

Each of the file looks like this

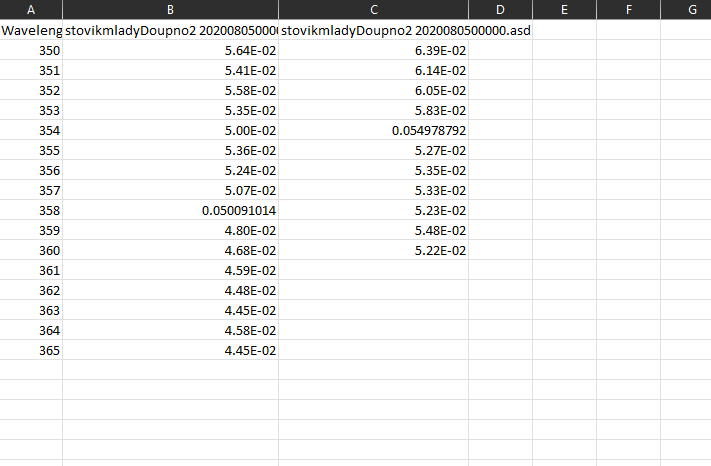

I want to keep the first column "WaveLength" and put the second columns next to each other from all the files in the folder. The header will start with the exac name "stovikmladyDoupno2 2020080500001.asd" or "stovikmladyDoupno2 2020080500002.asd" and so on ....

I have tried to look for information over two days and still don't know. I have tried to put "," on the end of the file, I though excel will handle with that, but nothing helped.

Here I provide few files as test data https://mega.nz/folder/zNhTzR4Z#rpc-BQdRfm3wxl87r9XUkw

few lines of data

Wavelength stovikmladyDoupno2 2020080500000.asd

350 6.38961399706465E-02

351 6.14107911262903E-02

352 6.04866108251357E-02

353 5.83485359067184E-02

354 0.054978792413247

355 5.27014859356317E-02

356 5.34849237528764E-02

357 5.32841277775603E-02

358 5.23466655229364E-02

359 5.47595002186027E-02

360 5.22061034631109E-02

361 4.90149806042666E-02

362 4.81633530421385E-02

363 4.83974076557941E-02

364 4.65219929658367E-02

365 0.044800930294557

366 4.47830287392802E-02

367 4.46947539436297E-02

368 0.043756926558447

369 4.31725380363072E-02

370 4.36867609723618E-02

371 4.33227601805265E-02

372 4.29978664449687E-02

373 4.23860463187361E-02

374 4.12183604375401E-02

375 4.14306521081773E-02

376 4.11760903772502E-02

377 4.06421127128478E-02

378 4.09771489689262E-02

379 4.10083126746385E-02

380 4.05161601354181E-02

381 3.97904564387456E-02

CodePudding user response:

I assumed a location since I'm not fond of declaring file paths without a literal path. Please adjust path as needed.

$Files = Get-ChildItem J:\Test\*.txt -Recurse

$Filecount = 0

$ObjectCollectionArray = @()

#Fist parse and collect each row in an array.. While keeping the datetime information from filename.

foreach($File in $Files){

$Filecount

Write-Host $Filecount

$DateTime = $File.fullname.split(" ").split(".")[1]

$Content = Get-Content $File.FullName

foreach($Row in $Content){

$Split = $Row.Split("`t")

if($Split[0] -ne 'Wavelength'){

$Object = [PSCustomObject]@{

'Datetime' = $DateTime

'Number' = $Split[0]

'Wavelength' = $Split[1]

}

$ObjectCollectionArray = $Object

}

}

}

#Match by number and create a new object with relation to the number and different datetime.

$GroupedCollection = @()

$Grouped = $ObjectCollectionArray | Group-Object number

foreach($GroupedNumber in $Grouped){

$NumberObject = [PSCustomObject]@{

'Number' = $GroupedNumber.Name

}

foreach($Occurance in $GroupedNumber.Group){

$NumberObject | Add-Member -NotePropertyName $Occurance.Datetime -NotePropertyValue $Occurance.wavelength

}

$GroupedCollection = $NumberObject

}

$GroupedCollection | Export-Csv -Path J:\Test\result.csv -NoClobber -NoTypeInformation

CodePudding user response:

What you're looking to do is quite a hard task, there are a few ways to do it. This method requires that all files are in memory to process them. You can definitely treat these files as TSVs, so Import-Csv -Delimiter "`t" is an option so you can deal with objects instead of plain text.

# using this temp dictionary to create objects for each line of each tsv

$tmp = [ordered]@{}

# get all files and enumerate

$csvs = Get-ChildItem $location -Filter *.asd.txt | ForEach-Object {

# get their content as objects

$content = $_ | Import-Csv -Delimiter "`t"

# get their property Name that is not `Wavelength`

$property = $content[0].PSObject.Properties.Where{ $_.Name -ne 'Wavelength' }.Name

# output an object holding the total lines of this csv,

# its content and the property name of interest

[pscustomobject]@{

Lines = $content.Count

Content = $content

Property = $property

}

}

# use a scriptblock to allow streaming so `Export-Csv` starts exporting as

# output is going through the pipeline

& {

# for loop used for each line of the Tsv having the highest number of lines

for($i = 0; $i -lt [System.Linq.Enumerable]::Max([int[]] $csvs.Lines); $i ) {

# this boolean is used to preserve the "Wavelength" value of the first Tsv

$isFirstCsv = $true

foreach($csv in $csvs) {

# if this is the first object

if($isFirstCsv) {

# add the value of "Wavelength"

$tmp['Wavelength'] = $csv.Content[$i].Wavelength

# and set the bool to false, since we are only using this once

$isFirstCsv = $false

}

# then add the value of each property of each Tsv to the temp dictionary

$tmp[$csv.Property] = $csv.Content[$i].($csv.Property)

}

# then output this object

[pscustomobject] $tmp

# clear the temp dictionary

$tmp.Clear()

}

} | Export-Csv path\to\result.csv -NoTypeInformation

CodePudding user response:

Here is a much more efficient approach that treats the files as plain text, this method is much faster and memory efficient however not as reliable. It uses StreamReader to read the file contents line-by-line and a StringBuilder to construct each line.

& {

# get all files and enumerate

$readers = Get-ChildItem $location -Filter *.asd.txt | ForEach-Object {

# create a stream reader for each file

[System.IO.StreamReader] $_.FullName

}

# this StringBuilder is used to construct each line

$sb = [System.Text.StringBuilder]::new()

# while any of the readers has more content

while($readers.EndOfStream -contains $false) {

# signals this is our first Tsv

$isFirstReader = $true

# enumerate each reader

foreach($reader in $readers) {

# if this is the first Tsv

if($isFirstReader) {

# append the line as-is, only trimming exces white space

$sb = $sb.Append($reader.ReadLine().Trim())

$isFirstReader = $false

# go to next reader

continue

}

# if this is not the first Tsv,

# split on Tab and exclude the first token (Wavelength)

$null, $line = $reader.ReadLine().Trim() -split '\t'

# append a Tab this line

$sb = $sb.Append("`t$line")

}

# append a new line and output the constructed string

$sb.AppendLine().ToString()

# and clear it for next lines

$sb = $sb.Clear()

}

# dispose all readers when done

$readers | ForEach-Object Dispose

} | Set-Content path\to\result.tsv -NoNewline