I am trying to feed small patches of satellite image data (Landsat-8 Surface Reflectance bands) into neural networks for my project. However, the downloaded image values range from 1 to 65535.

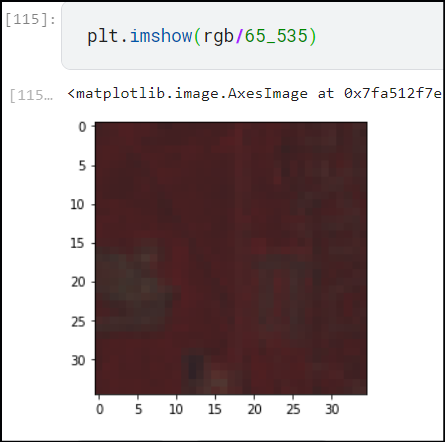

So I tried dividing the images by 65535 (the maximum value), but plotting them shows an almost entirely black/brown image like this!

But most of the images do not have values anywhere near 65535.
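Because most pixel values sit far below 65535, dividing by 65535 squeezes them into a narrow range close to zero, which plots as nearly black. A minimal sketch of per-band min-max scaling that uses the patch's own range instead. It assumes the patch is a NumPy array of shape (H, W) or channels-last (H, W, bands); the function name `minmax_scale` and the sample values are hypothetical:

```python
import numpy as np

def minmax_scale(image: np.ndarray) -> np.ndarray:
    """Rescale each band to [0, 1] using that band's own min and max."""
    img = image.astype(np.float32)
    # Reduce over the spatial axes only, so each band keeps its own range.
    lo = img.min(axis=(0, 1), keepdims=True)
    hi = img.max(axis=(0, 1), keepdims=True)
    # Constant bands would divide by zero; use 1.0 so they map to 0.
    span = np.where(hi > lo, hi - lo, 1.0)
    return (img - lo) / span

# Hypothetical patch whose values are well below 65535.
patch = np.array([[7000, 9000], [11000, 15000]], dtype=np.uint16)
print((patch / 65535).max())  # all values end up below about 0.23
print(minmax_scale(patch))    # spans the full 0..1 range
```

Because each patch is scaled by its own minimum and maximum, the same reflectance can map to different values in different patches, so for network input a fixed, dataset-wide scaling may be preferable.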

Without any normalization, the image looks all white.

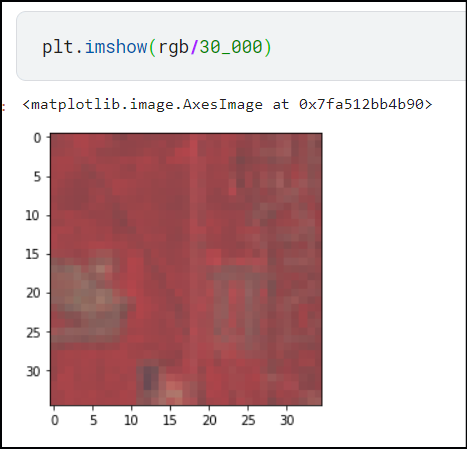

Dividing the image by 30,000 looks like this.
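Dividing by a fixed constant such as 30,000 keeps the scaling identical for every patch, but any pixel brighter than the constant ends up above 1.0. A minimal sketch that makes that saturation explicit by clipping to [0, 1]; `fixed_scale` is a hypothetical helper and the default divisor simply mirrors the 30,000 tried above:

```python
import numpy as np

def fixed_scale(image: np.ndarray, divisor: float = 30000.0) -> np.ndarray:
    """Divide by one constant for all patches and clip to [0, 1]."""
    scaled = image.astype(np.float32) / divisor
    # Pixels brighter than `divisor` saturate at 1.0 instead of exceeding it.
    return np.clip(scaled, 0.0, 1.0)

values = np.array([0, 15000, 30000, 45000], dtype=np.uint16)
print(fixed_scale(values))
```

The trade-off is that the divisor has to be chosen by hand: too large and the image stays dark, too small and bright areas saturate.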

If the images are too dark or too light, my network may not perform as intended.

My question: is dividing the image by the maximum possible value (65535) the only solution, or are there other ways to normalize images, especially satellite data?

Please help me with this.

Answer:

To answer your question, though: there are other ways to normalize images. The most common is standardization (subtract the mean and divide by the standard deviation).

Using NumPy:

```python
import numpy as np
image = (image - np.mean(image)) / np.std(image)  # zero mean, unit standard deviation
```

As I mentioned in a clarifying comment, you want the normalization method to match how the NN's training set was normalized.
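One way to keep the normalization consistent is to compute per-band statistics once on the training patches and reuse them for every later patch. A minimal sketch, assuming patches are stacked as an array of shape (N, H, W, bands); the helper names `fit_band_stats` and `standardize` are hypothetical, and the random data is only a stand-in for real Landsat patches:

```python
import numpy as np

def fit_band_stats(train_patches: np.ndarray):
    """Per-band mean and std over all training pixels, shape (N, H, W, C)."""
    x = train_patches.astype(np.float64)
    mean = x.mean(axis=(0, 1, 2))
    std = x.std(axis=(0, 1, 2))
    return mean, std

def standardize(patches: np.ndarray, mean: np.ndarray, std: np.ndarray,
                eps: float = 1e-8) -> np.ndarray:
    """Apply the *training* statistics; broadcasting works over the last axis."""
    z = (patches.astype(np.float32) - mean) / (std + eps)
    return z.astype(np.float32)

# Synthetic stand-in for uint16 training patches with 4 bands.
rng = np.random.default_rng(0)
train = rng.integers(1, 60000, size=(8, 16, 16, 4), dtype=np.uint16)
mean, std = fit_band_stats(train)
train_z = standardize(train, mean, std)

# New patches (validation, inference) reuse the same mean and std.
new_z = standardize(train[:2], mean, std)
```

Computing the statistics per band rather than over the whole stack matters for multispectral data, since different bands can have quite different value ranges.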

Answer:

Here is a blog post dealing with the normalization of satellite data.

The article tests a few methods. I hope this helps.
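One method commonly used for displaying satellite imagery is a percentile stretch: clip each band to, for example, its 2nd and 98th percentiles and rescale that range to [0, 1], so a few very bright or dark pixels do not dominate the scaling. It is not necessarily one of the methods the article tests. A minimal sketch, assuming a (H, W) or channels-last (H, W, bands) NumPy array; `percentile_stretch` and the 2/98 defaults are hypothetical choices:

```python
import numpy as np

def percentile_stretch(image: np.ndarray, lower: float = 2.0,
                       upper: float = 98.0) -> np.ndarray:
    """Map each band's [lower, upper] percentile range to [0, 1], clipping outliers."""
    img = image.astype(np.float32)
    lo = np.percentile(img, lower, axis=(0, 1), keepdims=True)
    hi = np.percentile(img, upper, axis=(0, 1), keepdims=True)
    span = np.where(hi > lo, hi - lo, 1.0)  # guard against constant bands
    return np.clip((img - lo) / span, 0.0, 1.0)

band = np.arange(101, dtype=np.uint16).reshape(101, 1)  # toy single-band "image"
stretched = percentile_stretch(band)
```

For network input rather than display, the percentile bounds could be computed once on the training set and reused, for the same consistency reason given in the first answer.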