I have a requirement where I have to select a city in two columns from the JSON where city exists with the key "City". The first value in the table is coming up as null, not able to find a possible solution

val jsonString="""{"Zipcode":704,"ZipCodeType":"STANDARD","City":"PARC PARQUE","State":"PR"}"""

val data = Seq((1, jsonString))

import spark.implicits._

val df=data.toDF("id","value")

df.show(false)

import org.apache.spark.sql.types.{StringType, StructType}

val schema = new StructType()

.add("Zipcode", StringType, true)

.add("ZipCodeType", StringType, true)

.add("City", StringType, false)

.add("City", StringType, true)

val df4=df.withColumn("value",from_json(col("value"),schema))

df4.printSchema()

df4.show(false)

val df5=df4.select(col("id"),col("value.*"))

df5.printSchema()

df5.show()

Actual Result:

| id|Zipcode|ZipCodeType|City| City|

| 1| 704| STANDARD|null|PARC PARQUE|

Expected Result:

| id|Zipcode|ZipCodeType|City| City|

| 1| 704| STANDARD|PARC PARQUE|PARC PARQUE|

CodePudding user response:

I think you are overcomplicating your problem here. Just use the spark inbuilt functions and you should be fine.

I would recommend using spark.read.json function to do your processing.

Here, is what i did.

val jsonStr = """{"Zipcode":704,"ZipCodeType":"STANDARD","City":"PARC PARQUE","State":"PR"}"""

import spark.implicits._

val df = spark.read.json(Seq(jsonStr).toDS) // this function has overloaded implementation as well so that you can also read json files directly from HDFS/S3

// if you do df.printSchema, it wonn't have a duplicate columns

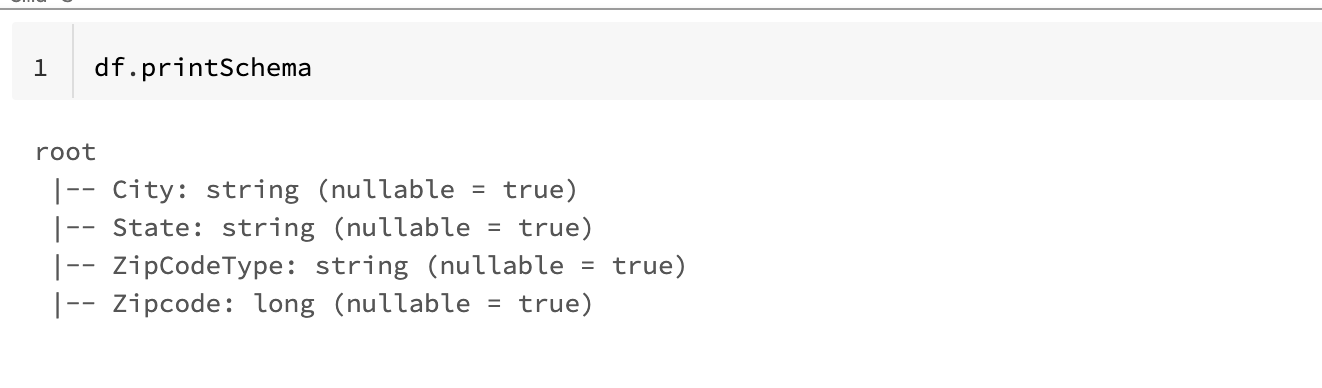

df.printSchema

The output will look something like this. As you can see there is no duplicate City field