I know that on Databricks we get the following cluster logs:

- stdout

- stderr

- log4j

Just as we have slf4j logging in Java, I wanted to know how I could add my own logs from a Scala notebook.

I tried adding the code below to the notebook, but the message doesn't get printed in the log4j logs.

import com.typesafe.scalalogging.Logger

import org.slf4j.LoggerFactory

val logger = Logger(LoggerFactory.getLogger("TheLoggerName"))

logger.debug("****************************************************************** Useful message....")

CodePudding user response:

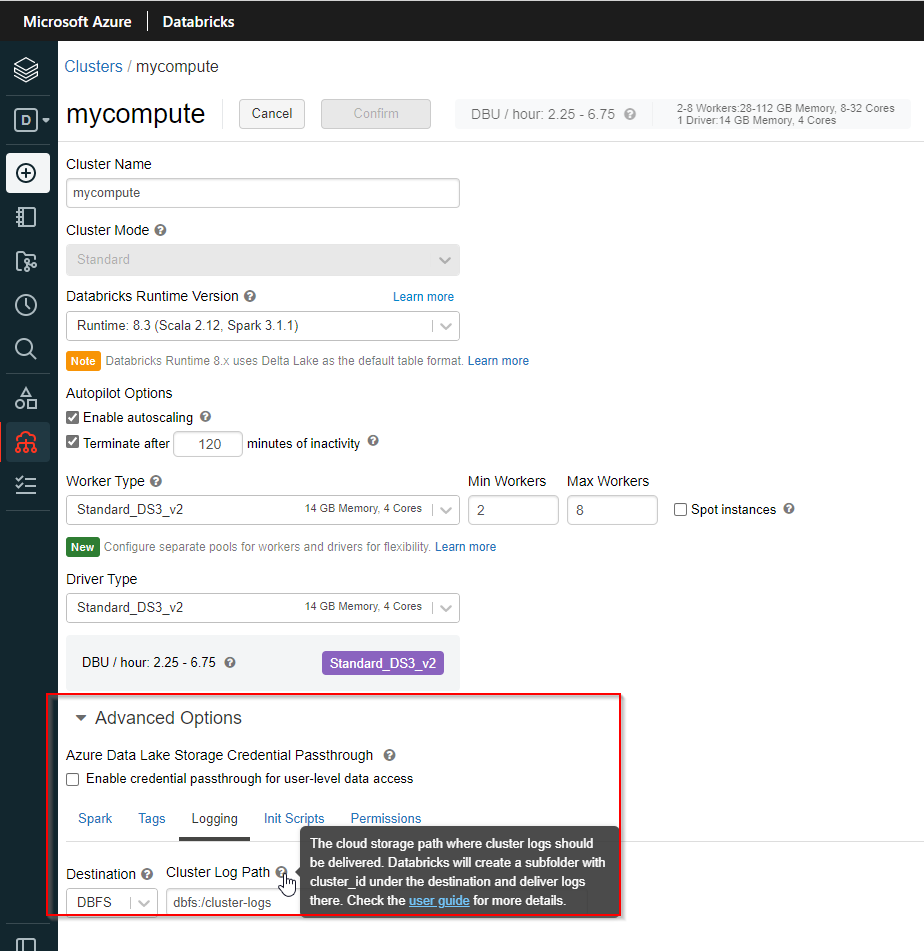

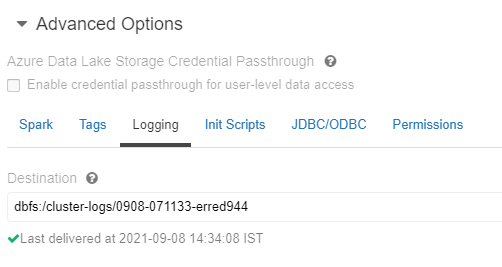

When you create your cluster in Databricks, there is a tab where you can specify the log directory (empty by default).

Logs are written to DBFS, so you just have to specify the directory you want.

You can use code like the following in a Databricks notebook.

import org.apache.log4j.Logger

// Create a custom log4j logger and log messages at several levels

val logger = Logger.getLogger(this.getClass)

logger.debug("this is a debug log message")

logger.info("this is an information log message")

logger.warn("this is a warning log message")

logger.trace("this is a TRACE log message")
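Note that the debug and trace calls above may still not show up in the log4j output. One likely reason, and this is an assumption about the cluster's default configuration rather than something confirmed here, is that the effective log level is INFO, so anything below it is dropped. A minimal sketch that lowers the level for a single logger, using the log4j 1.x-style API (`NotebookLogger` is a hypothetical name chosen for illustration):

```scala
import org.apache.log4j.{Level, Logger}

// "NotebookLogger" is a hypothetical logger name used only in this example.
val logger = Logger.getLogger("NotebookLogger")

// Assumption: the cluster default level is INFO, which filters out DEBUG and TRACE.
// Lowering the level on this one logger lets DEBUG messages through
// without making every other logger on the driver more verbose.
logger.setLevel(Level.DEBUG)

logger.debug("this debug message is now eligible to be written")
logger.info("this info message is written as before")
logger.trace("this trace message is still dropped, because TRACE is below DEBUG")
```

The level set this way applies only to the running driver and is lost when the cluster restarts. If slf4j on the cluster is backed by log4j, which is an assumption, setting the level on the log4j logger with the same name as the slf4j logger should have the same effect for the code in the question.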

See "How to overwrite log4j configurations on Azure Databricks clusters".