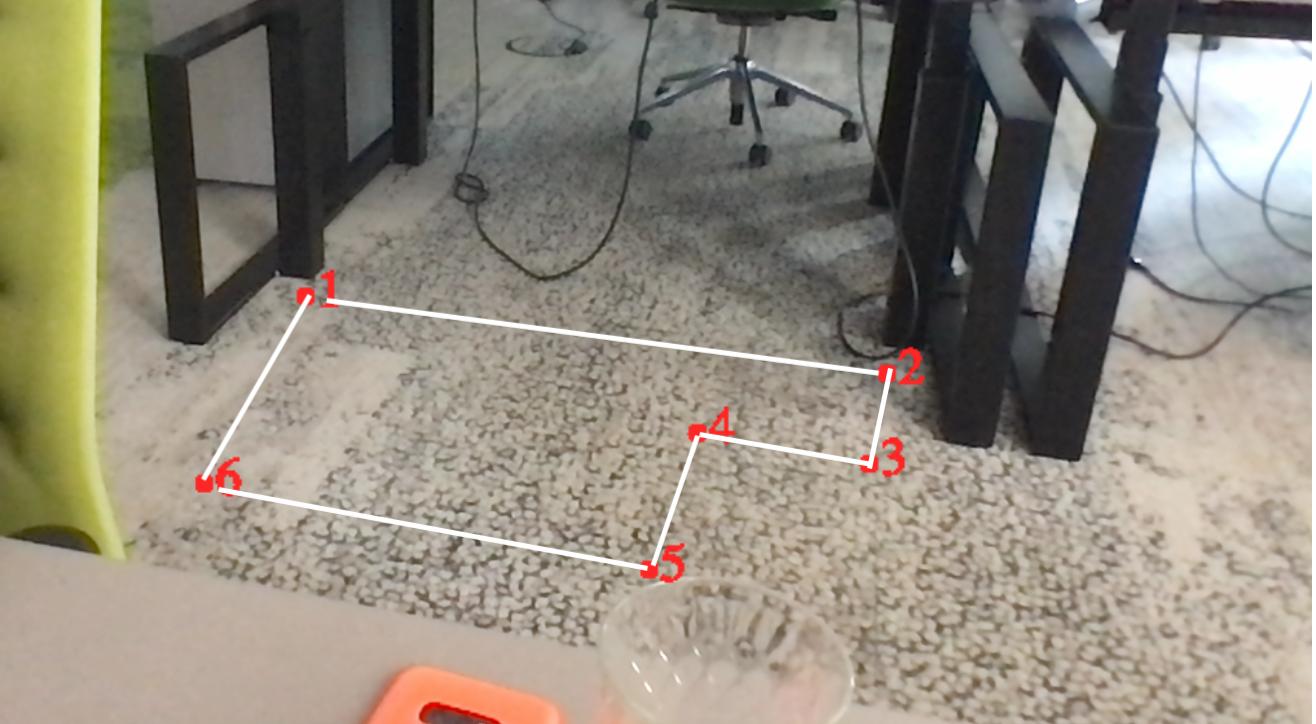

I have an array of coordinates that mark an area on the floor.

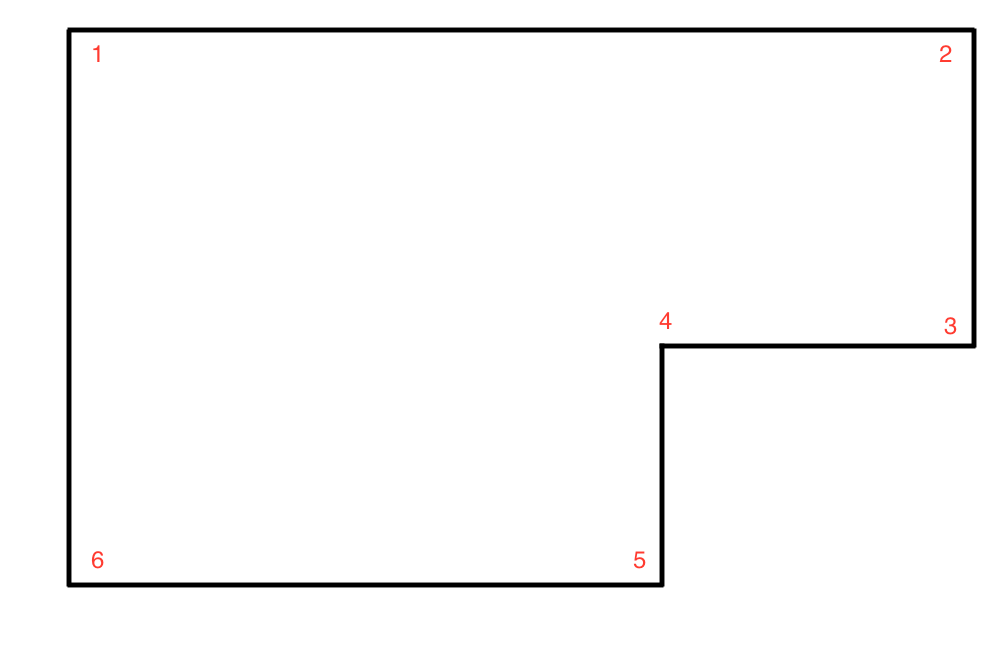

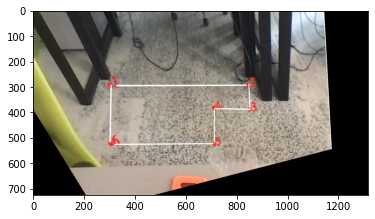

I want to generate a new array in which all the coordinates are transformed, so that I get a warped array. The points should look like the following image. Please note that I want to generate the graphic from the new array: it does not exist yet and only gets generated once I have the new array.

I know the distances between consecutive points, if that helps. The coordinates JSON looks like this, where distance_to_next is the distance to the next point in cm:

    [
      {"x": 295, "y": 228, "distance_to_next": 200},
      {"x": 559, "y": 263, "distance_to_next": 30},
      {"x": 551, "y": 304, "distance_to_next": 50},
      {"x": 473, "y": 290, "distance_to_next": 70},
      {"x": 451, "y": 352, "distance_to_next": 150},
      {"x": 249, "y": 313, "distance_to_next": 100}
    ]

The first point is always in the top left.

I'm using Python and OpenCV (cv2), and I know about various functions like cv.warpAffine, cv.warpPerspective, cv.findHomography, cv.perspectiveTransform, etc., but I'm not sure which one to use here.

Can someone point me in the right direction? Am I missing something obvious?

Answer:

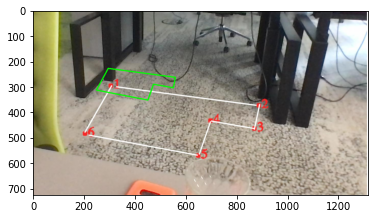

The points in your coordinates JSON do not align with the white polygon in the image. If I draw them, I get the green polygon shown below:

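    import cv2
    import numpy as np
    import matplotlib.pyplot as plt
    # Jupyter-only magic; drop this line when running as a plain script
    %matplotlib inline

    # Load the image (OpenCV reads it in BGR order)
    img = cv2.imread('./input.jpg')

    # Work on a copy, converted to RGB so matplotlib shows the right colors
    img_copy = np.copy(img)
    img_copy = cv2.cvtColor(img_copy, cv2.COLOR_BGR2RGB)

    # The six points from the question, in the (N, 1, 2) shape polylines expects
    pts = np.array([[295, 228], [559, 263], [551, 304], [473, 290], [451, 352], [249, 313]], np.int32)
    pts = pts.reshape((-1, 1, 2))

    # Draw the closed polygon in green
    img_copy2 = cv2.polylines(img_copy, [pts], True, (0, 255, 0), thickness=3)
    plt.imshow(img_copy2)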

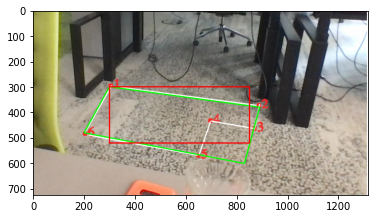

So I manually found the approximate corner coordinates of your white polygon and overlaid them on it in green. You also need the coordinates of the desired (rectified) polygon, which I drew in red.

    img = cv2.imread('./input.jpg')
    img_copy = np.copy(img)
    img_copy = cv2.cvtColor(img_copy, cv2.COLOR_BGR2RGB)

    # Approximate corners of the white polygon in the image (found by hand), drawn in green
    input_pts = np.array([[300, 300], [890, 380], [830, 600], [200, 480]], np.int32)
    pts = input_pts.reshape((-1, 1, 2))
    img_copy3 = cv2.polylines(img_copy, [pts], True, (0, 255, 0), thickness=3)

    # Corners of the desired rectangle, drawn in red (the image is RGB here)
    output_pts = np.array([[300, 300], [850, 300], [850, 520], [300, 520]], np.int32)
    pts = output_pts.reshape((-1, 1, 2))
    img_copy3 = cv2.polylines(img_copy3, [pts], True, (255, 0, 0), thickness=3)
    plt.imshow(img_copy3)
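The red target rectangle above was picked by eye. Since the question supplies the edge lengths, the target corners could instead be derived from them. The following is only a sketch: `outline_from_distances` and the `directions` list are hypothetical, and it assumes that every edge of the floor outline is axis-aligned and turns in the order right, down, left, down, left, up. That order is inferred from the pixel coordinates in the question, not stated in it.

```python
# Hypothetical helper: walk the outline edge by edge to get real-world
# coordinates (in cm) for each corner.
# ASSUMPTION: every edge is axis-aligned and the walking directions below
# match the floor shape; they are inferred from the question's pixel
# coordinates and are not given in the original post.
edges_cm = [200, 30, 50, 70, 150, 100]  # distance_to_next values from the question
directions = [(1, 0), (0, 1), (-1, 0), (0, 1), (-1, 0), (0, -1)]  # right, down, left, down, left, up


def outline_from_distances(lengths, steps, origin=(0, 0)):
    """Return one corner per edge, starting at origin (top-left, y pointing down)."""
    x, y = origin
    corners = []
    for length, (dx, dy) in zip(lengths, steps):
        corners.append((x, y))
        x += dx * length
        y += dy * length
    # A consistent set of lengths and directions must end where it started.
    if (x, y) != tuple(origin):
        raise ValueError("outline does not close; check lengths and directions")
    return corners


target_cm = outline_from_distances(edges_cm, directions)
print(target_cm)
```

The result is in centimetres with the first corner at the origin, so it would still need a scale factor and an offset to become pixel coordinates. Four of these corners could serve as destination points for cv2.getPerspectiveTransform; cv2.findHomography accepts four or more correspondences, so it could use all six.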

We need to use cv2.getPerspectiveTransform here. It takes exactly 4 point pairs as input, so 2 of the 6 points have to be discarded.

    img = cv2.imread('./input.jpg')
    img_copy = np.copy(img)
    img_copy = cv2.cvtColor(img_copy, cv2.COLOR_BGR2RGB)

    # getPerspectiveTransform expects float32 point arrays
    input_pts = np.float32(input_pts)
    output_pts = np.float32(output_pts)

    # Compute the 3x3 perspective transform M that maps input_pts onto output_pts
    M = cv2.getPerspectiveTransform(input_pts, output_pts)

    # Apply the perspective transformation to the image, keeping the original size
    out = cv2.warpPerspective(img_copy, M, (img_copy.shape[1], img_copy.shape[0]), flags=cv2.INTER_LINEAR)

    # Display the transformed image
    plt.imshow(out)