I was told that HDFS stores files split into blocks, each 128 MB in size.

Since the replication factor is 3, I assumed that each file could be no larger than 128 MB * 3 = 384 MB.

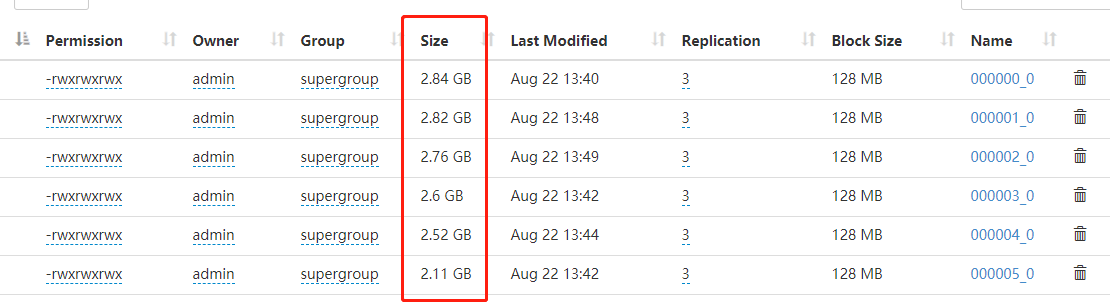

However, the NameNode (NN) web UI shows that files generated by Hive are almost 3 GB. Some files generated by Impala can even be over 30 GB.

Could anyone help me understand this... Thanks for your help in advance.

CodePudding user response:

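You don't really have to worry about blocks and where they're stored unless you're really optimising things; Hadoop manages all of this for you. The block size caps the size of a single block, not of a file: a file is split into as many blocks as it needs, so a 3 GB file is simply about 24 blocks of 128 MB. Replication stores extra copies of each block and doesn't change the file's size. The size column that you've highlighted is the size of all of the file's blocks combined, excluding replication.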
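To make the arithmetic concrete, here is a rough sketch of how file size, block count, and raw disk usage relate. It assumes the values from the question (128 MB blocks, replication factor 3); `hdfs_footprint` is a made-up helper for illustration, not part of any Hadoop API.

```python
# Assumed cluster settings, taken from the question rather than read from a real cluster.
BLOCK_SIZE = 128 * 1024**2   # 128 MB per block (the dfs.blocksize setting)
REPLICATION = 3              # copies kept of every block (the dfs.replication setting)
GIB = 1024**3


def hdfs_footprint(file_size, block_size=BLOCK_SIZE, replication=REPLICATION):
    """Return (number of blocks, raw bytes stored across the cluster) for one file.

    Hypothetical helper: it only reproduces the arithmetic, it does not talk to HDFS.
    """
    # Ceiling division: the last block may be partial and only holds the bytes it needs.
    num_blocks = -(-file_size // block_size)
    # Replication multiplies the disk usage, not the file size the UI reports.
    raw_bytes = file_size * replication
    return num_blocks, raw_bytes


for size in (3 * GIB, 30 * GIB):
    blocks, raw = hdfs_footprint(size)
    print(f"{size / GIB:.0f} GB file: {blocks} blocks, {raw / GIB:.0f} GB raw on disk")
```

So a 3 GB file from Hive is about 24 blocks, and a 30 GB file from Impala is about 240. The NameNode UI reports the 3 GB or 30 GB figure, while the cluster actually uses roughly three times that on disk.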