I have data that I have to classify as either 0 or 1. The data is loaded from an .npz file. It gives me training, validation, and test data. This is what they look like:

x_train = [[[ 0 0 0 ... 0 1 4]

[ 0 0 0 ... 4 25 2]

[ 6 33 15 ... 33 0 0]

...

[ 0 23 4 ... 9 31 0]

[ 4 0 0 ... 0 0 12]

[ 5 0 0 ... 3 0 0]]

[[ 88 71 59 ... 61 62 62]

[ 74 88 73 ... 59 70 60]

[ 69 61 85 ... 60 58 82]

...

[ 68 85 58 ... 55 75 72]

[ 69 69 70 ... 81 76 83]

[ 74 68 76 ... 60 74 72]]

[[ 87 134 146 ... 108 116 157]

[108 117 144 ... 102 58 122]

[124 148 106 ... 97 135 146]

...

[ 96 153 111 ... 104 129 154]

[129 140 100 ... 74 114 97]

[119 115 160 ... 172 84 148]]

...

[[ 92 96 64 ... 69 83 83]

[ 85 44 89 ... 115 94 76]

[ 93 103 91 ... 92 81 75]

...

[ 16 109 81 ... 84 95 20]

[100 27 89 ... 66 107 48]

[ 24 67 144 ... 104 115 123]]

[[ 69 70 74 ... 72 73 75]

[ 72 72 76 ... 73 75 76]

[ 74 75 72 ... 72 69 73]

...

[ 72 72 69 ... 72 76 72]

[ 70 72 73 ... 72 76 67]

[ 69 72 72 ... 72 71 71]]

[[ 65 137 26 ... 134 57 174]

[ 91 76 123 ... 39 63 124]

[ 81 203 134 ... 192 63 143]

...

[ 1 102 96 ... 33 63 169]

[ 82 32 108 ... 151 75 151]

[ 12 97 164 ... 101 125 60]]]

y_train:

[0 0 0 ... 0 0 0]

These are my input shapes:

x_train.shape = (5000, 128, 128)

y_train.shape = (5000,)

As you can see, y holds the labels and x is a 3D array of 5000 samples, each 128 by 128. Because it's a binary classification problem, I wanted to build a simple NN with 3 dense layers. This is what I have:

model = Sequential()

model.add(Dense(12, input_dim = 8, activation='relu'))

model.add(Dense(8, activation='relu'))

model.add(Dense(1, activation='sigmoid'))

model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])

model.fit(x_train, y_train, epochs=150, batch_size=8)

But due to my input, I am getting this error:

ValueError: Input 0 of layer sequential_4 is incompatible with the layer: expected axis -1 of input shape to have value 8 but received input with shape (8, 128, 128)

How can I fix this problem? Is my NN too simplistic for this type of problem?

Answer:

There are multiple issues with your code. I have explained each one in its own section below. Please go through all of them and try out the code examples.

1. Passing the wrong input dimension (confusing the batch axis with the sample shape)

input_dim = 8 tells the first Dense layer to expect 8 features per sample, but each of your samples has shape (128, 128). The 8 in the error message is the batch size, not a property of the data. The input shape must describe a single sample, in this case (128, 128), never the number of samples or the batch size. Keras automatically adds a leading batch dimension, so the model processes tensors of shape (None, 128, 128). That leading None is the batch dimension you see in every output shape in model.summary() below.
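To make the batch dimension concrete, here is a minimal NumPy sketch (not Keras itself) of what happens when training data is cut into batches of 8. The array is a hypothetical zero-filled stand-in with 40 samples instead of the real 5000, since only the shapes matter here.

```python
import numpy as np

# Hypothetical stand-in for x_train: 40 samples of shape (128, 128).
x_train = np.zeros((40, 128, 128), dtype=np.uint8)

batch_size = 8
first_batch = x_train[:batch_size]

# The leading axis of a batch is the batch size, not a feature dimension.
print(first_batch.shape)   # (8, 128, 128)

# The per-sample shape is everything after the first axis.
sample_shape = x_train.shape[1:]
print(sample_shape)        # (128, 128)
```

The (8, 128, 128) printed here has the same form as the shape reported in the error message, which suggests the 8 there comes from batch_size=8 in model.fit rather than from the data.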

2. 2D inputs for a Dense layer

Each of your 5000 samples is a 2D matrix of shape (128, 128). A Dense layer only operates on the last axis of its input, so applied directly to such a sample it would produce one output per row instead of one prediction per sample. Flatten each sample into a vector of 128 * 128 = 16384 values first (or use a layer type better suited to 2D/3D inputs, as discussed later).
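The effect of flattening can be sketched with plain NumPy matrix products. This is an illustration only, assuming the documented Keras behaviour that Dense multiplies its kernel along the last axis of the input; the random arrays and weight names are hypothetical stand-ins, and biases and activations are left out.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical mini-batch: 8 samples of shape (128, 128).
batch = rng.random((8, 128, 128))

# Without flattening, a 12-unit kernel is applied to every row of every image.
w_unflattened = rng.random((128, 12))
out_unflattened = batch @ w_unflattened
print(out_unflattened.shape)        # (8, 128, 12)

# With flattening, each sample becomes one vector of 128 * 128 = 16384 values.
flat = batch.reshape(len(batch), -1)
w_flat = rng.random((16384, 12))
out_flat = flat @ w_flat
print(flat.shape, out_flat.shape)   # (8, 16384) (8, 12)
```

Only the flattened version ends up with one row of activations per sample, which is what the final single-unit sigmoid layer needs in order to emit one prediction per label.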

from tensorflow.keras import Sequential

from tensorflow.keras.layers import Dense, Flatten

model = Sequential()

# Flatten each (128, 128) sample into a vector of 16384 values

model.add(Flatten(input_shape=(128,128)))

model.add(Dense(12, activation='relu'))

model.add(Dense(8, activation='relu'))

model.add(Dense(1, activation='sigmoid'))

model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])

model.summary()

Model: "sequential_6"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

flatten_3 (Flatten) (None, 16384) 0

_________________________________________________________________

dense_5 (Dense) (None, 12) 196620

_________________________________________________________________

dense_6 (Dense) (None, 8) 104

_________________________________________________________________

dense_7 (Dense) (None, 1) 9

=================================================================

Total params: 196,733

Trainable params: 196,733

Non-trainable params: 0

_________________________________________________________________

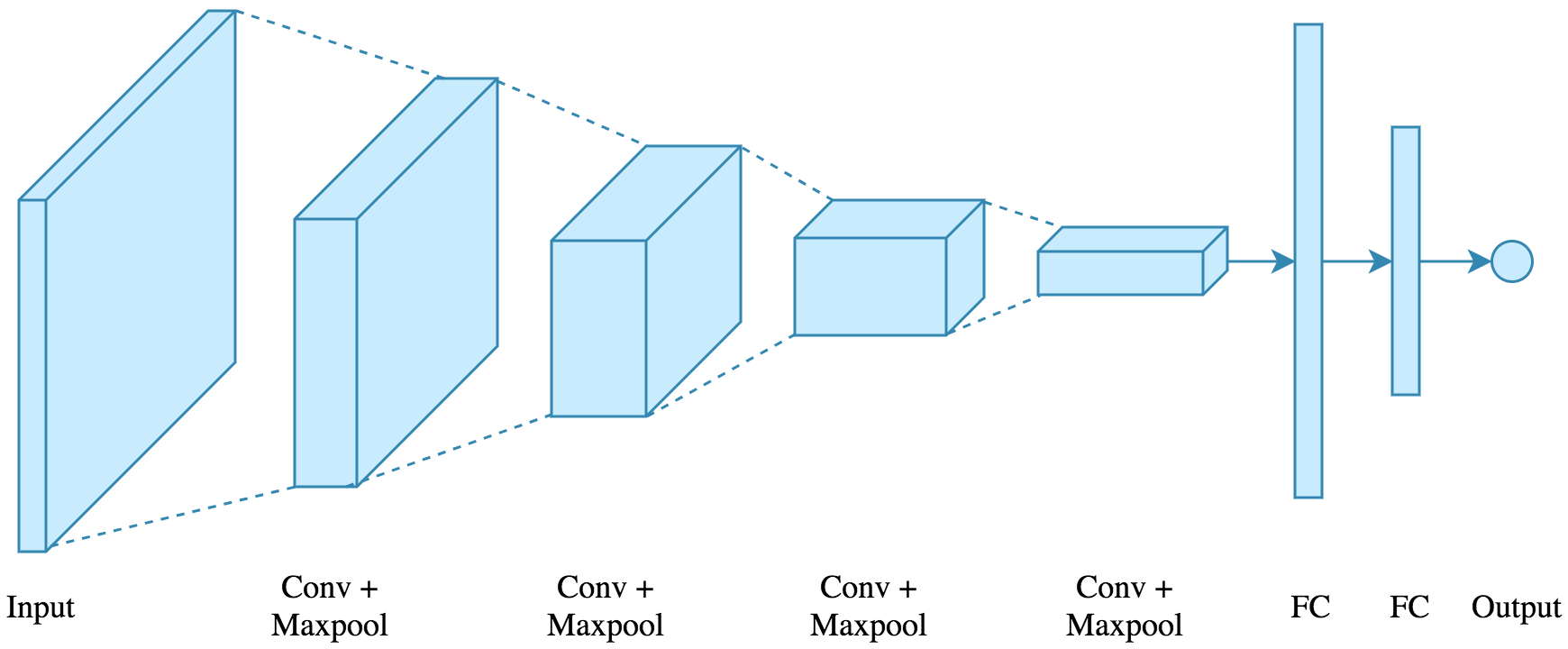
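The parameter counts in the summary can be checked by hand: a fully connected layer has one weight per input-unit pair plus one bias per unit. A short sketch of that arithmetic follows; the helper name dense_params is made up for this illustration.

```python
def dense_params(n_inputs, n_units):
    """Weights plus one bias per unit for a fully connected layer."""
    return n_inputs * n_units + n_units

flattened = 128 * 128                 # 16384 values per sample after Flatten
layer_params = [
    dense_params(flattened, 12),      # first Dense layer
    dense_params(12, 8),              # second Dense layer
    dense_params(8, 1),               # output layer
]
total_params = sum(layer_params)
print(layer_params, total_params)     # [196620, 104, 9] 196733
```

Almost all of the parameters sit in the first layer, because every one of the 16384 pixels is connected to each of its 12 units.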

3. Using a different architecture for your problem.

"Is my NN too simplistic for this type of problem?"

It's less about the complexity of the architecture and more about using layer types suited to the data. Here each sample is a single-channel image of shape (128,128), which is a 2D input. Color images usually have R, G, B channels, which gives inputs of shape (128,128,3).

The general practice is to use convolutional (CNN) layers for image data.

An example is shown below:

from tensorflow.keras import Sequential

from tensorflow.keras.layers import Dense, Flatten, Conv2D, MaxPooling2D, Reshape

model = Sequential()

# Add a trailing channel axis, since Conv2D expects (height, width, channels)

model.add(Reshape((128,128,1), input_shape=(128,128)))

model.add(Conv2D(5, 5, activation='relu'))

model.add(MaxPooling2D((2,2)))

model.add(Conv2D(10, 5, activation='relu'))

model.add(MaxPooling2D((2,2)))

model.add(Conv2D(20, 5, activation='relu'))

model.add(MaxPooling2D((2,2)))

model.add(Conv2D(30, 5, activation='relu'))

model.add(MaxPooling2D((2,2)))

model.add(Flatten())

model.add(Dense(12, activation='relu'))

model.add(Dense(8, activation='relu'))

model.add(Dense(1, activation='sigmoid'))

model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])

model.summary()

Model: "sequential_13"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

reshape_5 (Reshape) (None, 128, 128, 1) 0

_________________________________________________________________

conv2d_16 (Conv2D) (None, 124, 124, 5) 130

_________________________________________________________________

max_pooling2d_16 (MaxPooling (None, 62, 62, 5) 0

_________________________________________________________________

conv2d_17 (Conv2D) (None, 58, 58, 10) 1260

_________________________________________________________________

max_pooling2d_17 (MaxPooling (None, 29, 29, 10) 0

_________________________________________________________________

conv2d_18 (Conv2D) (None, 25, 25, 20) 5020

_________________________________________________________________

max_pooling2d_18 (MaxPooling (None, 12, 12, 20) 0

_________________________________________________________________

conv2d_19 (Conv2D) (None, 8, 8, 30) 15030

_________________________________________________________________

max_pooling2d_19 (MaxPooling (None, 4, 4, 30) 0

_________________________________________________________________

flatten_9 (Flatten) (None, 480) 0

_________________________________________________________________

dense_23 (Dense) (None, 12) 5772

_________________________________________________________________

dense_24 (Dense) (None, 8) 104

_________________________________________________________________

dense_25 (Dense) (None, 1) 9

=================================================================

Total params: 27,325

Trainable params: 27,325

Non-trainable params: 0

_________________________________________________________________
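The output shapes and parameter counts in this summary follow from simple arithmetic. The sketch below reproduces them in plain Python, assuming the Keras defaults used in the model above (stride 1 and 'valid' padding for Conv2D, pooling stride equal to the pool size for MaxPooling2D). The helper names are made up for this illustration.

```python
def conv_out(size, kernel):
    """Output length of a stride-1 convolution with 'valid' (no) padding."""
    return size - kernel + 1

def pool_out(size, pool):
    """Output length of max pooling with stride equal to the pool size."""
    return size // pool

def conv_params(kernel, in_channels, filters):
    """Kernel weights plus one bias per filter."""
    return kernel * kernel * in_channels * filters + filters

size, channels = 128, 1
shapes, params = [], []
for filters in (5, 10, 20, 30):
    params.append(conv_params(5, channels, filters))
    size = conv_out(size, 5)
    shapes.append((size, size, filters))   # after Conv2D
    size = pool_out(size, 2)
    shapes.append((size, size, filters))   # after MaxPooling2D
    channels = filters

flattened = size * size * channels
print(shapes)
print(params, flattened)   # [130, 1260, 5020, 15030] 480
```

Each convolution trims 4 pixels per side length and each pooling step halves it (rounding down), so the 128 by 128 image shrinks to 4 by 4 with 30 channels before Flatten. That is why the first Dense layer here needs only 5,772 parameters instead of 196,620.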

To understand what Conv2D layers and MaxPooling layers do, do check out my