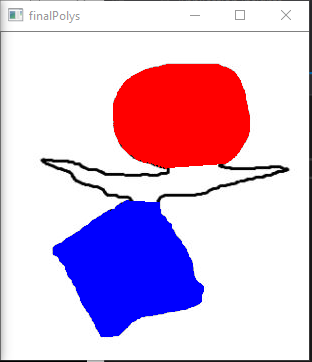

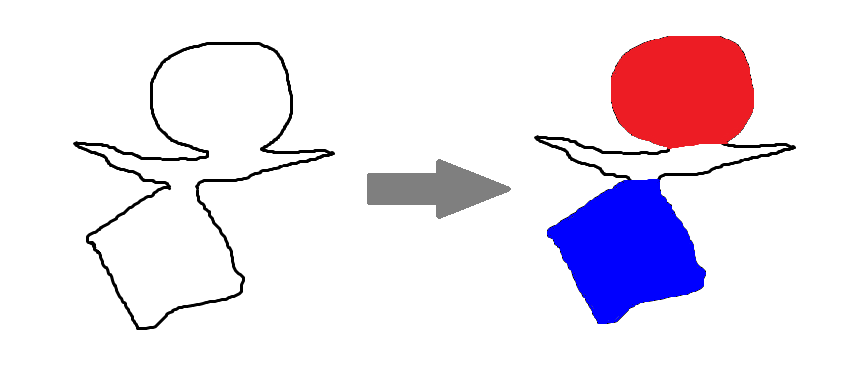

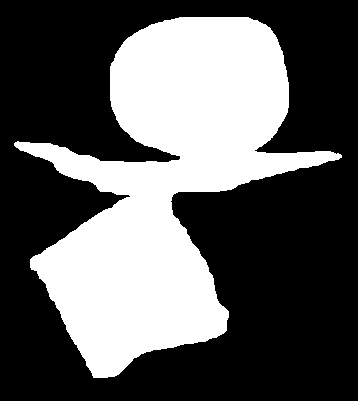

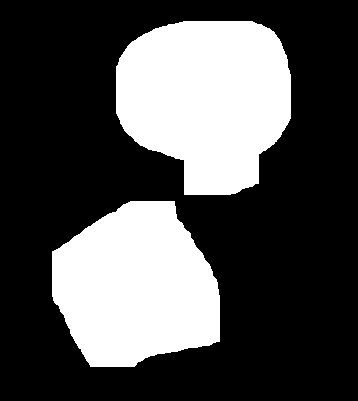

How can we transform the left diagram to the right diagram as per the reference image?

CodePudding user response:

Here's an idea:

- Load image, convert to grayscale, and Otsu's threshold for a binary image

- Find the contour and fill it in with white

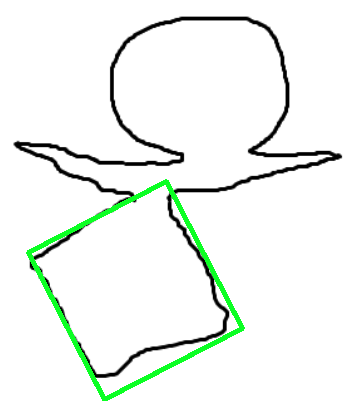

- Now that we have a binary image, we can perform morphological operations. Depending on the object that you're trying to extract, we can create different structing kernels. For the rectangle we can use

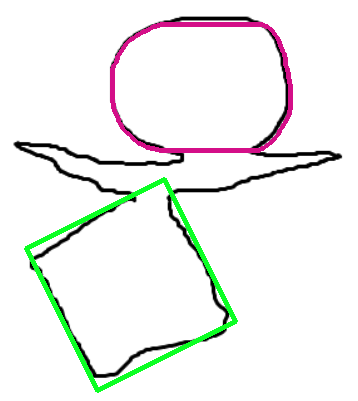

cv2.MORPH_RECT, for the ellipse we can remove the horizontal section with a larger kernel size and usecv2.MORPH_ELLIPSE. - We then filter for the remaining contours and find a rotated bounding box for the rectangle and ellipse

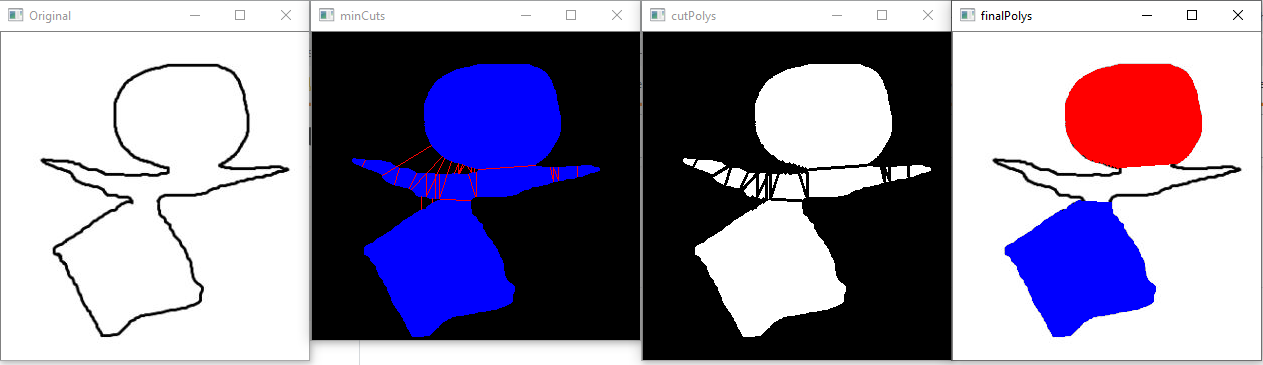

Here's a visualization of the process

For the ellipse

Since you didn't specify a language, here's an implementation with Python

import cv2

import numpy as np

# Load image, convert to grayscale, Otsu's threshold for binary image

image = cv2.imread('1.png')

gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

thresh = cv2.threshold(gray, 0, 255, cv2.THRESH_BINARY_INV cv2.THRESH_OTSU)[1]

# Find contours and fill in contour with white

cnts = cv2.findContours(thresh, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)

cnts = cnts[0] if len(cnts) == 2 else cnts[1]

for c in cnts:

cv2.drawContours(thresh, [c], 0, 255, -1)

# Rectangle ----------------------------------------

# Morph open to separate rectangular contour

rectangular_kernel = cv2.getStructuringElement(cv2.MORPH_ELLIPSE, (20, 20))

rect = cv2.morphologyEx(thresh, cv2.MORPH_OPEN, rectangular_kernel, iterations=4)

# Find contours and draw rotated rectangle onto image

cnts = cv2.findContours(rect, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)

cnts = cnts[0] if len(cnts) == 2 else cnts[1]

isolated_rect = cv2.minAreaRect(cnts[0])

box = np.int0(cv2.boxPoints(isolated_rect))

cv2.drawContours(image, [box], 0, (36,255,12), 3)

# Rectangle ----------------------------------------

# Ellipse ----------------------------------------

# Morph open to separate elliptical contour

horizontal_kernel = cv2.getStructuringElement(cv2.MORPH_RECT, (50,10))

ellipse = cv2.morphologyEx(thresh, cv2.MORPH_OPEN, horizontal_kernel, iterations=2)

# Find contours and filter for ellipse

cnts = cv2.findContours(ellipse, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)

cnts = cnts[0] if len(cnts) == 2 else cnts[1]

# Filter using contour area, could also use Aspect Ratio or contour approximation

for c in cnts:

area = cv2.contourArea(c)

if area > 20000:

cv2.drawContours(image, [c], 0, (136,15,212), 3)

# Ellipse ----------------------------------------

# Display

cv2.imshow('image', image)

cv2.imshow('thresh', thresh)

cv2.imshow('rect', rect)

cv2.imshow('ellipse', ellipse)

cv2.waitKey()

CodePudding user response:

Using a gradient decent of point pairings (minimizing distance), you can get a numerical min cut list. Applying those min cuts to the original object allows it to be segmented into separate objects. The only parameter that you need to set is minObjectRadius which specifies the minimum radius of a shape that you care about (it is used to determine limits of min cut and for final shape filtration).

Code:

#include <stdio.h>

#include <opencv2/opencv.hpp>

#include <Windows.h>

#include <string>

using namespace std;

using namespace cv;

vector<tuple<int, int>> generatePointPairs(int totalIndicies, int stride = 1)

{

vector<tuple<int, int>> pointPairs;

for (int i = 0; i < totalIndicies; i =stride)

{

for (int ii = 0; ii < totalIndicies; ii =stride)

{

tuple<int, int> pair(i, ii);

pointPairs.push_back(pair);

}

}

return pointPairs;

}

double distSq(Point p1, Point p2)

{

return pow(p1.x - p2.x, 2) pow(p1.y - p2.y,2);

}

tuple<int, int> gradDecentPair(vector<Point> contour, tuple<int, int> pair)

{

int index0 = get<0>(pair);

int index1 = get<1>(pair);

bool flip = false;

double lastDist = distSq(contour[get<0>(pair)], contour[get<1>(pair)]);

int flipCounter = 0;

while (true)

{

bool improvementFound = false;

int staticIndex = index1;

if (flip) { staticIndex = index0; }

double bestDist = -1;

int bestIndex = -1;

for (int i = -5; i <= 5; i =1)

{

if (i == 0) { continue; }

int testIndex = index0 i;

if (flip) { testIndex = index1 i; }

if (testIndex < 0)

{ testIndex = contour.size(); }

else if (testIndex >= contour.size())

{ testIndex -= contour.size(); }

double testDist = distSq(contour[staticIndex], contour[testIndex]);

if (bestDist == -1 || testDist < bestDist)

{

bestIndex = testIndex;

bestDist = testDist;

}

}

if (bestDist < lastDist)

{

if (flip) { index1 = bestIndex; }

else { index0 = bestIndex; }

lastDist = bestDist;

improvementFound = true;

}

if (index0 == index1) { break; }

if (improvementFound) { continue; }

else

{

flipCounter ;

flip = !flip;

if (flipCounter > 10) { break; } //pretty sure this can be better, but lazy atm

}

}

return tuple<int, int>(index0, index1);

}

int main(int argc, char** argv)

{

int minObjectRadius = 75;

std::string fileName = "C:/Local Software/voyDICOM/resources/images/ShapeBlob.JPG";

Mat original = imread(fileName, cv::IMREAD_GRAYSCALE);

imshow("Original", original);

Mat bwImg;

cv::threshold(original, bwImg, 0, 255, cv::THRESH_OTSU);

bitwise_not(bwImg, bwImg);

vector<vector<Point> > contours;

findContours(bwImg, contours, RETR_EXTERNAL, CHAIN_APPROX_NONE);

Mat minCuts(original.cols, original.rows, CV_8UC3);

fillPoly(minCuts, contours, Scalar(255,0,0));

vector<Point> cuts;

for (int i = 0; i < contours.size(); i )

{

std::cout << contours[i].size() << std::endl;

vector<tuple<int, int>> pointPairs = generatePointPairs(contours[i].size(), 25);

for (int ii = 0; ii < pointPairs.size(); ii )

{

tuple<int, int> minCut = gradDecentPair(contours[i], pointPairs[ii]);

Point p1 = contours[i][get<0>(minCut)];

Point p2 = contours[i][get<1>(minCut)];

double tempDist = distSq(p1, p2);

if (tempDist > 0 && tempDist <= pow(minObjectRadius, 2))

{

line(minCuts, contours[i][get<0>(minCut)], contours[i][get<1>(minCut)], Scalar(0, 0, 255));

cuts.push_back(p1);

cuts.push_back(p2);

}

}

std::cout << i << " , " << contours.size() << std::endl;

}

imshow("minCuts", minCuts);

fillPoly(bwImg, contours, 255);

for (int i = 0; i < cuts.size(); i = 2)

{

line(bwImg, cuts[i],cuts[i 1], 0,2);

}

imshow("cutPolys", bwImg);

Mat finalShapes = imread(fileName, cv::IMREAD_COLOR);

int colorIndex = 0;

vector<Scalar> colors = { Scalar(255,0,0),Scalar(0,0,255),Scalar(0,0,0) };

vector<vector<Point> > contoursFinal;

findContours(bwImg, contoursFinal, RETR_EXTERNAL, CHAIN_APPROX_SIMPLE);

for (int i = 0; i < contoursFinal.size(); i )

{

double tempArea = contourArea(contoursFinal[i]);

if (tempArea < pow(minObjectRadius, 2)) { continue; }

vector<vector<Point>> singleContour;

singleContour.push_back(contoursFinal[i]);

fillPoly(finalShapes, singleContour, colors[colorIndex]);

colorIndex ;

if (colorIndex >= colors.size()) { colorIndex = 0; }

}

imshow("finalPolys", finalShapes);

waitKey(0);

}