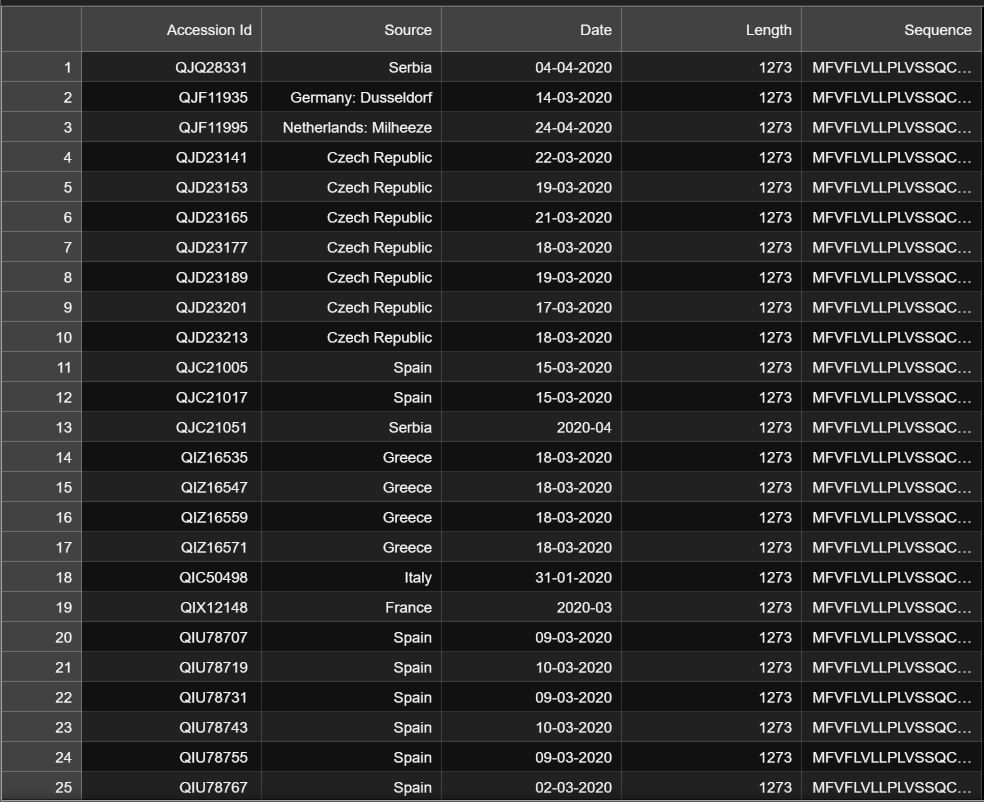

I have a CSV file that looks like this

I want to choose the last column and make character level one-hot-encode matrices of every sequence, I use this code and it doesn't work

data = pd.read_csv('database.csv', usecols=[4])

alphabet = ['A', 'C', 'D', 'E', 'F', 'G','H', 'I', 'K', 'L', 'M', 'N', 'P', 'Q', 'R', 'S', 'T', 'V', 'W', 'Y']

charto = dict((c,i) for i,c in enumerate(alphabet))

iint = [charto[char] for char in data]

onehot2 = []

for s in iint:

lett = [0 for _ in range(len(alphabet))]

lett[s] = 1

onehot2.append(lett)

What do you suggest doing for this task? (by the way, I want to use this dataset for a PyTorch model)

CodePudding user response:

I think it would be best to keep pd.DataFrame as is and do the transformation "on the fly" within PyTorch Dataset.

First, dummy data similar to yours:

df = pd.DataFrame(

{

"ID": [1, 2, 3],

"Source": ["Serbia", "Poland", "Germany"],

"Sequence": ["ABCDE", "EBCDA", "AAD"],

}

)

After that, we can create torch.utils.data.Dataset class (example alphabet is shown, you might change it to anything you want):

class Dataset(torch.utils.data.Dataset):

def __init__(self, df: pd.DataFrame):

self.df = df

# Change alphabet to anything you need

alphabet = ["A", "B", "C", "D", "E", "F"]

self.mapping = dict((c, i) for i, c in enumerate(alphabet))

def __getitem__(self, index):

sample = df.iloc[index]

sequence = sample["Sequence"]

target = torch.nn.functional.one_hot(

torch.tensor([self.mapping[letter] for letter in sequence]),

num_classes=len(self.mapping),

)

return sample.drop("Sequence"), target

def __len__(self):

return len(self.df)

This code simply transforms indices of letters to their one-hot encoding via torch.nn.functional.one_hot function.

Usage is pretty simple:

ds = Dataset(df)

ds[0]

which returns (you might want to change how your sample is created though as I'm not sure about the format and only focused on hot-encoded targets) the following targets (ID and Source omitted):

tensor([ [1., 0., 0., 0., 0., 0.],

[0., 1., 0., 0., 0., 0.],

[0., 0., 1., 0., 0., 0.],

[0., 0., 0., 1., 0., 0.],

[0., 0., 0., 0., 1., 0.]]))