I'm trying to run an automated process in a Jupyter Notebook (from deepnote.com) every single day, but after running the very first iteration of a while loop and starting the next iteration (at the for loop inside the while loop), the virtual machine crashes throwing the message below:

KernelInterrupted: Execution interrupted by the Jupyter kernel

Here's the code:

.

.

.

while y < 5:

print(f'\u001b[45m Try No. {y} out of 5 \033[0m')

#make the driver wait up to 10 seconds before doing anything.

driver.implicitly_wait(10)

#values for the example.

#Declaring several variables for looping.

#Let's start at the newest page.

link = 'https...'

driver.get(link)

#Here we use an Xpath element to get the initial page

initial_page = int(driver.find_element_by_xpath('Xpath').text)

print(f'The initial page is the No. {initial_page}')

final_page = initial_page 120

pages = np.arange(initial_page, final_page 1, 1)

minimun_value = 0.95

maximum_value = 1.2

#the variable to_place is set as a string value that must exist in the rows in order to be scraped.

#if it doesn't exist it is ignored.

to_place = 'A particular place'

#the same comment stated above is applied to the variable POINTS.

POINTS = 'POINTS'

#let's set a final dataframe which will contain all the scraped data from the arange that

#matches with the parameters set (minimun_value, maximum value, to_place, POINTS).

df_final = pd.DataFrame()

dataframe_final = pd.DataFrame()

#set another final dataframe for the 2ND PART OF THE PROCESS.

initial_df = pd.DataFrame()

#set a for loop for each page from the arange.

for page in pages:

#INITIAL SEARCH.

#look for general data of the link.

#amount of results and pages for the execution of the for loop, "page" variable is used within the {}.

url = 'https...page={}&p=1'.format(page)

print(f'\u001b[42m Current page: {page} \033[0m ' '\u001b[42m Final page: ' str(final_page) '\033[0m ' '\u001b[42m Page left: ' str(final_page-page) '\033[0m ' '\u001b[45m Try No. ' str(y) ' out of ' str(5) '\033[0m' '\n')

driver.get(url)

#Here we order the scrapper to try finding the total number of subpages a particular page has if such page IS NOT empty.

#if so, the scrapper will proceed to execute the rest of the procedure.

try:

subpages = driver.find_element_by_xpath('Xpath').text

print(f'Reading the information about the number of subpages of this page ... {subpages}')

subpages = int(re.search(r'\d{0,3}$', subpages).group())

print(f'This page has {subpages} subpages in total')

df = pd.DataFrame()

df2 = pd.DataFrame()

print(df)

print(df2)

#FOR LOOP.

#search at each subpage all the rows that contain the previous parameters set.

#minimun_value, maximum value, to_place, POINTS.

#set a sub-loop for each row from the table of each subpage of each page

for subpage in range(1,subpages 1):

url = 'https...page={}&p={}'.format(page,subpage)

driver.get(url)

identities_found = int(driver.find_element_by_xpath('Xpath').text.replace('A total of ','').replace(' identities found','').replace(',',''))

identities_found_last = identities_found%50

print(f'Página: {page} de {pages}') #AT THIS LINE CRASHED THE LAST TIME

.

.

.

#If the particular page is empty

except:

print(f'This page No. {page} IT'S EMPTY ¯\_₍⸍⸌̣ʷ̣̫⸍̣⸌₎_/¯, ¡NEXT! ')

.

.

.

y = 1

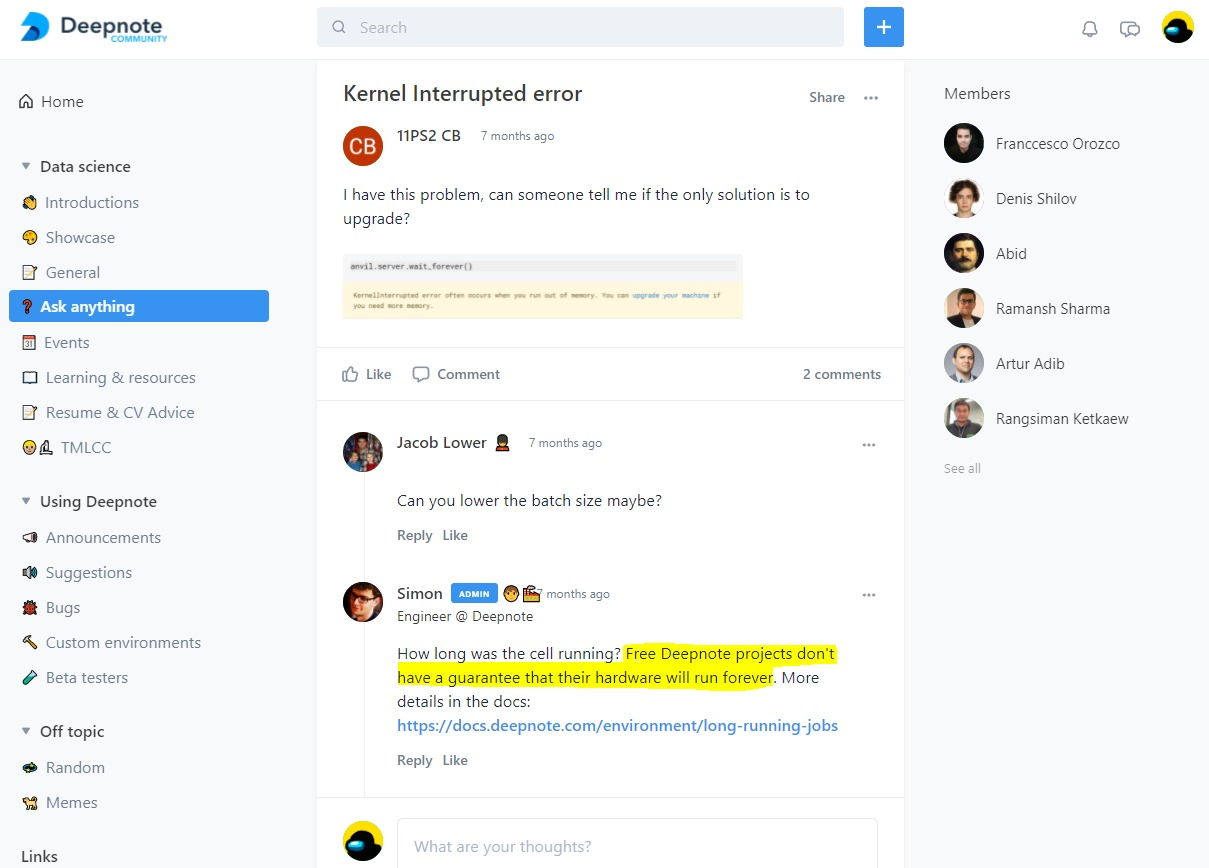

Initially I thought the KernelInterrupted Error was thrown due to the lack of virtual memory my virtual machine had at the moment of running the second iteration...

But after several tests I figured out that my program isn't RAM-consuming at all because the virtual RAM wasn't changing that much during all the process 'til the Kernel crashed. I can guarantee that.

So now I think that maybe the virtual CPU of my virtual machine is the one that's causing the crashing of the Kernel, but if that was the case I just don't understand why, this is the first time I have to deal with such situation, this program runs perfectly in my PC.

Is any data scientist or machine learning engineer here that could assist me? Thanks in advance.

CodePudding user response:

I have found the answer in the Deepnote community forum itself, simply the "Free Tier" machines of this platform do not guarantee a permanent operation (24h / 7) regardless of the program executed in their VM.

That's it. Problem is solved.