I have a S3 URL to a public file similar to the following URL example: s3://test-public/new/solution/file.csv (this is not the actual link . just a close example to the one i'm using)

I am able to read the file using s3fs module in a python script, without placing any AWS key ID, nor AWS Secret Key, as follows:

with fs.open('test-public/new/solution/file.csv'):

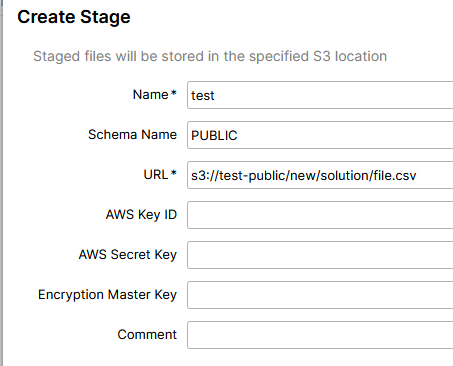

however, when i try to read the same file from S3 to Snowflake stage or from Table -> Load table, Snowlake reports that AWS key ID, and AWS Secret Key are missing, though these fields are optional

Is there a way i can read a public file from S3 into a snowflake table without any AWS credentials (as with s3fs) ?

CodePudding user response:

This approach works to import data from S3 into a snowgflake Table:

COPY INTO SNOW_SCHEMA.table_name FROM 's3://test-public/new/solution/file.csv'

CodePudding user response:

STORAGE_INTEGRATION or CREDENTIALS are required only if the storage location is private not required for public buckets.

Make sure you have defined the bucket policy appropriately.

{

"Version": "2012-10-17",

"Id": "Policy16361",

"Statement": [

{

"Sid": "Pub Bucket Policy",

"Effect": "Allow",

"Principal": "*",

"Action": [

"s3:ListBucket",

"s3:GetObject"

],

"Resource": [

"arn:aws:s3:::pubucket",

"arn:aws:s3:::pubucket/*"

]

}

]

}

Once you have the above policy defined for a s3 bucket you can either create the stage or load directly into a table.

--create stage, list files, view file content

create or replace stage my_s3_stage url='s3://pubucket/test.csv';

ls @my_s3_stage;

select $1 from @my_s3_stage;

--or even load directly from file

create or replace table mytable (cola varchar);

copy into mytable from 's3://pubucket/test.csv';

select * from mytable;