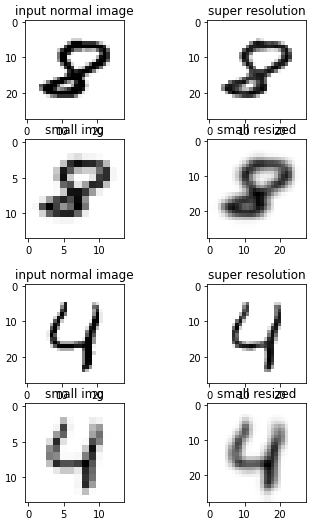

I am new to deep learning, and I built a model that is intended to upscale a 14x14 image to 28x28. As a first attempt at this problem, I trained the network on the MNIST dataset.

To design the model architecture, I followed this paper:

Here is the code to plot the figures:

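```python
import matplotlib.pyplot as plt
import numpy as np
from skimage.transform import resize

# Assumes `model`, `test_normal_array` and `y_test` are already defined
# (see the dataset code below).
def plot_images(num_img):
    fig, axs = plt.subplots(2, 2)

    # Original 28x28 test image (uint8, 0-255)
    my_normal_image = test_normal_array[num_img, :, :, 0]
    axs[0, 0].set(title='input normal image')
    axs[0, 0].imshow(my_normal_image, cmap=plt.cm.binary)

    # Downscaled 14x14 version (resize also rescales values to [0, 1])
    my_resized_image = resize(my_normal_image, output_shape=(14, 14), anti_aliasing=True)
    axs[1, 0].set(title='small img')
    axs[1, 0].imshow(my_resized_image, cmap=plt.cm.binary)

    # Model's 28x28 reconstruction from the 14x14 input (add batch and channel axes)
    my_super_res_image = model.predict(my_resized_image[np.newaxis, :, :, np.newaxis])[0, :, :, 0]
    axs[0, 1].set(title='super resolution')
    axs[0, 1].imshow(my_super_res_image, cmap=plt.cm.binary)

    # Baseline: the 14x14 image upscaled back to 28x28 by plain interpolation
    my_rr_image = resize(my_resized_image, output_shape=(28, 28), anti_aliasing=True)
    axs[1, 1].set(title='small resized')
    axs[1, 1].imshow(my_rr_image, cmap=plt.cm.binary)

    plt.show()

# Plot the first test image of each chosen digit class
index = 8
plot_images(np.argwhere(y_test == index)[0][0])

index = 4
plot_images(np.argwhere(y_test == index)[0][0])
```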
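To put a number on what the four panels show, one option is to compare each reconstruction with the original 28x28 image using PSNR (peak signal-to-noise ratio). This is a minimal sketch, assuming both images are float arrays on the same [0, 1] scale; `psnr` is a hypothetical helper written for illustration, and the toy images are synthetic, not MNIST.

```python
import numpy as np

def psnr(reference, estimate, data_range=1.0):
    """Peak signal-to-noise ratio in dB between two same-shaped images.

    Assumes both images use the same intensity scale (here [0, 1]).
    Higher is better; identical images give +inf.
    """
    reference = np.asarray(reference, dtype=np.float64)
    estimate = np.asarray(estimate, dtype=np.float64)
    mse = np.mean((reference - estimate) ** 2)
    if mse == 0:
        return float("inf")
    return float(10.0 * np.log10(data_range ** 2 / mse))

# Synthetic 28x28 "digit": a vertical stroke on an empty background.
ground_truth = np.zeros((28, 28))
ground_truth[6:22, 12:16] = 1.0

close_guess = ground_truth.copy()
close_guess[6:22, 11] = 0.5        # slight blur along one edge
blank_guess = np.zeros((28, 28))   # predicts background only

print(psnr(ground_truth, close_guess))
print(psnr(ground_truth, blank_guess))
```

Inside `plot_images` you could, for example, compare `psnr(my_normal_image / 255, my_super_res_image)` with `psnr(my_normal_image / 255, my_rr_image)` to check whether the network actually beats plain interpolation (assuming the model outputs values in [0, 1], like its training targets).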

In addition, here is how I built the dataset:

```python
import numpy as np
import tensorflow as tf
import tqdm
from skimage.transform import resize

(x_train, y_train), (x_test, y_test) = tf.keras.datasets.mnist.load_data()

# Add a channel axis: (N, 28, 28) -> (N, 28, 28, 1)
train_normal_array = np.expand_dims(x_train, axis=3)
test_normal_array = np.expand_dims(x_test, axis=3)

# Downscale every image to 14x14; resize() returns floats in [0, 1]
train_small_array = np.zeros((train_normal_array.shape[0], 14, 14, 1))
for i in tqdm.tqdm(range(train_normal_array.shape[0])):
    train_small_array[i, :, :] = resize(train_normal_array[i], (14, 14), anti_aliasing=True)

test_small_array = np.zeros((test_normal_array.shape[0], 14, 14, 1))
for i in tqdm.tqdm(range(test_normal_array.shape[0])):
    test_small_array[i, :, :] = resize(test_normal_array[i], (14, 14), anti_aliasing=True)

# Pair the 14x14 inputs with the 28x28 targets (targets scaled to [0, 1])
training_data = []
training_data.append([train_small_array.astype('float32'), train_normal_array.astype('float32') / 255])

testing_data = []
testing_data.append([test_small_array.astype('float32'), test_normal_array.astype('float32') / 255])
```
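The per-image `resize` loop over 60,000 training images can be slow. A vectorized alternative is 2x2 block averaging with a single NumPy reshape. Note that this is a different downsampling filter from skimage's Gaussian anti-aliasing plus interpolation, so the 14x14 images will not be identical to the ones above; `downsample_2x_mean` is a hypothetical helper, sketched under the assumption that height and width are even.

```python
import numpy as np

def downsample_2x_mean(images):
    """Halve height and width of an (N, H, W, C) batch by averaging 2x2 blocks.

    Assumes H and W are even. Integer input (e.g. uint8 MNIST) is first
    scaled to [0, 1] so the result matches the /255 targets.
    """
    images = np.asarray(images)
    if np.issubdtype(images.dtype, np.integer):
        images = images.astype(np.float32) / 255.0
    n, h, w, c = images.shape
    # Split each spatial axis into (blocks, 2) and average within each block.
    blocks = images.reshape(n, h // 2, 2, w // 2, 2, c)
    return blocks.mean(axis=(2, 4))

# Synthetic uint8 batch (not real MNIST) with known pixel values.
fake_batch = np.zeros((3, 28, 28, 1), dtype=np.uint8)
fake_batch[:, 0:2, 0:2, 0] = 255   # one fully lit 2x2 block
fake_batch[:, 2, 2, 0] = 255       # a single lit pixel in the next block
small = downsample_2x_mean(fake_batch)
print(small.shape)
```

Usage would be along the lines of `train_small_array = downsample_2x_mean(train_normal_array)`. If you switch methods, retrain the model, since it has learned the statistics of skimage's downscaling.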

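Note that I do not divide `train_small_array` and `test_small_array` by 255, because `resize` already does this: with its default `preserve_range=False`, it converts the uint8 input to floats in [0, 1].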
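Because the inputs and the targets are scaled by different routes (`resize` for the inputs, `/ 255` for the targets), a quick range check can catch a mismatch early. A minimal sketch; `describe_range` is a hypothetical helper, and the arrays below are tiny synthetic stand-ins rather than MNIST.

```python
import numpy as np

def describe_range(name, array):
    """Print min/max of an array and report whether all values lie in [0, 1]."""
    lo, hi = float(np.min(array)), float(np.max(array))
    in_unit_range = lo >= 0.0 and hi <= 1.0
    status = "OK" if in_unit_range else "not in [0, 1]"
    print(f"{name}: min={lo:.3f}, max={hi:.3f} ({status})")
    return in_unit_range

# A uint8 image before and after manual scaling.
raw = np.array([[0, 128], [255, 64]], dtype=np.uint8)
scaled = raw.astype("float32") / 255

describe_range("raw uint8", raw)
describe_range("scaled", scaled)
```

In the script above you would call it on `training_data[0][0]` (the 14x14 inputs) and `training_data[0][1]` (the 28x28 targets); both should report OK.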

Answer:

I think your data is imbalanced: most pixels in each image are blank background, so a model that only predicts white space will still get a good score. Accuracy is therefore not a suitable metric for judging the model's performance in this case.
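To illustrate the imbalance, here is a small NumPy sketch on a synthetic 28x28 image (not real MNIST data). It binarizes pixels at an assumed threshold of 0.5 and computes pixel-wise accuracy for a prediction that contains no digit at all; `pixel_accuracy` is a hypothetical helper.

```python
import numpy as np

# Synthetic 28x28 "digit": a vertical stroke on an empty background.
target = np.zeros((28, 28), dtype=np.float32)
target[6:22, 12:16] = 1.0

# A degenerate prediction that outputs only background.
blank_prediction = np.zeros_like(target)

def pixel_accuracy(y_true, y_pred, threshold=0.5):
    """Fraction of pixels whose binarized values agree (threshold is an assumption)."""
    return float(np.mean((y_true > threshold) == (y_pred > threshold)))

foreground_fraction = float(np.mean(target > 0.5))
print(f"foreground pixels: {foreground_fraction:.1%}")
print(f"accuracy of all-background prediction: {pixel_accuracy(target, blank_prediction):.1%}")
```

Even though the blank prediction misses the whole stroke, it agrees with the target on 720 of 784 pixels (about 92%). For super-resolution, a reconstruction metric such as mean squared error, PSNR, or SSIM is usually a better fit than accuracy.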