I have created a local Spark environment in Docker. I intend to use it as part of a CI/CD pipeline for unit testing code executed in the Spark environment. I have two scripts that I want to use: one creates a set of persistent Spark databases and tables, and the other reads those tables. Even though the tables should be persistent, they only persist within that specific Spark session. If I create a new Spark session, I cannot access the tables, even though they are visible in the file system. Code examples are below:

Create db and table

Create_script.py

from pyspark.sql import SparkSession

def main():

    spark = SparkSession.builder.appName('Example').getOrCreate()

    columns = ["language", "users_count"]

    data = [("Java", "20000"), ("Python", "100000"), ("Scala", "3000")]

    rdd = spark.sparkContext.parallelize(data)

    df = rdd.toDF(columns)

    spark.sql("create database if not exists schema1")

    df.write.mode("ignore").saveAsTable('schema1.table1')

Load Data

load_data.py

from pyspark.sql import SparkSession

spark = SparkSession.builder.appName('example').getOrCreate()

df = spark.sql("select * from schema1.table1")

I know there is a problem because when I run print(spark.catalog.listDatabases()), it only finds the default database. But if I import Create_script.py, it also finds the schema1 database.

How do I make tables persist across all Spark sessions?

CodePudding user response:

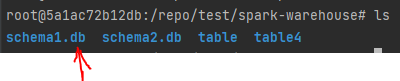

The files in /repo/test/spark-warehouse are only the table data, without the metadata for the database, tables, or columns.

If you don't enable Hive, Spark uses an InMemoryCatalog, which is ephemeral, intended only for testing, and only available within the same Spark context. The InMemoryCatalog provides no way to load databases or tables from the file system.

So there are two options:

Columnar Format

Use df.write.orc() (or df.write.parquet()) in your Create_script.py script to write the data in ORC or Parquet format. Both formats store the column information alongside the data. In the loading script, read it back with df = spark.read.orc(), then call createOrReplaceTempView if you need to query it with SQL.

Use Embedded Hive

You don't need to install Hive. Spark can work with an embedded Hive in just two steps.

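- Add the spark-hive dependency. (I'm using Java, which manages dependencies through pom.xml; I don't know how to do this in PySpark.)

- Enable Hive support when building the session: SparkSession.builder().enableHiveSupport() (in PySpark, builder is an attribute, so it is SparkSession.builder.enableHiveSupport()).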

The data will then be in /repo/test/spark-warehouse/schema1.db, and the metadata in /repo/test/metastore_db, which contains the files of a Derby database. You can then read and write the tables across all Spark sessions.