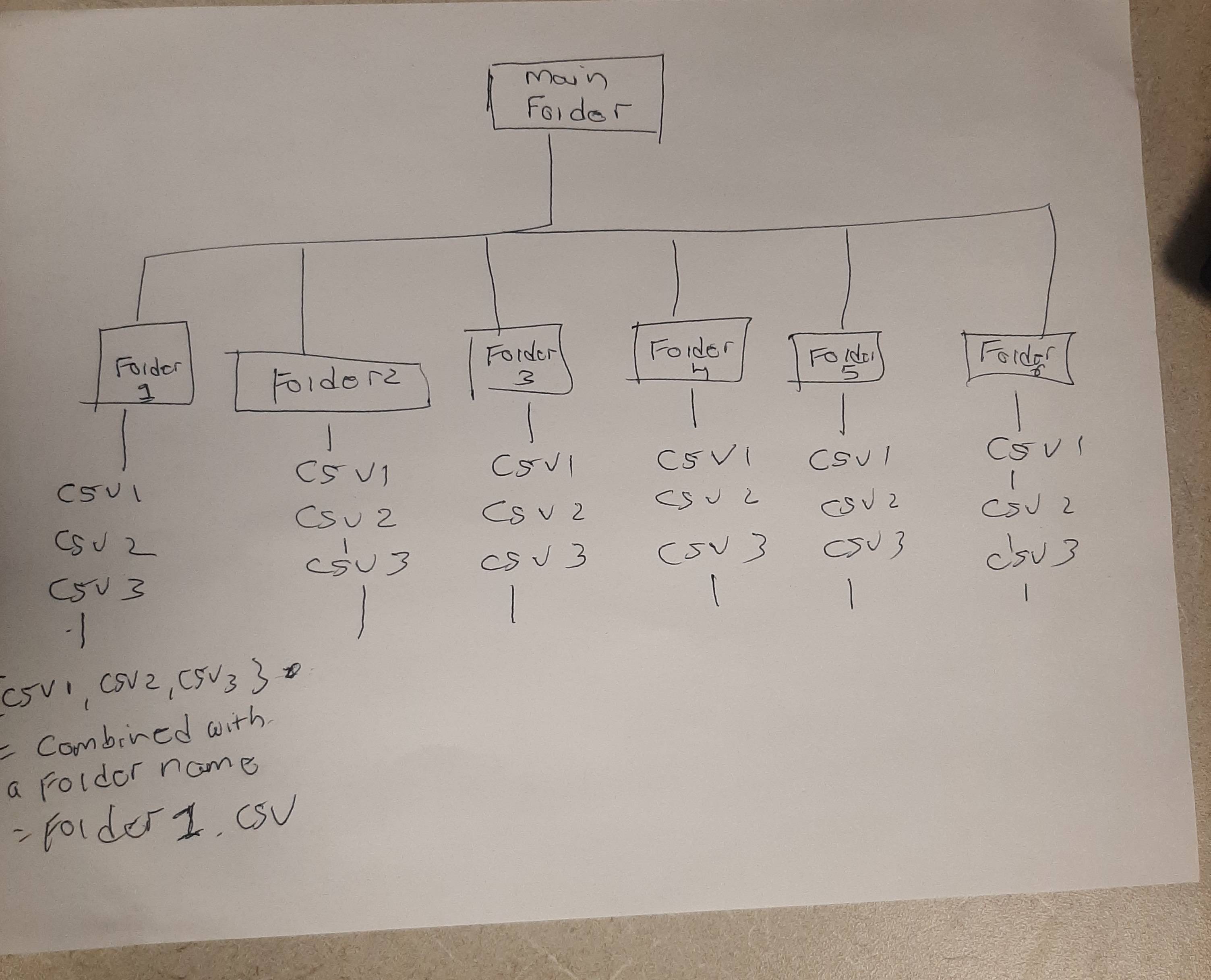

I want to loop through a number of folders, concatenate all the .csv files in each one, and output a combined[folder name].csv for EACH folder, via a batch file. For example, for Folder1 the concatenated output file in that folder would be combinedFolder1.csv. How would I go about doing that? I can do it for each individual folder, but I would like to batch-process them. Thanks! I have attached a picture, and here is my code for a single folder:

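```python
import glob
import os

import pandas as pd

# Every .csv file in folder1
joined_files = os.path.join("C:/Users/user/Desktop/Main_folder/folder1/", "*.csv")
joined_list = glob.glob(joined_files)
df = pd.concat(map(pd.read_csv, joined_list), ignore_index=True)
# Note: this writes to the current working directory, not into folder1
df.to_csv("folder1.csv", index=False)
```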
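One way to get the batch version is to wrap the single-folder code in a loop over the subfolders of Main_folder. This is a minimal sketch, assuming every immediate subfolder should be processed and that each output should be named combined<folder name>.csv and written inside that folder, as described above; the function name `combine_all_folders` is hypothetical.

```python
import glob
import os

import pandas as pd


def combine_all_folders(main_folder):
    """Write combined<name>.csv into each immediate subfolder of main_folder.

    Returns the list of output paths that were written.
    """
    written = []
    for name in sorted(os.listdir(main_folder)):
        folder = os.path.join(main_folder, name)
        if not os.path.isdir(folder):
            continue  # skip loose files sitting directly in the main folder
        output = os.path.join(folder, "combined" + name + ".csv")
        # Every CSV in this folder except an output left by an earlier run
        csv_files = [f for f in sorted(glob.glob(os.path.join(folder, "*.csv")))
                     if os.path.abspath(f) != os.path.abspath(output)]
        if not csv_files:
            continue  # pd.concat raises ValueError on an empty list
        df = pd.concat(map(pd.read_csv, csv_files), ignore_index=True)
        df.to_csv(output, index=False)
        written.append(output)
    return written


# Usage (hypothetical path taken from the question):
# combine_all_folders("C:/Users/user/Desktop/Main_folder")
```

Excluding the output file matters because it also matches `*.csv`; without that check, running the script twice would fold the previous combined file back into the new one.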

Answer:

You can use something like this:

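```python
import pathlib

import pandas as pd

main_folder = pathlib.Path('data')

# Every immediate subdirectory of the main folder is processed separately
data_folders = [d for d in main_folder.iterdir() if d.is_dir()]

for data_folder in data_folders:
    output = data_folder / f"{data_folder.name}.csv"
    # Skip the output file so re-running does not concatenate it into itself
    data = [pd.read_csv(csvfile) for csvfile in data_folder.glob('*.csv') if csvfile != output]
    if data:  # pd.concat raises ValueError on an empty list
        pd.concat(data, ignore_index=True).to_csv(output, index=False)
```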

Folder structure:

```
data
├── Folder1
│   ├── file1.csv
│   ├── file2.csv
│   └── Folder1.csv
└── Folder2
    ├── file1.csv
    ├── file2.csv
    └── Folder2.csv

2 directories, 6 files
```
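To try this approach without touching real data, the sketch below builds a small tree like the one above in a temporary directory and runs the same loop over it. The helper names `make_sample_tree` and `combine_subfolders` and the sample column `x` are hypothetical; like the answer, it names each output after its folder.

```python
import pathlib
import tempfile

import pandas as pd


def combine_subfolders(main_folder):
    """Concatenate the CSVs in each subfolder into <folder name>.csv inside it."""
    main_folder = pathlib.Path(main_folder)
    for data_folder in sorted(d for d in main_folder.iterdir() if d.is_dir()):
        output = data_folder / f"{data_folder.name}.csv"
        # Read every CSV except the output itself, in a stable (sorted) order
        frames = [pd.read_csv(p) for p in sorted(data_folder.glob("*.csv")) if p != output]
        if frames:
            pd.concat(frames, ignore_index=True).to_csv(output, index=False)


def make_sample_tree(root):
    """Create root/Folder1 and root/Folder2, each holding file1.csv and file2.csv."""
    root = pathlib.Path(root)
    for name in ("Folder1", "Folder2"):
        folder = root / name
        folder.mkdir(parents=True)
        pd.DataFrame({"x": [1, 2]}).to_csv(folder / "file1.csv", index=False)
        pd.DataFrame({"x": [3]}).to_csv(folder / "file2.csv", index=False)
    return root


with tempfile.TemporaryDirectory() as tmp:
    root = make_sample_tree(tmp)
    combine_subfolders(root)
    # Prints the 6 CSV files: two inputs plus one combined file per folder
    for path in sorted(root.rglob("*.csv")):
        print(path.relative_to(root))
```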

Answer:

You can use this. It loops over a list of folder names (`my_list`, which you need to define), changes into each folder, and writes the combined file there:

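```python
import glob
import os

import pandas as pd

# Example folder names; replace with the subfolders you want to process
my_list = ["Folder1", "Folder2"]

for i in my_list:
    directory_name = "C:/Users/user/Desktop/Main_folder/{0}".format(i)
    os.chdir(directory_name)
    extension = 'csv'
    output_name = "{0}.csv".format(i)
    # Every CSV in the folder except a previously written output file
    all_csvs = [f for f in glob.glob('*.{}'.format(extension)) if f != output_name]
    combined_csv = pd.concat([pd.read_csv(f) for f in all_csvs], ignore_index=True)
    combined_csv.to_csv(output_name, index=False, encoding='utf-8-sig')
```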
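Two details of that answer may be worth changing: `my_list` has to be filled in by hand, and `os.chdir` changes the working directory for the rest of the script. Below is a minimal sketch that builds the folder list with `os.scandir` and passes full paths instead of changing directory. The function name `combine_listed_folders` and its optional `folder_names` parameter are hypothetical.

```python
import glob
import os

import pandas as pd


def combine_listed_folders(main_folder, folder_names=None):
    """Combine the CSVs of each named subfolder into <name>.csv inside it.

    If folder_names is None, every immediate subfolder of main_folder is used.
    The current working directory is never changed.
    """
    if folder_names is None:
        folder_names = sorted(e.name for e in os.scandir(main_folder) if e.is_dir())
    for name in folder_names:
        directory_name = os.path.join(main_folder, name)
        output_path = os.path.join(directory_name, "{0}.csv".format(name))
        # Full paths to every CSV in the folder, skipping an earlier output
        all_csvs = [f for f in sorted(glob.glob(os.path.join(directory_name, "*.csv")))
                    if os.path.abspath(f) != os.path.abspath(output_path)]
        if not all_csvs:
            continue  # nothing to combine in this folder
        combined_csv = pd.concat([pd.read_csv(f) for f in all_csvs], ignore_index=True)
        combined_csv.to_csv(output_path, index=False, encoding="utf-8-sig")
```

For example, `combine_listed_folders("C:/Users/user/Desktop/Main_folder")` would process every subfolder, while passing a list such as `["Folder1"]` restricts it to the named ones.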