I'm unable to get OpenGL (with GLFW) to render anything to the screen. I can't even set a clear color and have it displayed when I run my application; I'm consistently presented with a black screen.

I have installed the requisite dependencies on my system and set up the build environment so that my application (and its dependencies) compiles without error. Here is a snippet of the problematic code. You will note that much of the rendering code has been commented out. For now, it is sufficient to have the clear color I chose displayed, to verify that everything is set up correctly:

    // Include standard headers
    #include <stdio.h>
    #include <stdlib.h>

    // Include GLEW. Always include it before gl.h and glfw3.h, since it's a bit magic.
    #include <GL/glew.h>

    // Include GLFW
    #include <GLFW/glfw3.h>

    // Include GLM
    #include <glm/glm.hpp>

    #include <GL/glu.h>
    #include <common/shader.h>
    #include <iostream>

    using namespace glm;

    int main()
    {
        glewExperimental = true; // Needed for core profile

        // Initialise GLFW
        if( !glfwInit() )
        {
            fprintf( stderr, "Failed to initialize GLFW\n" );
            return -1;
        }

        // Open a window and create its OpenGL context
        GLFWwindow* window; // (In the accompanying source code, this variable is global for simplicity)
        window = glfwCreateWindow( 1024, 768, "Tutorial 02", NULL, NULL);
        if( window == NULL ){
            fprintf( stderr, "Failed to open GLFW window. If you have an Intel GPU, they are not 3.3 compatible. Try the 2.1 version of the tutorials.\n" );
            glfwTerminate();
            return -1;
        }
        glfwMakeContextCurrent(window);

        // Initialize GLEW
        //glewExperimental=true; // Needed in core profile
        if (glewInit() != GLEW_OK) {
            fprintf(stderr, "Failed to initialize GLEW\n");
            return -1;
        }

        // INIT VERTEX ARRAY OBJECT (VAO)...
        // Create the Vertex Array Object (VAO)
        GLuint VertexArrayID;
        // Generate 1 vertex array object and store its identifier in VertexArrayID.
        glGenVertexArrays(1, &VertexArrayID);
        // Bind the Vertex Array Object (VAO) associated with the specified identifier.
        glBindVertexArray(VertexArrayID);

        // Create an array of 3 vectors which represents 3 vertices
        static const GLfloat g_vertex_buffer_data[] = {
            -1.0f, -1.0f, 0.0f,
             1.0f, -1.0f, 0.0f,
             0.0f,  1.0f, 0.0f,
        };

        // INIT VERTEX BUFFER OBJECT (VBO)...
        // This will identify our vertex buffer
        GLuint VertexBufferId;
        // Generate 1 buffer, put the resulting identifier in VertexBufferId
        glGenBuffers(1, &VertexBufferId);
        // Bind the Vertex Buffer Object (VBO) associated with the specified identifier.
        glBindBuffer(GL_ARRAY_BUFFER, VertexBufferId);
        // Give our vertices to OpenGL.
        glBufferData(GL_ARRAY_BUFFER, sizeof(g_vertex_buffer_data), g_vertex_buffer_data, GL_STATIC_DRAW);

        // Compile our vertex and fragment shaders into a shader program.
        /*
        GLuint programId = LoadShaders("../tutorial2-drawing-triangles/SimpleVertexShader.glsl","../tutorial2-drawing-triangles/SimpleFragmentShader.glsl");
        if(programId == -1){
            printf("An error occurred whilst attempting to load one or more shaders. Exiting....");
            exit(-1);
        }
        //glUseProgram(programId); //use our shader program
        */

        // Ensure we can capture the escape key being pressed below
        glfwSetInputMode(window, GLFW_STICKY_KEYS, GL_TRUE);

        do{
            // Clear the screen. It's not mentioned before Tutorial 02, but it can cause flickering, so it's there nonetheless.
            glClearColor(8.0f, 0.0f, 0.0f, 0.3f);
            //glClearColor(1.0f, 1.0f, 0.0f, 1.0f);
            glClear(GL_COLOR_BUFFER_BIT);

            // DRAW OUR TRIANGLE...
            /*
            glBindBuffer(GL_ARRAY_BUFFER, VertexBufferId);
            glEnableVertexAttribArray(0); // 1st attribute buffer : vertices
            glVertexAttribPointer(
                0,        // attribute 0. No particular reason for 0, but must match the layout in the shader.
                3,        // size
                GL_FLOAT, // type
                GL_FALSE, // normalized?
                0,        // stride
                (void*)0  // array buffer offset
            );
            // plot the triangle !
            glDrawArrays(GL_TRIANGLES, 0, 3); // Starting from vertex 0; 3 vertices total -> 1 triangle
            glDisableVertexAttribArray(0); // clean up attribute array
            */

            // Swap buffers
            glfwSwapBuffers(window);

            // Poll for and process events.
            glfwPollEvents();

        } // Check if the ESC key was pressed or the window was closed
        while( glfwGetKey(window, GLFW_KEY_ESCAPE ) != GLFW_PRESS &&
               glfwWindowShouldClose(window) == 0 );
    }

Again, this is pretty straightforward as far as OpenGL goes. All rendering logic, shader loading, etc. has been commented out; I'm just trying to set a clear color and have it displayed, to be sure my environment is configured correctly. To build the application I'm using Qt Creator with a custom CMake file. I can post the CMake file if you think it may help determine the problem.

CodePudding user response:

So I managed to solve the problem. I'll attempt to succinctly outline the source of the problem and how I arrived at a resolution, in the hope that it may be useful to others who encounter the same issue:

In a nutshell, the source of the problem was a driver issue. I neglected to mention that I was actually running OpenGL inside an Ubuntu Mate 18.0 VM (via Parallels 16) on a MacBook Pro (with dedicated graphics). Therein lies the problem: until very recently, Parallels and Ubuntu simply did not support more modern OpenGL versions (3.3 and upwards). I discovered this by adding the following lines to the posted code, before the call to glfwCreateWindow, in order to force a specific OpenGL version:

    glfwWindowHint(GLFW_CONTEXT_VERSION_MAJOR, 3);
    glfwWindowHint(GLFW_CONTEXT_VERSION_MINOR, 3);
    glfwWindowHint(GLFW_OPENGL_PROFILE, GLFW_OPENGL_CORE_PROFILE);

On doing this, the application immediately began to crash, and glGetError() reported that I needed to downgrade to an earlier version of OpenGL because 3.3 was not compatible with my system.
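
A related check is to look at the version string the driver reports and compare it against the version being requested. The following is a minimal sketch of that comparison, not part of the original code. The helper names `parseGlVersion` and `glVersionAtLeast` are hypothetical, and the sketch assumes the string starts with `major.minor` (optionally after a non-numeric prefix such as "OpenGL ES "), which is the layout used by `glGetString(GL_VERSION)`.

```cpp
#include <cstdio>
#include <string>
#include <utility>

// Hypothetical helper: extracts {major, minor} from a GL_VERSION-style string.
// Returns {0, 0} if no "major.minor" pair can be found.
std::pair<int, int> parseGlVersion(const std::string& version)
{
    // Skip any non-digit prefix such as "OpenGL ES ".
    const std::size_t start = version.find_first_of("0123456789");
    if (start == std::string::npos) {
        return {0, 0};
    }

    int major = 0;
    int minor = 0;
    if (std::sscanf(version.c_str() + start, "%d.%d", &major, &minor) != 2) {
        return {0, 0};
    }
    return {major, minor};
}

// Hypothetical helper: true if the reported version is at least wantMajor.wantMinor.
// std::pair compares lexicographically, so major is compared before minor.
bool glVersionAtLeast(const std::string& version, int wantMajor, int wantMinor)
{
    return parseGlVersion(version) >= std::make_pair(wantMajor, wantMinor);
}
```

In the posted program, the input would come from `glGetString(GL_VERSION)` after `glfwMakeContextCurrent(window)`, cast from `const GLubyte*` to `const char*` and checked for NULL first. A result below 3.3 from `glVersionAtLeast(versionString, 3, 3)` would point to the same driver limitation described here.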

The solution was two-fold:

- Update Parallels to version 17, which now includes a dedicated, third-party virtual GPU (virGL) that is capable of running OpenGL 3.3 code.

- Update Ubuntu, or at the very least the kernel, as virGL only works with Linux kernel versions 5.10 and above. (Ubuntu Mate 18 only ships with kernel version 5.04.)
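
To check the second point from inside the guest, the running kernel release can be compared against the required minimum. This is a rough sketch under stated assumptions: `kernelAtLeast` and `runningKernelRelease` are hypothetical helper names, the 5.10 threshold is simply the figure quoted above, and the release string is assumed to start with `major.minor` (as in "5.10.0-8-generic").

```cpp
#include <cstdio>
#include <string>
#include <sys/utsname.h>

// Hypothetical helper: true if a kernel release string such as
// "5.10.0-8-generic" is at least wantMajor.wantMinor.
bool kernelAtLeast(const std::string& release, int wantMajor, int wantMinor)
{
    int major = 0;
    int minor = 0;
    if (std::sscanf(release.c_str(), "%d.%d", &major, &minor) != 2) {
        return false; // Unrecognised format: treat the requirement as not met.
    }
    return major > wantMajor || (major == wantMajor && minor >= wantMinor);
}

// Hypothetical helper: returns the release string of the running kernel
// (the same value `uname -r` prints), or an empty string on failure.
std::string runningKernelRelease()
{
    utsname info{};
    if (uname(&info) != 0) {
        return {};
    }
    return info.release;
}
```

Calling `kernelAtLeast(runningKernelRelease(), 5, 10)` at startup would give an early hint that the kernel needs updating before any OpenGL calls are made.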

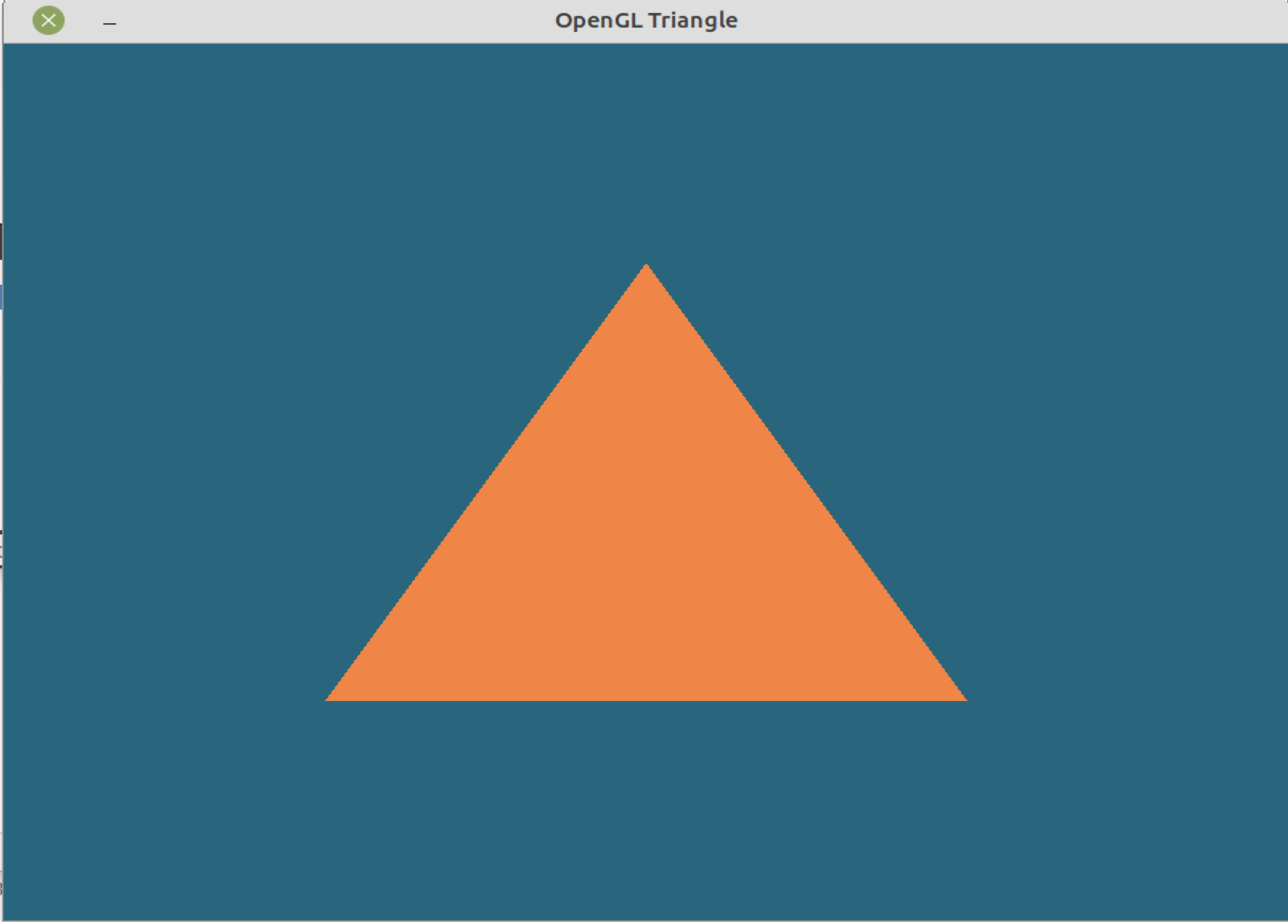

That's it. Making the changes described above enabled me to run the code exactly as posted and successfully render a basic triangle to the screen.