I'm looking through some different neural network architectures and trying to piece together how to recreate them on my own.

One issue I'm running into is the functional difference between the Concatenate() and Add() layers in Keras. It seems like they accomplish similar things (combining multiple layers together), but I don't quite see the real difference between the two.

Here's a sample Keras model that takes two separate inputs and then combines them:

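# Imports assume the Keras bundled with TensorFlow (tensorflow.keras)

from tensorflow.keras.layers import Input, Conv2D, BatchNormalization, ReLU, Add, Concatenate, Flatten, Dense

from tensorflow.keras.models import Model

inputs1 = Input(shape = (32, 32, 3))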

inputs2 = Input(shape = (32, 32, 3))

x1 = Conv2D(kernel_size = 24, strides = 1, filters = 64, padding = "same")(inputs1)

x1 = BatchNormalization()(x1)

x1 = ReLU()(x1)

x1 = Conv2D(kernel_size = 24, strides = 1, filters = 64, padding = "same")(x1)

x2 = Conv2D(kernel_size = 24, strides = 1, filters = 64, padding = "same")(inputs2)

x2 = BatchNormalization()(x2)

x2 = ReLU()(x2)

x2 = Conv2D(kernel_size = 24, strides = 1, filters = 64, padding = "same")(x2)

add = Concatenate()([x1, x2])

out = Flatten()(add)

out = Dense(24, activation = 'softmax')(out)

out = Dense(10, activation = 'softmax')(out)

out = Flatten()(out)

mod = Model([inputs1, inputs2], out)

I can substitute out the Add() layer with the Concatenate() layer and everything works fine, and the models seem similar, but I have a hard time understanding the difference.

For reference, here's the plot of each one with Keras's plot_model function:

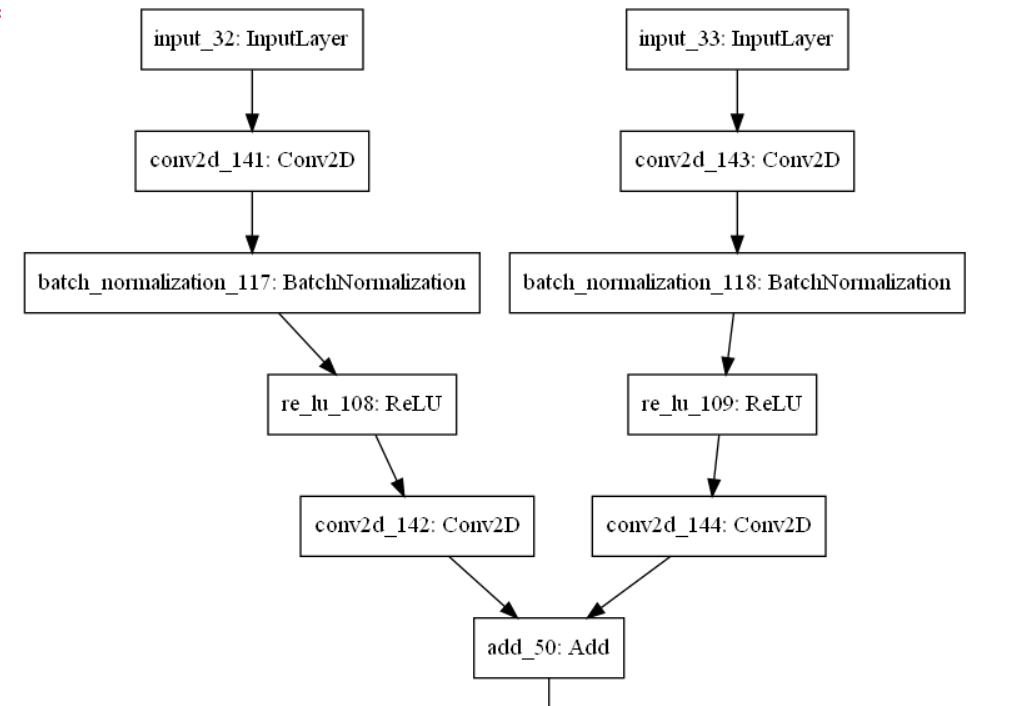

KERAS MODEL WITH ADDED LAYERS:

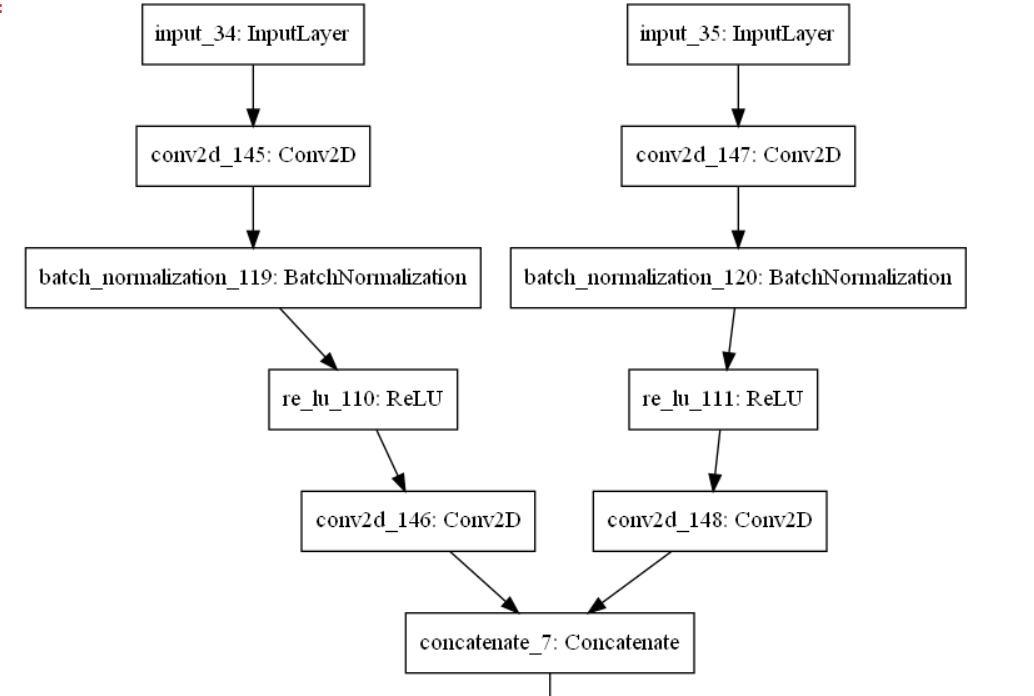

KERAS MODEL WITH CONCATENATED LAYERS:

I notice that the model is bigger when I concatenate than when I add. Is it the case that with a concatenation you just stack the weights from the previous layers on top of each other, and with an Add() you add the values together?

It seems like it should be more complicated, but I'm not sure.

CodePudding user response:

As you said, both of them combine their inputs, but they combine them in different ways. Their names already suggest their usage.

Add(): the inputs are added together element-wise.

For example (assume batch_size=1)

x1 = [[0, 1, 2]]

x2 = [[3, 4, 5]]

x = Add()([x1, x2])

then x should be [[3, 5, 7]], where each element is the sum of the corresponding input elements.

Notice that the input shapes are (1, 3) and (1, 3), and the output shape is also (1, 3).

Concatenate(): the inputs are joined into one larger tensor.

For example (assume batch_size=1)

x1 = [[0, 1, 2]]

x2 = [[3, 4, 5]]

x = Concatenate()([x1, x2])

then x should be [[0, 1, 2, 3, 4, 5]], where the inputs are joined end to end along the last axis.

Notice that the input shapes are (1, 3) and (1, 3), while the output shape is (1, 6).

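The same behavior applies when the tensors have more dimensions: Add() expects inputs of the same shape and returns that shape, while Concatenate() joins the inputs along one axis (the last one by default) and expects the other dimensions to match.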
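The two toy examples can be run directly if the plain lists are replaced with tensors. This is a minimal sketch, assuming the Keras bundled with TensorFlow 2 (tensorflow.keras) and eager execution. The names f1, f2 and the result variables are made up for illustration; the (1, 32, 32, 64) feature maps mimic the Conv2D outputs in the question.

```python
import tensorflow as tf
from tensorflow.keras.layers import Add, Concatenate

# Toy inputs from the examples above, as float tensors of shape (1, 3).
x1 = tf.constant([[0.0, 1.0, 2.0]])
x2 = tf.constant([[3.0, 4.0, 5.0]])

added = Add()([x1, x2])            # element-wise sum, shape stays (1, 3)
joined = Concatenate()([x1, x2])   # joined along the last axis, shape (1, 6)

print(added.numpy().tolist())   # [[3.0, 5.0, 7.0]]
print(joined.numpy().tolist())  # [[0.0, 1.0, 2.0, 3.0, 4.0, 5.0]]

# Hypothetical feature maps shaped like the question's Conv2D outputs:
# (batch, height, width, channels) = (1, 32, 32, 64).
f1 = tf.zeros((1, 32, 32, 64))
f2 = tf.ones((1, 32, 32, 64))

added_maps = Add()([f1, f2])                  # shape (1, 32, 32, 64)
joined_maps = Concatenate()([f1, f2])         # default axis=-1: shape (1, 32, 32, 128)
joined_rows = Concatenate(axis=1)([f1, f2])   # axis=1: shape (1, 64, 32, 64)

print(added_maps.shape, joined_maps.shape, joined_rows.shape)
```

With the default axis, Concatenate() doubles the channel count here, while Add() leaves every dimension unchanged.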

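Concatenate() creates a bigger model for a simple reason: its output is as large as all of its inputs combined along the concatenation axis, so the layer that follows it needs more weights. The output of Add() has the same shape as each input. Neither layer has weights of its own; both operate on the outputs (activations) of the previous layers, not on their weights.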
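The size difference in the question's model can be checked by hand. The sketch below assumes both branches output (32, 32, 64) feature maps, as in the question, and counts only the parameters of the first Dense(24) layer after Flatten(), since that is the only layer whose input size changes. The helper dense_params is a made-up name for illustration.

```python
def dense_params(n_inputs, n_units):
    """Weights plus biases of a fully connected layer."""
    return n_inputs * n_units + n_units

height, width, channels = 32, 32, 64

# Add() keeps 64 channels; Concatenate() stacks both branches into 128 channels.
flat_add = height * width * channels
flat_concat = height * width * (2 * channels)

params_add = dense_params(flat_add, 24)
params_concat = dense_params(flat_concat, 24)

print(params_add)     # 1572888
print(params_concat)  # 3145752
```

The convolution and batch-normalization layers are identical in both variants, so under these assumptions the whole difference comes from the Dense layer that receives the merged tensor.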

For more information about add/concatenate, and other ways to combine multiple inputs, see this