I am trying to host an application in AWS Elastic Kubernetes Service (EKS). I configured the EKS cluster using the AWS Console, then configured the node group and added a node to the cluster, and everything is working fine.

In order to connect to the cluster, I spun up an EC2 instance (CentOS 7) and configured the following:

1. Installed docker, kubeadm, kubelet and kubectl.

2. Installed and configured AWS CLI v2.

To authenticate to the EKS cluster, I attached an IAM role to the EC2 instance with the following AWS managed policies:

1. AmazonEKSClusterPolicy

2. AmazonEKSWorkerNodePolicy

3. AmazonEC2ContainerRegistryReadOnly

4. AmazonEKS_CNI_Policy

5. AmazonElasticContainerRegistryPublicReadOnly

6. EC2InstanceProfileForImageBuilderECRContainerBuilds

7. AmazonElasticContainerRegistryPublicFullAccess

8. AWSAppRunnerServicePolicyForECRAccess

9. AmazonElasticContainerRegistryPublicPowerUser

10. SecretsManagerReadWrite

After this, I ran the following commands to connect to the EKS Cluster:

1. aws sts get-caller-identity

2. aws eks update-kubeconfig --name eks-cluster --region ap-south-1

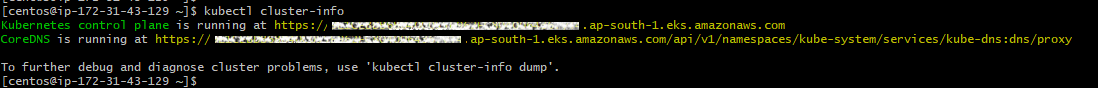

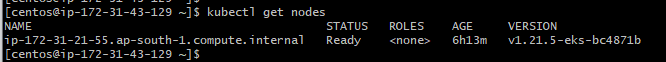

When I ran kubectl cluster-info and kubectl get nodes, I got the following:

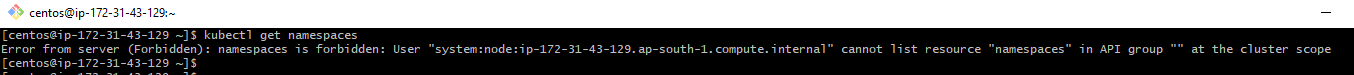

However, when I run kubectl get namespaces, I get a Forbidden error.

I get the same kind of error when I try to create namespaces in the EKS cluster. I'm not sure what I'm missing here.

Error from server (Forbidden): error when creating "namespace.yml": namespaces is forbidden: User "system:node:ip-172-31-43-129.ap-south-1.compute.internal" cannot create resource "namespaces" in API group "" at the cluster scope

As an alternative, I created an IAM user with administrative permissions, generated an AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY for it, and used aws configure to set up those credentials on the EC2 instance.

Ran the following commands:

1. aws sts get-caller-identity

2. aws eks update-kubeconfig --name eks-cluster --region ap-south-1

3. aws eks update-kubeconfig --name eks-cluster --region ap-south-1 --role-arn arn:aws:iam::XXXXXXXXXXXX:role/EKS-Cluster-Role

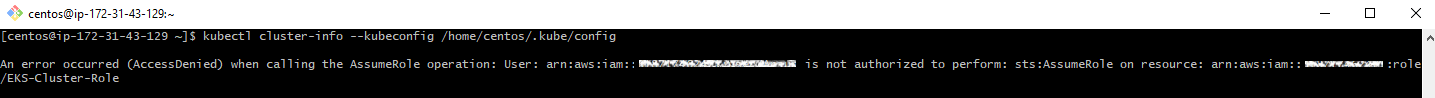

After running kubectl cluster-info --kubeconfig /home/centos/.kube/config, I got the following error:

An error occurred (AccessDenied) when calling the AssumeRole operation: User: arn:aws:iam::XXXXXXXXXXXX:user/XXXXX is not authorized to perform: sts:AssumeRole on resource: arn:aws:iam::XXXXXXXXXXXX:role/EKS-Cluster-Role

Does anyone know how to resolve this issue?

CodePudding user response:

Check your cluster role binding, or the user's access to the EKS cluster:

    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      name: eks-console-dashboard-full-access-clusterrole
    rules:
    - apiGroups:
      - ""
      resources:
      - nodes
      - namespaces
      - pods
      verbs:
      - get
      - list
    - apiGroups:
      - apps
      resources:
      - deployments
      - daemonsets
      - statefulsets
      - replicasets
      verbs:
      - get
      - list
    - apiGroups:
      - batch
      resources:
      - jobs
      verbs:
      - get
      - list
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: eks-console-dashboard-full-access-binding
    subjects:
    - kind: Group
      name: eks-console-dashboard-full-access-group
      apiGroup: rbac.authorization.k8s.io
    roleRef:
      kind: ClusterRole
      name: eks-console-dashboard-full-access-clusterrole
      apiGroup: rbac.authorization.k8s.io
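Once the two manifests are saved to a file, one way to apply them and then ask the API server what the current kubectl identity is allowed to do is sketched below. The helper names, the `KUBECTL` override, and the file name `eks-console-full-access.yaml` are assumptions for illustration; `kubectl apply -f` and `kubectl auth can-i` are standard kubectl subcommands.

```shell
#!/bin/sh
# Sketch only: helper names and the manifest file name are hypothetical.
# KUBECTL can be overridden, e.g. KUBECTL="kubectl --kubeconfig /path/to/config".
KUBECTL="${KUBECTL:-kubectl}"

# Apply the ClusterRole and ClusterRoleBinding stored in the given file.
apply_rbac() {
    $KUBECTL apply -f "$1"
}

# Ask the API server whether the current identity may perform
# <verb> on <resource>.
can_i() {
    $KUBECTL auth can-i "$1" "$2"
}

# Usage, run against a real cluster:
#   apply_rbac eks-console-full-access.yaml
#   can_i list namespaces
#   can_i create namespaces
```

Note that the ClusterRole grants only `get` and `list`, so an identity that holds nothing but this role should still be refused when it tries to create namespaces.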

Also check that the aws-auth ConfigMap inside the cluster contains the proper IAM user mapping:

kubectl get configmap aws-auth -n kube-system -o yaml

Read more at: https://aws.amazon.com/premiumsupport/knowledge-center/eks-kubernetes-object-access-error/
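For reference, a user mapping in aws-auth is a list entry under `mapUsers` with the keys `userarn`, `username` and `groups`. The sketch below only prints such an entry so it can be pasted in with `kubectl edit configmap aws-auth -n kube-system`, which has to be run by an identity that already has admin access to the cluster. The helper name `map_user_entry` and the username `eks-user` are assumptions; the ARN is the placeholder from the question, and the group is the one bound in the ClusterRoleBinding above.

```shell
#!/bin/sh
# Sketch only: map_user_entry is a hypothetical helper that prints text;
# it does not talk to AWS or to the cluster.
# Arguments: IAM user ARN, Kubernetes username, Kubernetes group.
map_user_entry() {
    printf '%s\n' "- userarn: $1"
    printf '%s\n' "  username: $2"
    printf '%s\n' "  groups:"
    printf '%s\n' "  - $3"
}

# Placeholder ARN as written in the question; the username is an assumption.
map_user_entry \
    'arn:aws:iam::XXXXXXXXXXXX:user/XXXXX' \
    'eks-user' \
    'eks-console-dashboard-full-access-group'
```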

CodePudding user response:

I found the solution to this issue. Earlier, I had created the AWS EKS cluster using the root user of my AWS account (I think that could have been the initial problem). This time, I created the EKS cluster using an IAM user with Administrator privileges, created a node group in the cluster, and added a worker node.

Next, I configured the AWS CLI on the CentOS EC2 instance using the AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY of that IAM user.

Just like before, I logged into the CentOS EC2 instance and ran the following commands:

1. aws sts get-caller-identity

2. aws eks describe-cluster --name eks-cluster --region ap-south-1 --query cluster.status (To check the status of the Cluster)

3. aws eks update-kubeconfig --name eks-cluster --region ap-south-1

After this, when I ran kubectl get pods and kubectl get namespaces, there were no more issues. In fact, I was able to deploy to the cluster as well.
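As far as I understand, EKS by default gives cluster admin rights to the IAM identity that created the cluster, so it helps to confirm which identity the AWS CLI is actually using before running kubectl. A small sketch for reading the Arn reported by aws sts get-caller-identity (the helper name `classify_arn` is made up for illustration):

```shell
#!/bin/sh
# Sketch only: classify_arn is a hypothetical helper for reading the "Arn"
# field that `aws sts get-caller-identity` reports.
classify_arn() {
    case "$1" in
        arn:aws:iam::*:root)           echo "root" ;;
        arn:aws:iam::*:user/*)         echo "iam-user" ;;
        arn:aws:sts::*:assumed-role/*) echo "assumed-role" ;;
        *)                             echo "unknown" ;;
    esac
}

# Usage on the EC2 instance (requires a configured AWS CLI):
#   classify_arn "$(aws sts get-caller-identity --query Arn --output text)"
```

With only an instance profile attached, the CLI reports an assumed-role ARN; after aws configure with the IAM user's keys, it reports the user ARN, which in my case was the identity that created the cluster.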

So, as a conclusion, I think we should not be creating an EKS cluster using the root user in AWS.

Thanks!