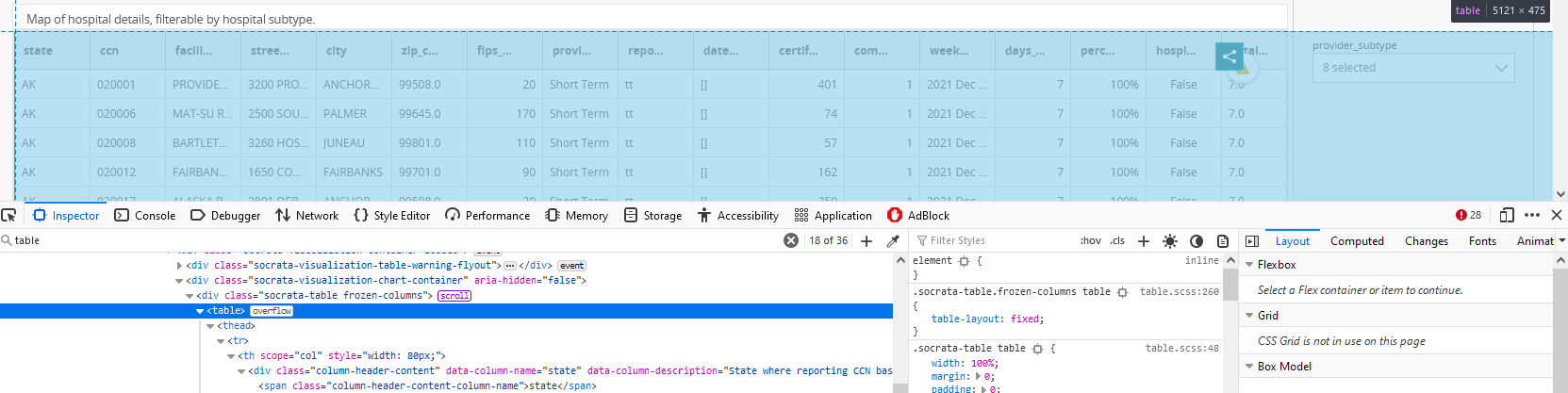

I hacked together the code below to scrape data from an HTML table into a data frame and then click a button to move to the next page, but it gives me an error that says 'invalid selector'.

```python
from selenium import webdriver
from selenium.common.exceptions import TimeoutException
from selenium.webdriver.common.by import By
from selenium.webdriver.support import expected_conditions as EC
from selenium.webdriver.support.wait import WebDriverWait
from bs4 import BeautifulSoup
import time
from time import sleep
import pandas as pd

browser = webdriver.Chrome("C:/Utility/chromedriver.exe")
wait = WebDriverWait(browser, 10)
url = 'https://healthdata.gov/dataset/Hospital-Detail-Map/tagw-nk32'
browser.get(url)

for x in range(1, 5950, 13):
    time.sleep(3)  # wait for the page to finish loading
    df = pd.read_html(browser.find_element_by_xpath("socrata-table frozen-columns").get_attribute('outerHTML'))[0]
    submit_button = browser.find_elements_by_xpath('pager-button-next')[0]
    submit_button.click()
```

I see the table, but I can't reference it.

Any idea what's wrong here?

Answer:

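The 'invalid selector' error is raised by `find_element_by_xpath("socrata-table frozen-columns")`: that string is a pair of class names, not an XPath expression. `pager-button-next` is also a class name, so the button has to be located by its class too. I've managed to find the button with `find_elements_by_css_selector`: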

```python
from io import StringIO

from selenium import webdriver
from selenium.common.exceptions import TimeoutException
from selenium.webdriver.common.by import By
from selenium.webdriver.support import expected_conditions as EC
from selenium.webdriver.support.wait import WebDriverWait
from bs4 import BeautifulSoup
import time
from time import sleep
import pandas as pd

browser = webdriver.Chrome("C:/Utility/chromedriver.exe")
wait = WebDriverWait(browser, 10)
url = 'https://healthdata.gov/dataset/Hospital-Detail-Map/tagw-nk32'
browser.get(url)

frames = []  # one DataFrame per page, combined after the loop
for x in range(1, 5950, 13):
    time.sleep(3)  # wait for the page to finish loading
    # "socrata-table frozen-columns" are class names, so select them with CSS
    table = browser.find_element_by_css_selector(".socrata-table.frozen-columns")
    frames.append(pd.read_html(StringIO(table.get_attribute('outerHTML')))[0])
    # [1] picks the second element that matches the selector
    submit_button = browser.find_elements_by_css_selector('button.pager-button-next')[1]
    submit_button.click()

df = pd.concat(frames, ignore_index=True)  # all scraped pages in one DataFrame
```
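To make the XPath/CSS difference concrete, here is a minimal sketch of a hypothetical helper, `class_selectors` (not part of Selenium), that builds an equivalent CSS selector and XPath expression from a space-separated class attribute such as `socrata-table frozen-columns`. It does not talk to a browser; it assumes the table and the button are identified only by their classes.

```python
def class_selectors(class_attr: str, tag: str = "*") -> tuple[str, str]:
    """Hypothetical helper: build a CSS selector and an XPath expression that
    match a `tag` element whose class attribute contains every class listed
    (space-separated) in `class_attr`."""
    classes = class_attr.split()
    # CSS: each class becomes ".name"; a bare ".a.b" already means "any tag".
    css = ("" if tag == "*" else tag) + "".join(f".{c}" for c in classes)
    # XPath: pad @class with spaces so only whole class tokens match,
    # e.g. "frozen-columns" does not match "frozen-columns-extra".
    predicates = "".join(
        f"[contains(concat(' ', normalize-space(@class), ' '), ' {c} ')]"
        for c in classes
    )
    xpath = f"//{tag}{predicates}"
    return css, xpath


css, xpath = class_selectors("socrata-table frozen-columns")
print(css)  # .socrata-table.frozen-columns
print(xpath)
```

With Selenium 4 you would pass the result as `browser.find_element(By.CSS_SELECTOR, css)` or `browser.find_element(By.XPATH, xpath)`; the `find_element_by_*` helpers used above were removed in Selenium 4.3. The CSS form is shorter, which is why it is usually preferred for class-based lookups.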

Sometimes the pagination hangs, and `submit_button.click()` fails with this error:

```
selenium.common.exceptions.ElementClickInterceptedException:
Message: element click intercepted:
Element <button >...</button>
is not clickable at point (182, 637).
Other element would receive the click: <span >...</span>
```

So consider retrying the click for a while (a timeout) instead of clicking only once. For example, you can use this approach:

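```python
import time

from selenium.common.exceptions import WebDriverException


def click_timeout(element, timeout: int = 60):
    """Try to click `element` about once per second for up to `timeout` seconds."""
    for _ in range(timeout):
        try:
            element.click()
            return  # the click went through, stop retrying
        except WebDriverException:
            # e.g. ElementClickInterceptedException while another element covers the button
            time.sleep(1)
    # final attempt: if it still fails, let the exception reach the caller
    element.click()
```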

This way the element is clicked as soon as it becomes clickable, instead of after a fixed delay.
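A variation on the same idea, sketched under the assumption that you want a reusable helper (the name `retry_until` is hypothetical, not a Selenium API): it measures the timeout against `time.monotonic()` rather than counting loop iterations, so time spent inside slow click attempts counts toward the limit, and it only retries the exception types you pass in.

```python
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def retry_until(
    action: Callable[[], T],
    timeout: float = 60.0,
    interval: float = 1.0,
    exceptions: Tuple[Type[BaseException], ...] = (Exception,),
) -> T:
    """Hypothetical helper: call action() until it stops raising one of
    `exceptions`, or re-raise the last such error once `timeout` seconds pass."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            return action()  # success: hand back whatever the action returned
        except exceptions:
            if time.monotonic() >= deadline:
                raise  # out of time: propagate the most recent error
            time.sleep(interval)  # wait before the next attempt
```

In the scraper this could be called as `retry_until(submit_button.click, timeout=60, exceptions=(WebDriverException,))`. Because the table re-renders when you change pages, a lambda that looks the button up again on every attempt, e.g. `lambda: browser.find_elements_by_css_selector('button.pager-button-next')[1].click()`, avoids retrying on a stale element reference (`StaleElementReferenceException` is also a `WebDriverException`).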