I have a Google Spreadsheet with the following information in specific cells:

- Cell B1 has the URL `http://www.google.com.co/search?q=NASA watching now: site:www.youtube.com`
- Cell B2 has the following formula:

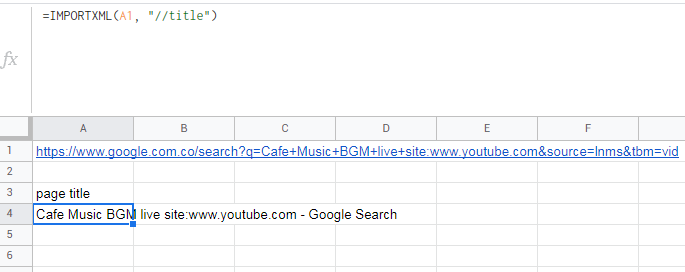

```
=IMPORTXML(B1,"//title")
```

The formula above returns the title of the given URL, in this case the URL stored in cell B1.
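To make concrete what the `//title` XPath selects, here is a minimal JavaScript sketch that pulls the text of the first `<title>` element out of a raw HTML string. It is only an approximation: it uses a regular expression rather than a real HTML parser and XPath engine (IMPORTXML does the fetching and parsing on Google's side), and the name `extractTitle` is hypothetical.

```javascript
// Hypothetical helper: approximates what IMPORTXML(url, "//title") returns
// by grabbing the text of the first <title> element with a regex.
// A real XPath evaluation would use an HTML parser instead.
function extractTitle(html) {
  const match = /<title[^>]*>([\s\S]*?)<\/title>/i.exec(html);
  if (!match) return null; // page has no <title>
  // Collapse internal whitespace, then decode a few common entities.
  // &amp; is decoded last so "&amp;lt;" becomes "&lt;", not "<".
  return match[1]
    .replace(/\s+/g, " ")
    .trim()
    .replace(/&lt;/g, "<")
    .replace(/&gt;/g, ">")
    .replace(/&quot;/g, '"')
    .replace(/&#39;/g, "'")
    .replace(/&amp;/g, "&");
}

// Usage example with a tiny inline page.
const page = "<html><head><title>  NASA &amp; YouTube </title></head></html>";
console.log(extractTitle(page)); // prints: NASA & YouTube
```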

It had been working without problems (since 12/02/2022, in dd/MM/yyyy format) until today (13/02/2022).

I checked the Chrome console (F12 Developer Tools) and got this error:

```
This document requires 'TrustedScript' assignment.
injectIntoContentWindow @ VM364:27
```

Clicking the `VM364:27` link shows the following code:

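```javascript
function injectIntoContentWindow(contentWindow)
{
    // Inject only once per frame.
    if (contentWindow && !injectedFramesHas(contentWindow))
    {
        injectedFramesAdd(contentWindow);
        try
        {
            contentWindow[eventName] = checkRequest;
            // Evaluates the injected function's source as a plain string inside the frame;
            // pages that enforce Trusted Types reject string arguments to eval()
            // unless they define a default policy.
            contentWindow.eval( /* An error with an (X) icon is shown here. */
                "(" + injectedToString() + ")('" + eventName + "', true);"
            );
            delete contentWindow[eventName];
        }
        catch (e) {}
    }
}
```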

Searching online, I could only find a few possible causes of this error:

- A Google Chrome update that made security stricter.
- Chrome extensions: try disabling them and trying again.
- CSP (Content-Security-Policy): to be honest, I don't understand this point. Is the root cause the CSP of the website I'm scraping the data from, or the CSP of Google Sheets?
- The solutions given for this problem are in JavaScript, using DOMPurify.
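On the CSP question: Chrome reports `This document requires 'TrustedScript' assignment` when the page open in the tab sends a Content-Security-Policy containing the `require-trusted-types-for 'script'` directive and some script passes a plain string to a sink such as `eval`. Because IMPORTXML fetches the URL on Google's servers rather than in your browser, the policy behind the console message is most likely the one of the Google Sheets page itself, not the scraped site. Under that assumption, the sketch below parses a CSP header value (which you would copy from the Network tab in DevTools) and reports whether Trusted Types are enforced; `enforcesTrustedScripts` is a hypothetical name.

```javascript
// Hypothetical helper: given the value of a Content-Security-Policy header,
// report whether it enforces Trusted Types, i.e. whether string arguments
// to sinks like eval() or innerHTML are rejected (unless the page defines
// a default policy).
function enforcesTrustedScripts(cspHeaderValue) {
  // A header can hold several policies separated by commas,
  // and each policy holds directives separated by semicolons.
  const directives = cspHeaderValue
    .split(",")
    .flatMap((policy) => policy.split(";"))
    .map((d) => d.trim().toLowerCase())
    .filter((d) => d.length > 0);

  return directives.some((d) => {
    const [name, ...values] = d.split(/\s+/);
    return name === "require-trusted-types-for" && values.includes("'script'");
  });
}

// Usage example with illustrative header values.
const sample = "script-src 'self' 'unsafe-eval'; require-trusted-types-for 'script'";
console.log(enforcesTrustedScripts(sample)); // true
console.log(enforcesTrustedScripts("default-src 'self'")); // false
```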

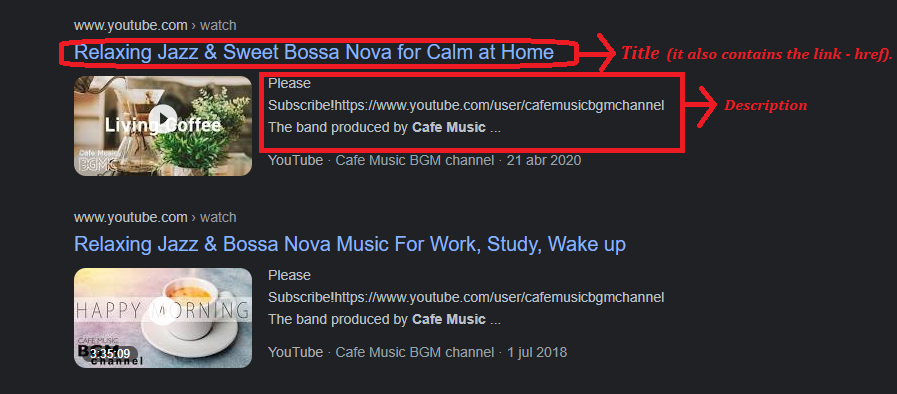

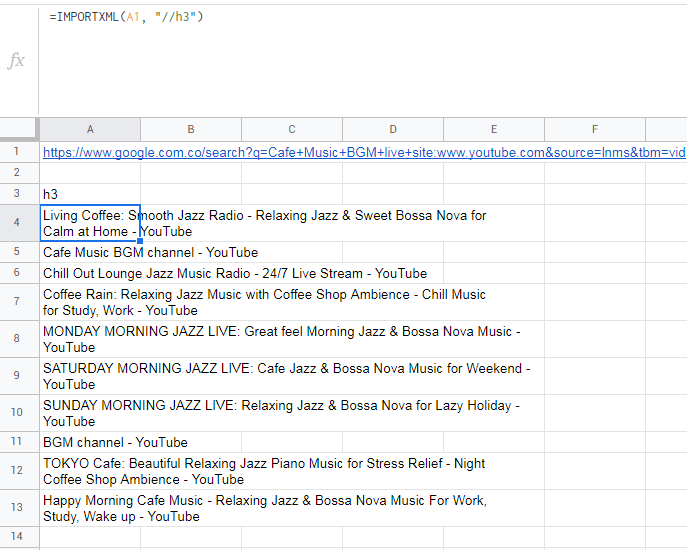

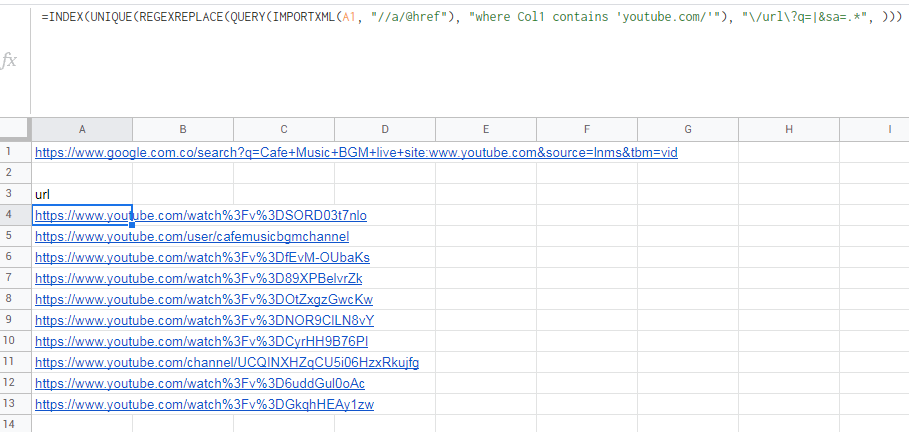

`=IMPORTXML(A1, "//title")`

`=IMPORTXML(A1, "//h3")`

Answer:

TL;DR:

The `This document requires 'TrustedScript' assignment` error is not the root cause of the delay in the `IMPORTXML` function. There are probably other causes (outside the developer's control), but, after all, the code works (see the working Google Spreadsheet). Just wait until the results are shown, or use another way to scrape the desired data.

Since the `This document requires 'TrustedScript' assignment` message keeps appearing in the browser console, yet the code I posted in my question (and the code posted by SO user player0 in their answer) works, it seems to me that the delayed response of `IMPORTXML` might be due to its buggy behavior and/or some restriction Google applied after detecting multiple requests. So, here are my tips:

- Check very closely how the page you will extract the data from is structured before making excessive requests, or you might face a significant delay in the response of the `IMPORTXML` function, as I did.
- Get more familiar with XPath, and check whether the data is dynamically generated in the page; that makes it even harder to get the data with this scraping approach.
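One way to act on the first tip, assuming you are willing to replace IMPORTXML with a Google Apps Script custom function, is to cache each result so that sheet recalculations don't request the same URL again. The sketch below uses the Apps Script services `UrlFetchApp`, `CacheService` and `Utilities`; the function name `FETCH_TITLE_CACHED` and the 6-hour lifetime are illustrative choices, and the title is still extracted with a regex rather than real XPath.

```javascript
// Sketch of a Google Apps Script custom function (hypothetical name) that
// returns a page's <title>, caching results so repeated recalculations
// don't re-request the same URL. Use in a cell as: =FETCH_TITLE_CACHED(B1)
const CACHE_SECONDS = 6 * 60 * 60; // 6 hours, the maximum CacheService allows

function FETCH_TITLE_CACHED(url) {
  const cache = CacheService.getScriptCache();
  // Cache keys are limited to 250 characters, so key on a digest of the URL.
  const digest = Utilities.computeDigest(Utilities.DigestAlgorithm.MD5, url);
  const key = "title:" + Utilities.base64Encode(digest);

  const cached = cache.get(key);
  if (cached !== null) return cached; // served without a network request

  const response = UrlFetchApp.fetch(url, { muteHttpExceptions: true });
  if (response.getResponseCode() !== 200) {
    return "HTTP " + response.getResponseCode(); // failures are not cached
  }
  const match = /<title[^>]*>([\s\S]*?)<\/title>/i.exec(response.getContentText());
  const title = match ? match[1].replace(/\s+/g, " ").trim() : "";
  cache.put(key, title, CACHE_SECONDS);
  return title;
}
```

Caching the short title instead of the whole page keeps each entry well under CacheService's per-value size limit.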

This is the spreadsheet with the desired results, if anyone is interested.¹

¹ Check the "Results-mix" sheet, which contains both the code I managed to create and the code provided by player0 in their answer.

If you want similar results in a less convoluted way, consider another web-scraping strategy, or use official APIs when available.
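As an example of the official-API route for this particular query (YouTube results for "NASA watching now"), the YouTube Data API v3 `search.list` endpoint returns results as JSON, so no HTML scraping or XPath is involved. The Apps Script sketch below assumes you have created an API key in Google Cloud and enabled the YouTube Data API for it; `SEARCH_YOUTUBE_TITLES` and `buildSearchUrl` are hypothetical names, and each call counts against your API quota.

```javascript
// Builds a search.list request URL; each parameter is percent-encoded so
// spaces, colons, etc. in the query stay URL-safe.
function buildSearchUrl(query, apiKey, maxResults) {
  const params = {
    part: "snippet",
    type: "video",
    q: query,
    maxResults: String(maxResults),
    key: apiKey,
  };
  const qs = Object.keys(params)
    .map((k) => encodeURIComponent(k) + "=" + encodeURIComponent(params[k]))
    .join("&");
  return "https://www.googleapis.com/youtube/v3/search?" + qs;
}

// Sketch of a custom function (hypothetical name) that lists video titles
// from the API instead of scraping HTML.
// Usage in a cell: =SEARCH_YOUTUBE_TITLES("NASA", "YOUR_API_KEY")
function SEARCH_YOUTUBE_TITLES(query, apiKey) {
  const url = buildSearchUrl(query, apiKey, 10);
  const response = UrlFetchApp.fetch(url, { muteHttpExceptions: true });
  if (response.getResponseCode() !== 200) {
    return "HTTP " + response.getResponseCode();
  }
  const data = JSON.parse(response.getContentText());
  // Each search result carries its title under snippet.title.
  // Returning a 2-D array makes Sheets spill one title per row.
  return (data.items || []).map((item) => [item.snippet.title]);
}
```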
