I have a CSV file in Azure data lake that , when opened with notepad looks something like this:

a,b,c

d,e,f

g,h,i

j,"foo

bar,baz",l

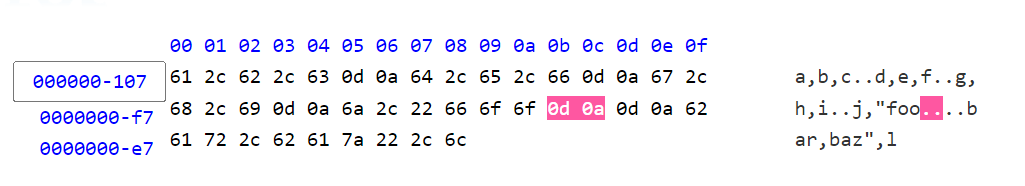

Upon inspection in notepad (vew all symbols) it shows me this:

a,b,c[CR][LF]

d,e,f[CR][LF]

g,h,i[CR][LF]

j,"foo[LF]

[LF]

bar,baz",l[CR][LF]

That is to say normal Windows Carriage Return and Line Feed stuff after each row. With the exceptions that for one of the columns someone inserted a fancy story like such:

foo

bar, baz

My TSQL code to injest the CSV looks like this:

COPY INTO

dbo.SalesLine

FROM

'https://mydatalakeblablabla/folders/myfile.csv'

WITH (

ROWTERMINATOR = '0x0d', -- Tried \n \r\n , 0x0d0a here

FILE_TYPE = 'CSV',

FIELDQUOTE = '"',

FIELDTERMINATOR = ',',

CREDENTIAL = (IDENTITY = 'Managed Identity') --Used to access datalake

)

But the query doesn't work. The common error message in SSMS is:

Bulk load data conversion error (type mismatch or invalid character for the specified codepage) for row 4, column 2 (NAME) in data file

I have no option to correct the faulty rows in the data lake or modify the CSV in any way. Obviously it is much larger file with real data, but I took a simple example.

How can I modify or rescript the TSQL code to correct the CSV when it is being read?

CodePudding user response:

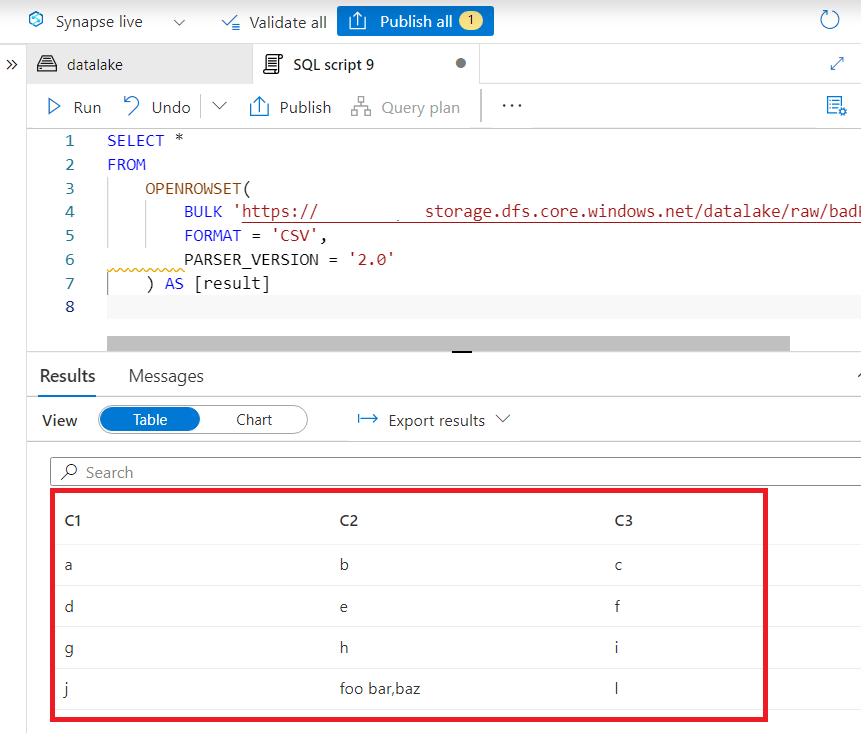

I recreated a similar file and uploaded it to my datalake and serverless SQL pool seemed to manage just fine:

SELECT *

FROM

OPENROWSET(

BULK 'https://somestorage.dfs.core.windows.net/datalake/raw/badFile.csv',

FORMAT = 'CSV',

PARSER_VERSION = '2.0'

) AS [result]

My results:

It probably seems like a bit of a workaround but if the improved parser in serverless is making light work of problems like this, then why not make use of the whole suite that is Azure Synapse Analytics. You could use serverless query as a source in a Copy activity in a Synapse Pipeline and load it to your dedicated SQL pool and that would have the same outcome as using the COPY INTO command.

In the past I've done stuff like written special parsing routines, loaded up the file as one column and split it in the db or used Regular Expressions but if there's a simple solution why not use it.