Context: We have multiple processes watching the same feature flag to decide whether a restart is required, and we are trying to determine whether we need to ensure that exactly one process calls kubectl rollout restart.

Suppose n processes simultaneously call kubectl rollout restart on the same deployment, for n > 3.

Which of the following behaviors is expected to happen?

- The deployment goes down entirely as the overlapping restarts cause different pods to be deleted.

- All the restarts eventually run, but they run serially.

- Some number of restarts m, where m < n, run serially.

- Something else.

I have searched around but haven't found documentation about this behavior, so a pointer would be greatly appreciated.

Answer:

I didn't find official documentation explaining how Kubernetes behaves in the situation described in your question.

However, I wrote a script that spawns 5 rollout restart commands in parallel, and tested it against the deployment.yaml below, which uses the RollingUpdate strategy with maxSurge = maxUnavailable = 1.

deployment.yaml

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: webapp1
spec:
  strategy:
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 1
    type: RollingUpdate
  replicas: 10
  selector:
    matchLabels:
      app: webapp1
  template:
    metadata:
      labels:
        app: webapp1
    spec:
      containers:
      - name: webapp1
        image: katacoda/docker-http-server:latest
        ports:
        - containerPort: 80
```
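With `replicas: 10`, `maxSurge: 1` and `maxUnavailable: 1`, a rolling update may run at most 10 + 1 = 11 pods and must keep at least 10 - 1 = 9 available. The small bash helper below turns these settings into those two bounds. It is a sketch that assumes the rounding rules described in the Kubernetes Deployment documentation (a percentage rounds up for maxSurge and down for maxUnavailable); the function names `to_count` and `rollout_bounds` are hypothetical.

```bash
# Sketch: resolve RollingUpdate maxSurge/maxUnavailable into pod-count bounds.
# Assumption: percentages round UP for maxSurge and DOWN for maxUnavailable.

# to_count VALUE REPLICAS ROUND -> absolute pod count
# VALUE is an integer ("1") or a percentage ("25%"); ROUND is "up" or "down".
to_count() {
  local value=$1 replicas=$2 round=$3
  if [[ $value == *% ]]; then
    local pct=${value%\%}   # strip the trailing "%"
    if [[ $round == up ]]; then
      echo $(( (replicas * pct + 99) / 100 ))   # ceiling division
    else
      echo $(( replicas * pct / 100 ))          # floor division
    fi
  else
    echo "$value"
  fi
}

# rollout_bounds REPLICAS MAX_SURGE MAX_UNAVAILABLE
# Prints "<min available> <max total pods>" for a rolling update.
rollout_bounds() {
  local replicas=$1 surge unavailable
  surge=$(to_count "$2" "$replicas" up)
  unavailable=$(to_count "$3" "$replicas" down)
  # If both resolve to 0 (e.g. maxSurge: 0 with a small maxUnavailable
  # percentage), allow 1 unavailable pod so the rollout can still progress.
  if (( surge == 0 && unavailable == 0 )); then
    unavailable=1
  fi
  echo "$(( replicas - unavailable )) $(( replicas + surge ))"
}
```

For example, `rollout_bounds 10 1 1` prints `9 11`, matching the manifest above.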

script.sh

```bash
# Launch 5 "kubectl rollout restart" calls concurrently, each in the background.
for i in {1..5}; do
  kubectl rollout restart deployment webapp1 > /dev/null 2>&1 &
done
```

Then I executed the script and watched the pods:

```bash
. script.sh; watch -n .5 kubectl get po
```

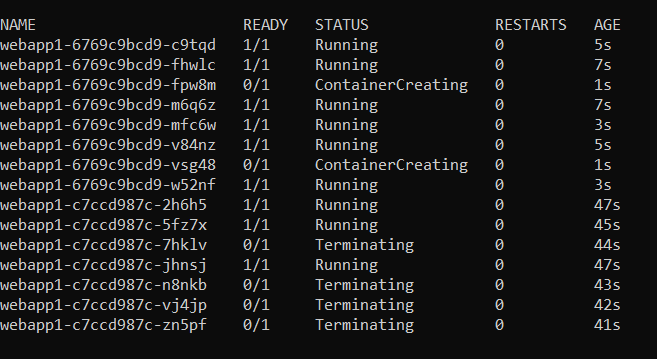

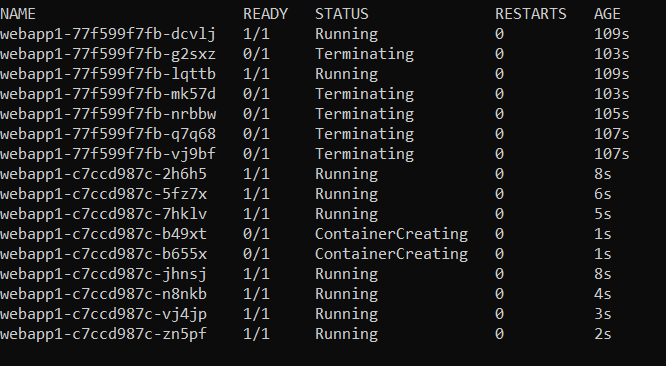

The watch output showed that Kubernetes maintained the desired state defined in deployment.yaml: at no time were fewer than 9 pods in the Running state. The screenshots were taken a few seconds apart.
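Screenshots capture only a couple of moments. To check the minimum over the entire rollout, one option is to sample the pod list in a loop and keep the lowest Running count. This is a minimal sketch, assuming the `app=webapp1` label from the manifest; `count_running` and `watch_min_running` are hypothetical names. It reads the STATUS column, so a pod that is Running but not yet Ready still counts.

```bash
# count_running: reads `kubectl get pods --no-headers` output on stdin and
# counts rows whose STATUS column (3rd field) is exactly "Running".
count_running() {
  awk '$3 == "Running" { n++ } END { print n + 0 }'
}

# watch_min_running SAMPLES INTERVAL: samples the webapp1 pods SAMPLES times,
# sleeping INTERVAL seconds between samples, and prints the lowest count seen.
watch_min_running() {
  local samples=$1 interval=$2 min="" current i
  for (( i = 0; i < samples; i++ )); do
    current=$(kubectl get pods -l app=webapp1 --no-headers 2>/dev/null | count_running)
    if [[ -z $min ]] || (( current < min )); then
      min=$current
    fi
    sleep "$interval"
  done
  echo "$min"
}
```

Run `. script.sh` and then `watch_min_running 120 0.5`; a printed value of 9 or more is consistent with the observation above.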

From this experiment, I conclude that parallel rollout restarts do not take the deployment down: the Deployment controller (part of kube-controller-manager) keeps maintaining the desired state, at least for the 5 concurrent restarts tested here.
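As far as I know, `kubectl rollout restart` does not restart pods directly: it sets a `kubectl.kubernetes.io/restartedAt` annotation on the Deployment's pod template. Each parallel call is therefore just another template update, and the Deployment controller rolls over to the newest template (the Kubernetes Deployment documentation describes this under "Rollover (aka multiple updates in-flight)") while still honoring maxSurge and maxUnavailable. The sketch below builds an equivalent JSON merge patch; `restart_patch` is a hypothetical helper, and the UTC timestamp format is an assumption (kubectl's own value may carry a local offset instead).

```bash
# restart_patch TIMESTAMP: prints a JSON merge patch that sets the
# kubectl.kubernetes.io/restartedAt annotation on the pod template.
# Any change to spec.template makes the Deployment controller start a new rollout.
restart_patch() {
  printf '{"spec":{"template":{"metadata":{"annotations":{"kubectl.kubernetes.io/restartedAt":"%s"}}}}}\n' "$1"
}

# Roughly equivalent manual restart (not executed here):
#   kubectl patch deployment webapp1 --type merge \
#     -p "$(restart_patch "$(date -u +%Y-%m-%dT%H:%M:%SZ)")"
```

Applying such a patch by hand should start a rollout in the same way, because the controller only reacts to the changed template, not to which client changed it.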

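Hence, the expected behavior is the one described by your manifest: the rolling update respects its maxSurge and maxUnavailable limits, so the deployment does not go down.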