I'm confused and can't find anything helpful on Stack Overflow or through Google. I've been reading about how computers store different data types in binary, to better understand C programming and computer science in general. I think I understand how floating-point numbers work, but from what I understood, the first bit in front of the binary point isn't stored, because it is supposedly always 1: we move the binary point to just after the first 1 bit, counting from the left. In that case, since we don't store that first bit, how can we tell a floating-point variable holding 1.0 apart from one holding 0.0?

P.S. Don't hesitate to edit this post if needed. English is not my first language.

CodePudding user response:

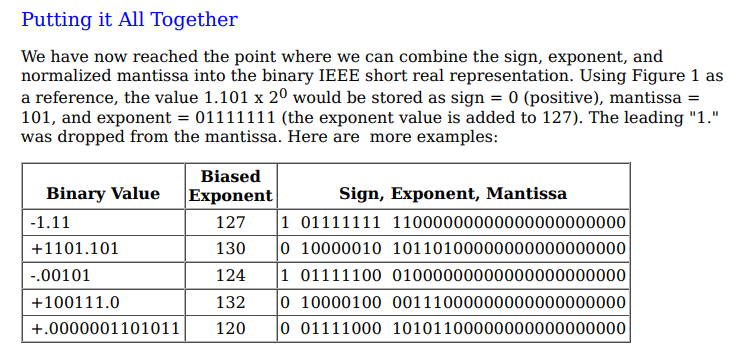

... first bit in front of ... binary point ... isn't included because it is supposedly always 1 ...

Not always.

With common floating-point formats such as float32, when the biased exponent is 0, the significand (often erroneously called the mantissa) has a leading 0, not 1. In that case the exponent is decoded differently too: a biased exponent of 0 uses the same scale as a biased exponent of 1 (2^-126 for float32) rather than one step lower.

"Zero" is usually encoded as an all-zero bit pattern.

                v--- Implied bit
    0 11111110 (1) 111_1111_1111_1111_1111_1111  Maximum value (~3.4e38)
    0 01111111 (1) 000_0000_0000_0000_0000_0000  1.0
    0 00000001 (1) 000_0000_0000_0000_0000_0000  smallest non-zero "normal" (~1.18e-38)
    0 00000000 (0) 111_1111_1111_1111_1111_1111  largest "sub-normal" (~1.18e-38)
    0 00000000 (0) 000_0000_0000_0000_0000_0001  smallest "sub-normal" (~1.40e-45)
    0 00000000 (0) 000_0000_0000_0000_0000_0000  zero
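The rows above can be decoded by hand. Here is a minimal C sketch of that, assuming the IEEE 754 binary32 layout (1 sign bit, 8 exponent bits, 23 stored fraction bits). The name `decode_float32` is made up for this illustration, and infinities and NaNs (biased exponent 255) are deliberately left out.

```c
#include <math.h>
#include <stdint.h>

/* Hypothetical helper: rebuild the value of a binary32 bit pattern by hand.
   Assumes the IEEE 754 binary32 layout; a biased exponent of 255
   (infinity/NaN) is not handled. */
double decode_float32(uint32_t bits) {
    int sign = (int)(bits >> 31);
    int biased_exp = (int)((bits >> 23) & 0xFF);
    uint32_t fraction = bits & 0x7FFFFFu;      /* the 23 stored bits */

    uint32_t significand;
    int exponent;
    if (biased_exp == 0) {
        /* Zero and sub-normals: implied bit is 0, and the exponent is
           the same as for biased exponent 1. */
        significand = fraction;
        exponent = -126;
    } else {
        /* Normal numbers: implied bit is 1, bias is 127. */
        significand = 0x800000u | fraction;
        exponent = biased_exp - 127;
    }

    /* The significand is held as a 24-bit integer, so shift the binary
       point back by 23 places when scaling. */
    double value = ldexp((double)significand, exponent - 23);
    return sign ? -value : value;
}
```

With this sketch, `decode_float32(0x3F800000u)` (the 1.0 row) takes the "normal" branch and gets its leading 1 from the implied bit, while `decode_float32(0x00000000u)` takes the other branch and stays at 0.0. That branch on the biased exponent is what separates the two values.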

-0.0, when supported, has the same encoding as 0.0 except for a 1 in the sign bit.
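To look at the bits your own compiler stores, something like the following can help. It is only a sketch: it assumes `float` is a 32-bit IEEE 754 binary32 on your platform, and the helper names `float_bits` and `format_float_bits` are invented for this example.

```c
#include <stdint.h>
#include <string.h>

/* Hypothetical helper: copy a float's bytes into a 32-bit integer.
   Assumes sizeof(float) == 4. memcpy avoids the aliasing problems
   of casting a float pointer to an integer pointer. */
uint32_t float_bits(float f) {
    uint32_t bits;
    memcpy(&bits, &f, sizeof bits);
    return bits;
}

/* Hypothetical helper: write the pattern as "s eeeeeeee fff...f"
   (1 sign bit, 8 exponent bits, 23 fraction bits).
   out needs room for 32 digits, 2 spaces and the terminator. */
void format_float_bits(float f, char out[35]) {
    uint32_t bits = float_bits(f);
    int pos = 0;
    for (int i = 31; i >= 0; i--) {
        out[pos++] = ((bits >> i) & 1u) ? '1' : '0';
        if (i == 31 || i == 23) {
            out[pos++] = ' ';   /* separate sign | exponent | fraction */
        }
    }
    out[pos] = '\0';
}
```

Calling `format_float_bits(1.0f, buf)` with a `char buf[35]` and printing `buf` gives the stored bits of 1.0 (the implied bit never appears, since it is not stored). On an implementation with signed zeros, `float_bits(0.0f)` and `float_bits(-0.0f)` differ only in the top bit, even though `0.0f == -0.0f` compares as true.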