    import numpy as np
    from sklearn.metrics import roc_curve
    from sklearn.preprocessing import binarize
    import matplotlib.pyplot as plt
    from sklearn.metrics import confusion_matrix
    import warnings
    warnings.filterwarnings('ignore')
    import pandas as pd
    from sklearn.metrics import accuracy_score, recall_score, precision_score

Data

    y_pred = np.array([0.4, 0.2, 0.3, 0.6, 0.1, 0.3, 0.7, 0.2, 0.3, 0.8, 0.3, 0.9, 0.3, 0.2, 0.2,
                       0.4, 0.9, 0.4, 0.3, 0.6, 0.7, 0.2, 0.8, 0.2, 0.6, 0.1, 0.1])

    y_test = np.array(["No", "No", "No", "Yes", "No", "No", "Yes", "No", "No", "Yes", "No", "Yes",
                       "No", "No", "No", "No", "Yes", "No", "No", "No", "No", "Yes",
                       "No", "Yes", "No", "No", "No"])

Main program

I adjust the threshold and, at each value, save the recall and the FPR in lists so I can plot them. Additionally, I save the values returned by the sklearn metrics in separate lists to make sure I'm getting the right values.
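The threshold sweep described above comes down to counting the four confusion-matrix cells at each cut-off. Here is a minimal NumPy sketch of just that counting step on the question's data, assuming (as `binarize` does) that a score is predicted "Yes" only when it is strictly greater than the threshold. `count_cells` is a hypothetical helper name, not part of any library.

```python
import numpy as np


def count_cells(scores, labels, threshold, pos_label="Yes"):
    """Hypothetical helper: return (TP, FP, FN, TN) when scores > threshold are predicted positive."""
    actual_pos = np.asarray(labels) == pos_label
    predicted_pos = np.asarray(scores, dtype=float) > threshold  # strict, like binarize
    tp = int(np.sum(predicted_pos & actual_pos))
    fp = int(np.sum(predicted_pos & ~actual_pos))
    fn = int(np.sum(~predicted_pos & actual_pos))
    tn = int(np.sum(~predicted_pos & ~actual_pos))
    return tp, fp, fn, tn


y_pred = np.array([0.4, 0.2, 0.3, 0.6, 0.1, 0.3, 0.7, 0.2, 0.3, 0.8, 0.3, 0.9, 0.3, 0.2, 0.2,
                   0.4, 0.9, 0.4, 0.3, 0.6, 0.7, 0.2, 0.8, 0.2, 0.6, 0.1, 0.1])
y_test = np.array(["No", "No", "No", "Yes", "No", "No", "Yes", "No", "No", "Yes", "No", "Yes",
                   "No", "No", "No", "No", "Yes", "No", "No", "No", "No", "Yes",
                   "No", "Yes", "No", "No", "No"])

# Same thresholds as the question's loop: 0.1, 0.2, ..., 0.9
for i in range(1, 10):
    threshold = i / 10
    print(threshold, count_cells(y_pred, y_test, threshold))
```

Every metric in the question (recall, precision, FPR) is some ratio of these four counts, so printing them per threshold is a quick way to check any hand-written formula.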

    def recall_fpr(cm):
        """Given a confusion matrix, return the recall and the false positive rate."""
        Recall = round(cm[0, 0] / (cm[0, 0] + cm[0, 1]), 3)  # TP / (TP + FN)
        Precision = round(cm[0, 0] / (cm[0, 0] + cm[1, 0]), 3)  # TP / (TP + FP)
        False_Positive_rate = round((1 - Precision), 3)
        return Recall, False_Positive_rate
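The indexing in `recall_fpr` relies on how `confusion_matrix` lays out its output: rows are the true classes and columns are the predicted classes, both in the order given by `labels`. With `labels=["Yes", "No"]` that makes `cm[0, 0]` TP, `cm[0, 1]` FN, `cm[1, 0]` FP and `cm[1, 1]` TN. A quick check on made-up toy labels (not the question's data), chosen so that every cell holds a different count:

```python
from sklearn.metrics import confusion_matrix

# Made-up toy labels: 3 TP, 1 FN, 2 FP, 4 TN
y_true = ["Yes", "Yes", "Yes", "Yes", "No", "No", "No", "No", "No", "No"]
y_hat = ["Yes", "Yes", "Yes", "No", "Yes", "Yes", "No", "No", "No", "No"]

cm = confusion_matrix(y_true, y_hat, labels=["Yes", "No"])
tp, fn = cm[0, 0], cm[0, 1]  # row 0: actual "Yes" (predicted Yes, predicted No)
fp, tn = cm[1, 0], cm[1, 1]  # row 1: actual "No" (predicted Yes, predicted No)
print(cm)
print("TP:", tp, "FN:", fn, "FP:", fp, "TN:", tn)
```

Note that `recall_fpr` reads `cm[0, 0]`, `cm[0, 1]` and `cm[1, 0]` but never `cm[1, 1]`, the TN cell.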

    list_recall = []
    list_fpr = []
    list_recall_sk = []
    list_fpr_sk = []

    y_pred_2d = y_pred.reshape(-1, 1)  # binarize expects a 2-D array; keep y_pred itself 1-D

    for i in range(1, 10):
        y_pred2 = binarize(y_pred_2d, threshold=i / 10)  # threshold is keyword-only in recent sklearn
        y_pred2 = np.where(y_pred2.ravel() == 1, 'Yes', 'No')
        cm = confusion_matrix(y_test, y_pred2, labels=["Yes", "No"])
        Recall, fpr = recall_fpr(cm)
        list_recall.append(Recall)
        list_fpr.append(fpr)

        # Added only to check that I'm getting the right results
        recall_sk = round(recall_score(y_test, y_pred2, pos_label="Yes"), 3)
        list_recall_sk.append(recall_sk)
        fpr_sk = round(1 - round(precision_score(y_test, y_pred2, pos_label="Yes"), 3), 3)
        list_fpr_sk.append(fpr_sk)

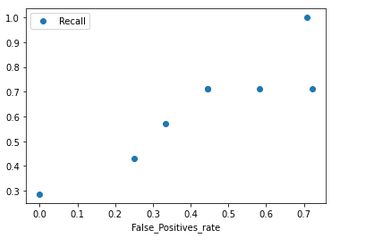
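One detail of the loop matters when comparing against sklearn: `binarize` sets a value to 1 only when it is strictly greater than `threshold`, whereas `roc_curve` counts a sample as positive when its score is greater than or equal to a threshold. A small check of the two conventions:

```python
import numpy as np
from sklearn.preprocessing import binarize

scores = np.array([0.2, 0.3, 0.4])

# binarize needs a 2-D array and maps values strictly greater than threshold to 1
strict = binarize(scores.reshape(-1, 1), threshold=0.3).ravel()
# roc_curve's convention: a score equal to the threshold counts as positive
inclusive = (scores >= 0.3).astype(int)

print(strict)     # [0. 0. 1.]
print(inclusive)  # [0 1 1]
```

For the question's scores, which are all multiples of 0.1, the manual point for `i/10 = 0.3` (scores > 0.3) is therefore the same as `roc_curve`'s point at threshold 0.4 (scores >= 0.4). Both conventions land on the same ROC staircase; they just label its vertices with different thresholds.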

Plot values

    df_threshold = pd.DataFrame({"Recall": list_recall, "False_Positives_rate": list_fpr})
    df_threshold.plot(x='False_Positives_rate', y='Recall', style='o')

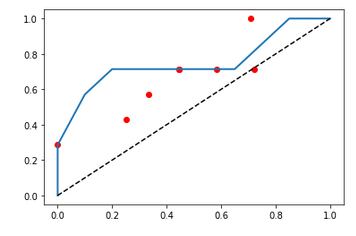

Calculate the metrics with sklearn's methods and overlay my own points:

    fpr_2, tpr_2, thresholds_2 = roc_curve(y_test, y_pred, pos_label="Yes")

    fig, ax = plt.subplots()
    ax.plot(fpr_2, tpr_2, linewidth=2)          # sklearn's ROC curve
    ax.plot([0, 1], [0, 1], 'k--')              # chance diagonal
    ax.scatter(list_fpr, list_recall, c='red')  # my manually computed points
    plt.show()

Why don't the values I calculate match the sklearn metrics?

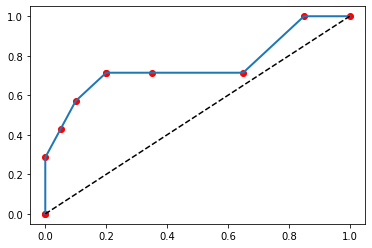
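For reference, `roc_curve` produces one (FPR, TPR) pair per distinct score, treating a sample as positive when its score is >= the threshold, with TPR = TP / (TP + FN) and FPR = FP / (FP + TN). The sketch below recomputes those pairs by hand on the question's data and compares them with sklearn's arrays. It passes `drop_intermediate=False` so that no thresholds are dropped; it is an illustration of the definitions, not a description of sklearn's internal code.

```python
import numpy as np
from sklearn.metrics import roc_curve

y_pred = np.array([0.4, 0.2, 0.3, 0.6, 0.1, 0.3, 0.7, 0.2, 0.3, 0.8, 0.3, 0.9, 0.3, 0.2, 0.2,
                   0.4, 0.9, 0.4, 0.3, 0.6, 0.7, 0.2, 0.8, 0.2, 0.6, 0.1, 0.1])
y_test = np.array(["No", "No", "No", "Yes", "No", "No", "Yes", "No", "No", "Yes", "No", "Yes",
                   "No", "No", "No", "No", "Yes", "No", "No", "No", "No", "Yes",
                   "No", "Yes", "No", "No", "No"])

fpr, tpr, thresholds = roc_curve(y_test, y_pred, pos_label="Yes", drop_intermediate=False)

actual_pos = y_test == "Yes"
n_pos = actual_pos.sum()     # TP + FN: every actual "Yes"
n_neg = (~actual_pos).sum()  # FP + TN: every actual "No"

manual_tpr, manual_fpr = [], []
for t in thresholds:
    predicted_pos = y_pred >= t  # roc_curve's convention: score >= threshold
    manual_tpr.append(np.sum(predicted_pos & actual_pos) / n_pos)   # TP / (TP + FN)
    manual_fpr.append(np.sum(predicted_pos & ~actual_pos) / n_neg)  # FP / (FP + TN)

print(np.allclose(tpr, manual_tpr), np.allclose(fpr, manual_fpr))
```

The first threshold returned by `roc_curve` is above every score, so it yields the (0, 0) starting point of the curve.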

Answer:

FPR is not 1 - precision. The former is FP / (FP + TN); the latter is FP / (FP + TP).
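To see how far apart the two can be, take the question's data at a threshold of 0.5 (scores strictly above 0.5 predicted "Yes", as `binarize` does). Counting by hand gives TP = 5, FN = 2, FP = 4 and TN = 16, so:

$$
1 - \text{precision} = \frac{FP}{FP + TP} = \frac{4}{4 + 5} \approx 0.444,
\qquad
\text{FPR} = \frac{FP}{FP + TN} = \frac{4}{4 + 16} = 0.2
$$

The recall, 5/7 ≈ 0.714, is the same either way; only the x-coordinate of the point moves.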

Correcting the recall_fpr function to use

    False_Positive_rate = round(cm[1, 0] / (cm[1, 0] + cm[1, 1]), 3)  # FP / (FP + TN)

gives the correct ROC curve.
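A minimal end-to-end sketch of the corrected computation, assuming the question's data and its strict `> threshold` rule (written here with `np.where` instead of `binarize`, which gives the same predictions for these 1-D scores). It checks that every corrected (recall, FPR) point lands on a vertex of the curve returned by `roc_curve`. Because both denominators are now class counts (7 positives, 20 negatives), the 0/0 that the `1 - precision` version hits at threshold 0.9 no longer occurs.

```python
import numpy as np
from sklearn.metrics import confusion_matrix, roc_curve

y_pred = np.array([0.4, 0.2, 0.3, 0.6, 0.1, 0.3, 0.7, 0.2, 0.3, 0.8, 0.3, 0.9, 0.3, 0.2, 0.2,
                   0.4, 0.9, 0.4, 0.3, 0.6, 0.7, 0.2, 0.8, 0.2, 0.6, 0.1, 0.1])
y_test = np.array(["No", "No", "No", "Yes", "No", "No", "Yes", "No", "No", "Yes", "No", "Yes",
                   "No", "No", "No", "No", "Yes", "No", "No", "No", "No", "Yes",
                   "No", "Yes", "No", "No", "No"])


def recall_fpr(cm):
    """Return (recall, FPR) from a 2x2 matrix built with labels=["Yes", "No"]."""
    recall = round(cm[0, 0] / (cm[0, 0] + cm[0, 1]), 3)  # TP / (TP + FN)
    fpr = round(cm[1, 0] / (cm[1, 0] + cm[1, 1]), 3)     # FP / (FP + TN)
    return recall, fpr


points = []
for i in range(1, 10):
    predicted = np.where(y_pred > i / 10, "Yes", "No")  # same strict rule as binarize
    cm = confusion_matrix(y_test, predicted, labels=["Yes", "No"])
    points.append(recall_fpr(cm))

roc_fpr, roc_tpr, _ = roc_curve(y_test, y_pred, pos_label="Yes", drop_intermediate=False)
roc_points = np.column_stack([roc_tpr, roc_fpr])  # (TPR, FPR) rows, same order as points

# Each manual (recall, FPR) pair should match some ROC vertex up to the 3-decimal rounding
on_curve = [bool(np.any(np.all(np.abs(roc_points - p) <= 1e-3, axis=1))) for p in points]
print(points)
print(on_curve)
```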