SOLVED: see comment by Marco Cerliani. tl;dr: the target for each output must be passed to the model as a separate array.

I have a neural network with two different targets to estimate:

Target #1 ranges from 0 to infinity; the final single-node dense layer uses a 'linear' activation function.

Target #2 is scaled to 0-1; the final single-node dense layer uses a 'sigmoid' activation function.

Both outputs use MAE loss; however, the MAE for Target #2 is almost as high as the MAE for Target #1. Since Target #2 is scaled to 0-1 and the sigmoid can only output values in 0-1, I would expect the loss for Target #2 never to exceed 1.

Indeed, when I estimate only Target #2 in a single-output model, I always get a loss <1. The problem arises only when using multiple outputs.
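A quick NumPy check of the bound reasoned about above, assuming targets in [0, 1] and a sigmoid on the output: every per-sample absolute error is then at most 1, so their mean is too. The logits and targets below are random, made-up data, not the question's.

```python
import numpy as np

def sigmoid(z):
    # Logistic function: maps any real logit into [0, 1] (open interval up to float rounding)
    return 1.0 / (1.0 + np.exp(-z))

rng = np.random.default_rng(0)
logits = rng.normal(scale=10.0, size=(1000, 1))  # made-up raw outputs of the final Dense(1) layer
y_true = rng.uniform(0.0, 1.0, size=(1000, 1))   # made-up targets scaled to [0, 1]

y_pred = sigmoid(logits)
mae = np.mean(np.abs(y_true - y_pred))
print(f"MAE = {mae:.3f}")  # cannot exceed 1 while both arrays stay inside [0, 1]
```

So a single-output model behaving as expected is consistent with this bound; a much larger value points at a mismatch between outputs and targets rather than at the sigmoid.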

Is this a bug, or am I doing something wrong?

```python
import tensorflow as tf

optimizer = tf.keras.optimizers.Adam(learning_rate=1e-5)
mae_loss = tf.keras.losses.MeanAbsoluteError()
rmse_metric = tf.keras.metrics.RootMeanSquaredError()

inputs = tf.keras.layers.Input(shape=(IMG_SIZE, IMG_SIZE, CHANNELS))
model = tf.keras.applications.vgg16.VGG16(include_top=False, input_tensor=inputs,
                                          weights='imagenet')

# Freeze the pretrained weights if needed
model.trainable = True

# Rebuild top
x = tf.keras.layers.GlobalAveragePooling2D(name='avg_pool')(model.output)
x = tf.keras.layers.BatchNormalization()(x)
top_dropout_rate = 0.0  # adjustable dropout
x = tf.keras.layers.Dropout(top_dropout_rate, name='top_dropout')(x)
x = tf.keras.layers.Dense(512, activation='relu', name='dense_top_1')(x)

# Two single-node heads: unbounded regression and 0-1 regression
output_1 = tf.keras.layers.Dense(1, activation='linear', name='output_1')(x)
output_2 = tf.keras.layers.Dense(1, activation='sigmoid', name='output_2')(x)

model = tf.keras.Model(inputs, [output_1, output_2],
                       name='VGG16_modified')

model.compile(optimizer=optimizer, loss=mae_loss, metrics=rmse_metric)

# y_train has shape (N, 2): one column per target
model.fit(X_train, y_train, batch_size=16, epochs=epochs, validation_data=[X_val, y_val], verbose=1)
```

I have also tried to compile explicitly with two separate losses:

```python
model.compile(optimizer=optimizer, loss=[mae_loss, mae_loss], metrics=[rmse_metric, rmse_metric])
```

Example targets:

```
[[2.05e+02 7.45e-01]
 [1.33e+02 1.46e-01]
 [8.00e+01 2.77e-01]
 [8.30e+01 4.29e-01]
 [9.80e+01 1.50e-01]
 [6.10e+01 3.10e-01]
 [1.00e+02 4.09e-01]
 [2.20e+02 9.17e-01]
 [1.20e+02 1.52e-01]]
```

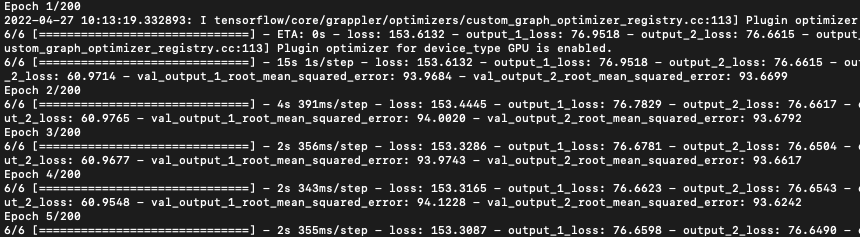

Terminal outputs (partly cropped, but you get the idea (it shouldn't be possible for loss #2 to be >76 given the sigmoid function and above targets):

TensorFlow v2.8.0

Answer:

Your model expects 2 targets (one for output_1 and one for output_2), while you pass only the single array y_train as the target in model.fit.
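A NumPy sketch of what plausibly goes wrong, assuming Keras hands the whole two-column y_train to each output and that MAE, like `tf.keras.losses.MeanAbsoluteError`, broadcasts and then averages |y_true - y_pred| over the last axis. The targets are the example rows from the question; the prediction for output_2 is hypothetical and deliberately set to the perfect value, so any nonzero loss comes only from the mismatched target.

```python
import numpy as np

# Example targets from the question: column 0 is Target #1, column 1 is Target #2
y_train = np.array([
    [2.05e+02, 7.45e-01],
    [1.33e+02, 1.46e-01],
    [8.00e+01, 2.77e-01],
    [8.30e+01, 4.29e-01],
    [9.80e+01, 1.50e-01],
    [6.10e+01, 3.10e-01],
    [1.00e+02, 4.09e-01],
    [2.20e+02, 9.17e-01],
    [1.20e+02, 1.52e-01],
])

def mae(y_true, y_pred):
    # Broadcast, take |error|, average over the last axis, then over the batch
    return np.mean(np.mean(np.abs(y_true - y_pred), axis=-1))

# Hypothetical "perfect" sigmoid output for Target #2, shape (N, 1)
pred_2 = y_train[:, [1]]

# (N, 2) target vs (N, 1) prediction: broadcasting also compares pred_2 with Target #1
loss_mismatched = mae(y_train, pred_2)
# Matching (N, 1) target slice, as suggested in the answer
loss_split = mae(y_train[:, [1]], pred_2)

print(f"mismatched target: {loss_mismatched:.2f}")
print(f"split target:      {loss_split:.2f}")
```

With these rows the mismatched loss comes out around 60.9 even though the prediction is perfect, while the split version is exactly 0. This would also explain why `loss=[mae_loss, mae_loss]` made no difference: the losses were presumably already applied per output, and it was the targets that did not line up.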

You should fit your model by passing two separate targets, like this:

```python
model.fit(X_train, [y_train[:,[0]],y_train[:,[1]]], ...)
```
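The `[:, [0]]` indexing keeps each slice two-dimensional, with shape (N, 1) like the outputs of the `Dense(1)` heads, whereas `[:, 0]` gives shape (N,). The validation targets presumably need the same split. Below is a small sketch with a hypothetical helper `split_targets` and a few rows taken from the example targets; the commented `model.fit` line assumes the question's `model`, `X_train`, `X_val` and `epochs`.

```python
import numpy as np

def split_targets(y):
    # Hypothetical helper: turn an (N, 2) target matrix into one (N, 1) array per output
    return [y[:, [0]], y[:, [1]]]

# A few rows from the question's example targets, split into train/validation
y_train = np.array([[205.0, 0.745], [133.0, 0.146], [80.0, 0.277]])
y_val = np.array([[83.0, 0.429], [98.0, 0.150]])

train_targets = split_targets(y_train)
val_targets = split_targets(y_val)

print([t.shape for t in train_targets])  # [(3, 1), (3, 1)]
print(y_train[:, 0].shape)               # (3,): a plain integer index drops the axis

# With the model from the question, the fit call would then look like:
# model.fit(X_train, train_targets, batch_size=16, epochs=epochs,
#           validation_data=(X_val, val_targets), verbose=1)
```

For a functional model like this one, Keras should also accept a dict keyed by the output layer names (`'output_1'`, `'output_2'`) instead of a list, which makes the pairing of targets and outputs explicit.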