I am trying to build a simple two-layer network with 2 inputs and 1 output. The code is as follows:

num_input = 2

num_output = 1

# Input

x1 = torch.rand(1, 2)

# Weights

W1 = torch.rand(2,3)

W2 = torch.rand(3,1)

# biases

b1 = torch.rand(1,3)

b2 = torch.rand(1,1)

# Activation function

def f(inp):

inp[inp >= 0] = 1

inp[inp < 0] = 0

return inp

# Predict output

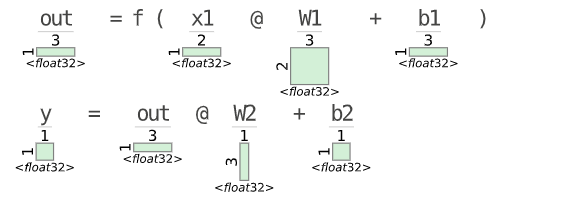

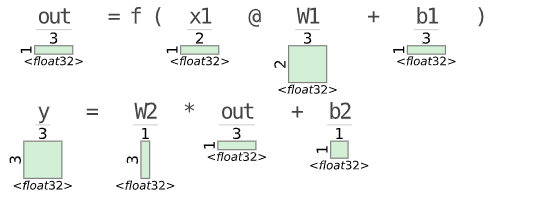

out = f(torch.mm(x1, W1) b1)

y=W2*out b2

print(y)

# Check solution

assert list(y.size()) == [1, num_output], f"Incorrect output size ({y.size()})"

print("nice!")

With this code I always get an incorrect output size. Can anyone give me a hint on how to get the correct output size?

Answer:

```python
y = out @ W2 + b2
```

You were doing elementwise multiplication (`*`) instead of matrix multiplication, so the output did not end up with the size you wanted.
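One way to see the difference is to compare the shapes the two operators produce. This is a minimal sketch that reuses the question's shapes with random values; `elementwise` and `matmul` are illustrative names, not from the original code.

```python
import torch

# Shapes taken from the question: out is the (1, 3) hidden layer, W2 is (3, 1)
out = torch.rand(1, 3)
W2 = torch.rand(3, 1)
b2 = torch.rand(1, 1)

# Elementwise product: broadcasting stretches (3, 1) and (1, 3) to (3, 3)
elementwise = W2 * out + b2
print(elementwise.shape)  # torch.Size([3, 3])

# Matrix product: (1, 3) @ (3, 1) -> (1, 1)
matmul = out @ W2 + b2
print(matmul.shape)  # torch.Size([1, 1])
```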

To be clear, Python 3.5 and above support the `@` operator, which here does the same thing as `torch.mm()`: matrix multiplication.
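As a small check, assuming 2-D tensors like the ones in this question, `@` and `torch.mm()` give the same result. One caveat: on tensors, `@` dispatches to `torch.matmul`, which also accepts 1-D and batched inputs, whereas `torch.mm` only accepts 2-D matrices. The tensor names below are hypothetical.

```python
import torch

a = torch.rand(1, 3)
b = torch.rand(3, 1)

# For 2-D tensors, the @ operator (torch.matmul) and torch.mm compute the same matrix product
via_operator = a @ b
via_mm = torch.mm(a, b)

print(torch.allclose(via_operator, via_mm))  # True
```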

Dimensions (now):

Now the (1,2) input is matrix-multiplied by the (2,3) weights and the (1,3) bias is added, giving shape (1,3). Matrix-multiplying that (1,3) result by the (3,1) weights gives (1,1), and adding the (1,1) bias keeps the final output size at (1,1).
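The shape trace above, applied to the question's code with only the second-layer line changed, looks roughly like this. It is a sketch that keeps the question's variables and step function as-is; the intermediate name `hidden` is added for readability.

```python
import torch

num_output = 1

x1 = torch.rand(1, 2)   # (1, 2) input
W1 = torch.rand(2, 3)   # (2, 3) first-layer weights
W2 = torch.rand(3, 1)   # (3, 1) second-layer weights
b1 = torch.rand(1, 3)   # (1, 3) first-layer bias
b2 = torch.rand(1, 1)   # (1, 1) second-layer bias

def f(inp):
    # Step activation from the question (modifies inp in place and returns it)
    inp[inp >= 0] = 1
    inp[inp < 0] = 0
    return inp

hidden = torch.mm(x1, W1) + b1   # (1, 2) @ (2, 3) + (1, 3) -> (1, 3)
out = f(hidden)                  # activation keeps the shape: (1, 3)
y = out @ W2 + b2                # (1, 3) @ (3, 1) + (1, 1) -> (1, 1)

assert list(y.size()) == [1, num_output], f"Incorrect output size ({y.size()})"
print(y.shape)  # torch.Size([1, 1])
```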

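Dimensions (before):

Previously, `W2 * out` multiplied a (3,1) tensor by a (1,3) tensor elementwise. Broadcasting stretched both to (3,3), and adding the (1,1) bias kept the result at (3,3), which is why the assertion failed.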

Side note:

You can also use `nn.Linear` to do all of these operations, without creating and initializing the weights and biases by hand.
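A minimal sketch of the same network built from `nn.Linear` layers, assuming a hidden width of 3 to match the question's `W1`/`W2`. `nn.Linear(in_features, out_features)` stores its weight with shape (out_features, in_features) and computes `x @ weight.T + bias`, so the layout is handled for you. PyTorch initializes these weights itself rather than with `torch.rand`, so the numbers will differ. The step activation is written here as `(inp >= 0).float()`, a non-mutating substitute for the question's `f` that gives the same 0/1 step; that rewrite is an assumption of this sketch, not part of the original answer.

```python
import torch
from torch import nn

num_input = 2
num_output = 1
num_hidden = 3  # hidden width assumed from the question's W1/W2 shapes

# nn.Linear creates and initializes its own weight and bias
layer1 = nn.Linear(num_input, num_hidden)
layer2 = nn.Linear(num_hidden, num_output)

def f(inp):
    # Step activation, written without in-place modification (assumed substitute)
    return (inp >= 0).float()

x1 = torch.rand(1, num_input)
y = layer2(f(layer1(x1)))

assert list(y.size()) == [1, num_output], f"Incorrect output size ({y.size()})"
print(y.shape)  # torch.Size([1, 1])
```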