I am trying to do a perspective transformation with OpenCV in Python. I want to align the image and find the coordinates of the top-left, top-right, bottom-left, and bottom-right contours. Here is my code so far, which finds and draws all the contours.

gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

blur = cv2.GaussianBlur(gray,(3,3),0)

edges = cv2.Canny(blur,50,100)

contours, hierarchy = cv2.findContours(edges,cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE) #find contours

cv2.drawContours(img,contours,-1,(0,255,0),2)

cv2.imshow('Contours',img)

cv2.waitKey(0)

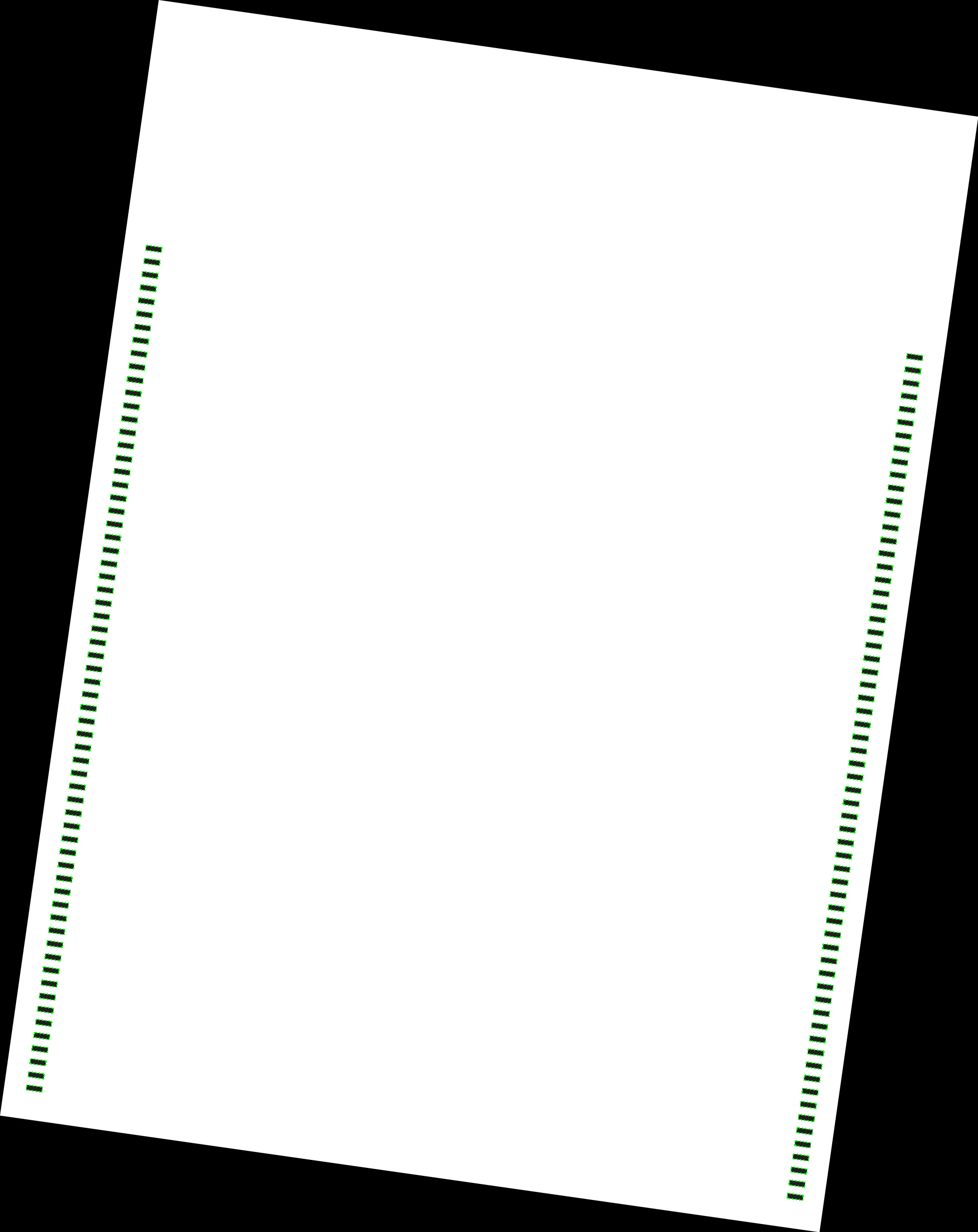

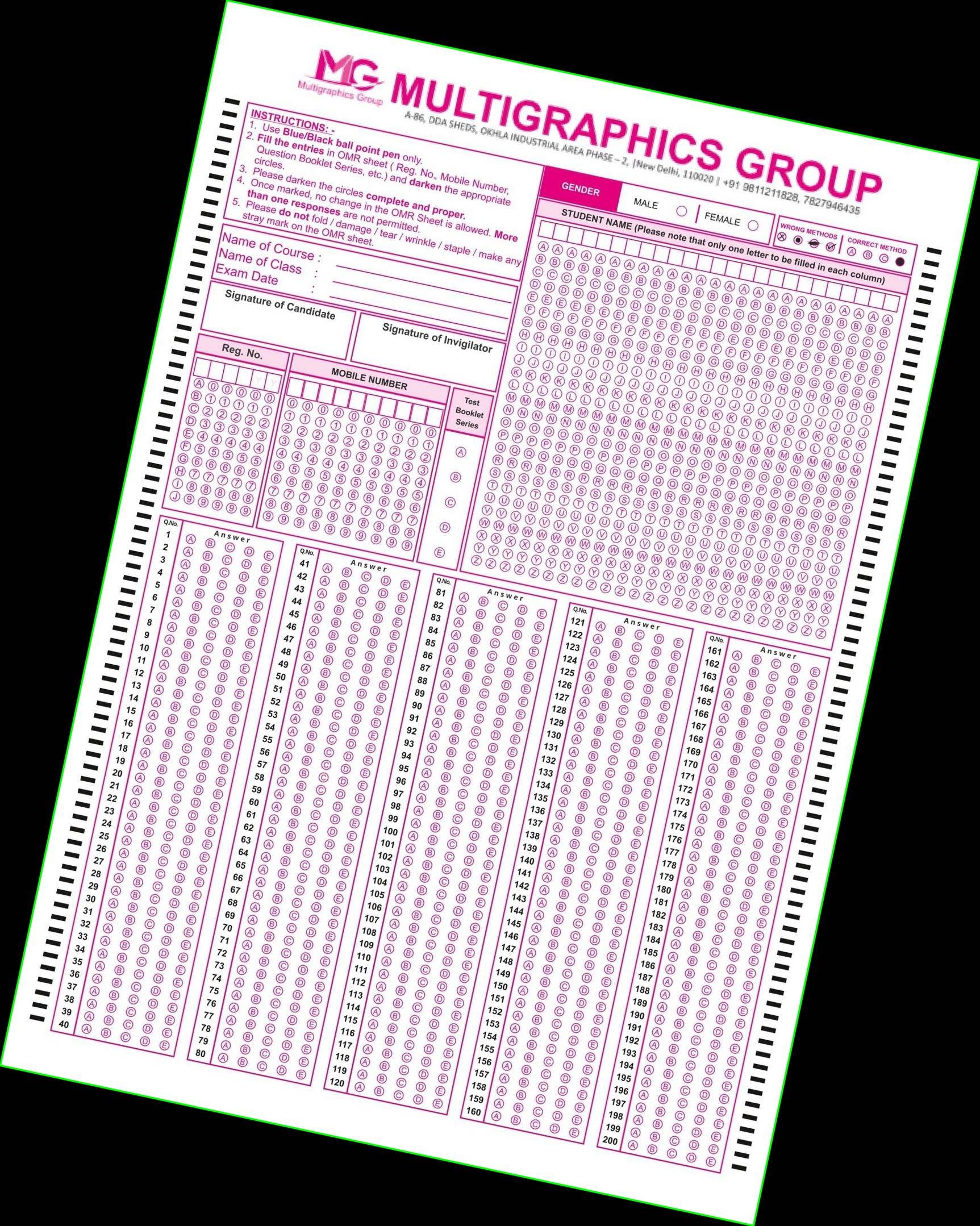

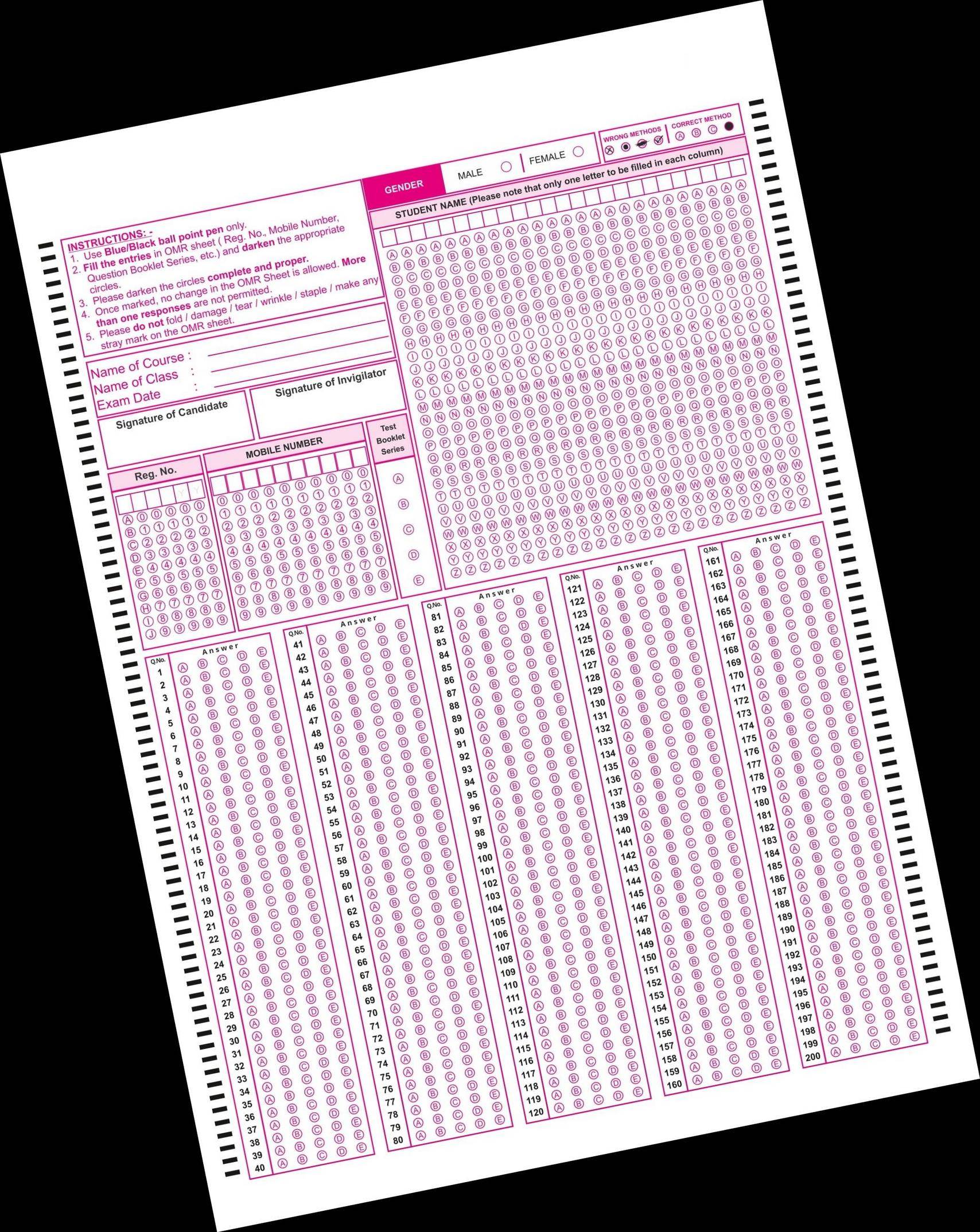

Here is an image of what I get.

I'd be very thankful if you could try to help me solve this problem!

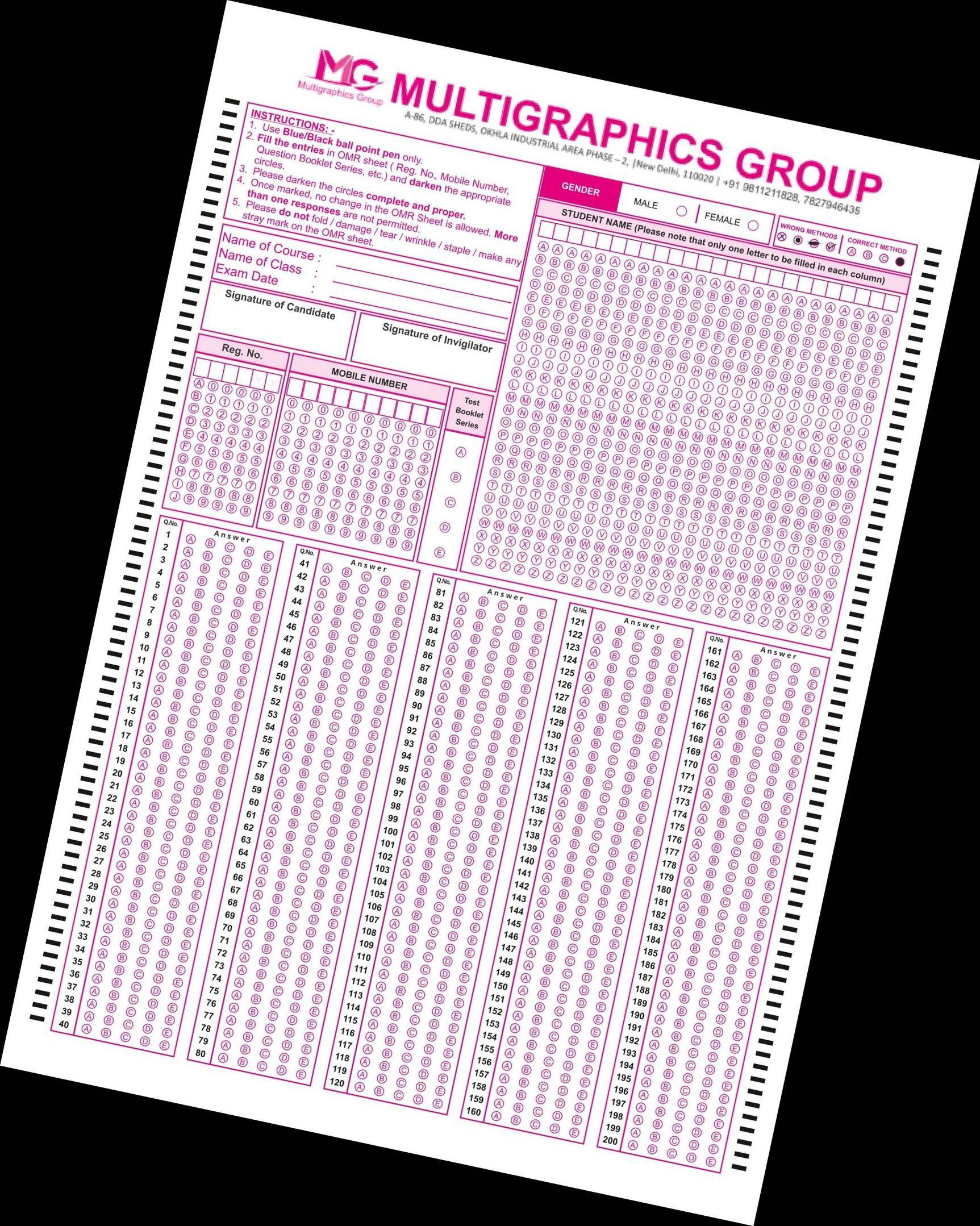

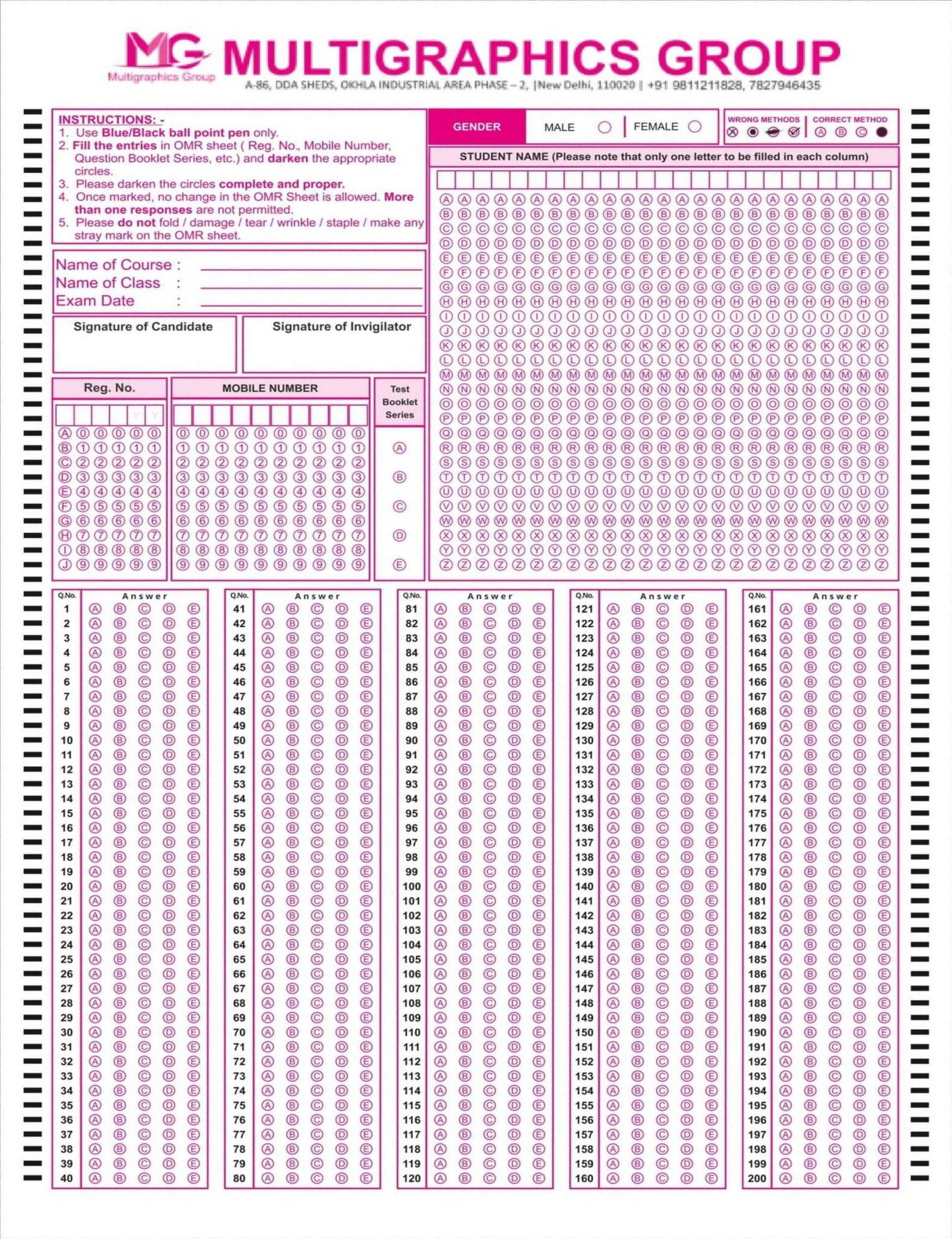

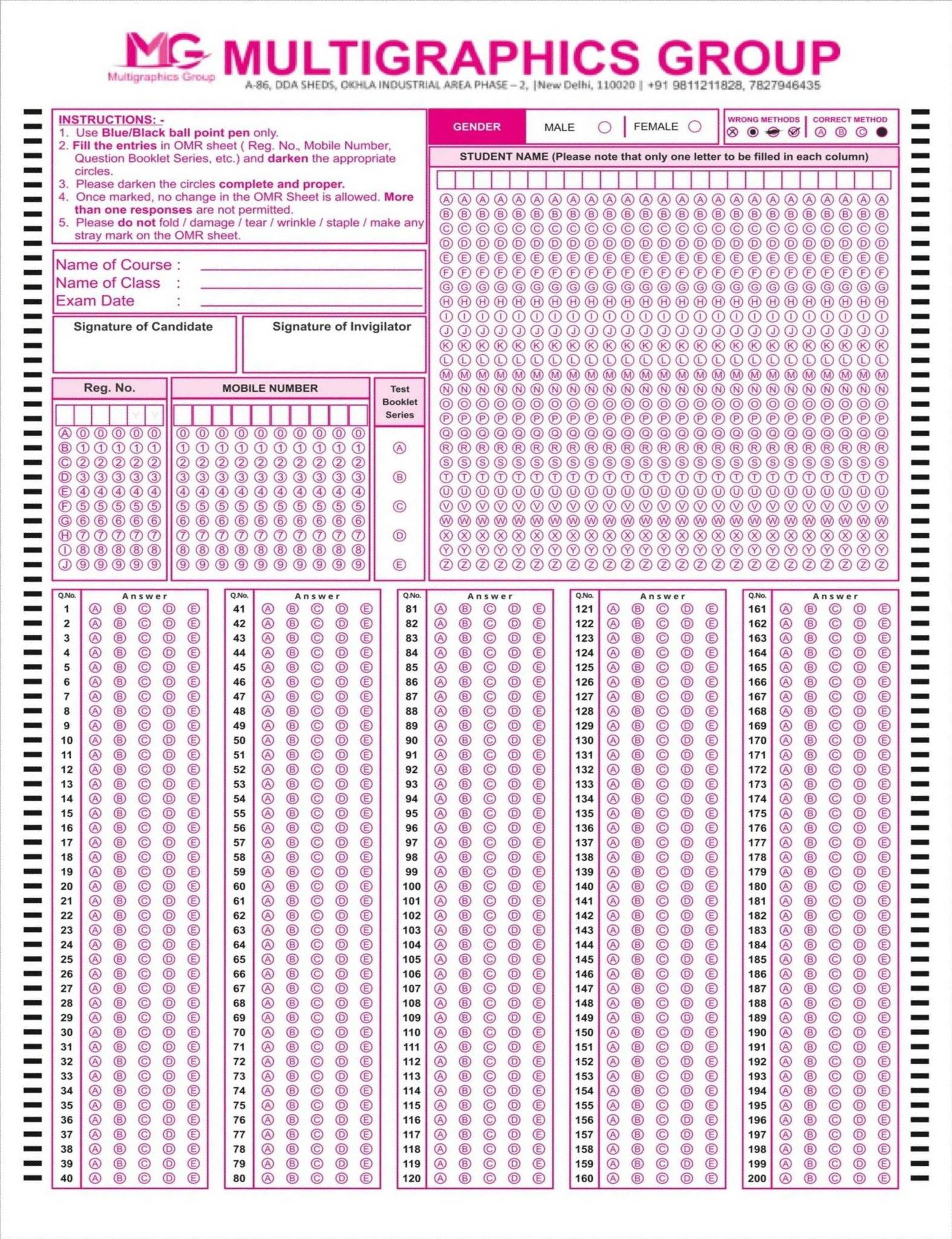

Template:

import cv2

import numpy as np

# read image

img = cv2.imread("omr_test.jpg")

hh, ww = img.shape[:2]

# read template

template = cv2.imread("omr_template.jpg")

ht, wd = template.shape[:2]

# convert img to grayscale

gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

# do otsu threshold on gray image

thresh = cv2.threshold(gray, 0, 255, cv2.THRESH_BINARY + cv2.THRESH_OTSU)[1]

# pad thresh with black to preserve corners when applying morphology

pad = cv2.copyMakeBorder(thresh, 20, 20, 20, 20, borderType=cv2.BORDER_CONSTANT, value=0)

# apply morphology

kernel = cv2.getStructuringElement(cv2.MORPH_ELLIPSE, (15,15))

morph = cv2.morphologyEx(pad, cv2.MORPH_CLOSE, kernel)

# remove padding

morph = morph[20:hh+20, 20:ww+20]

# get largest external contour

contours = cv2.findContours(morph, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)

contours = contours[0] if len(contours) == 2 else contours[1]

big_contour = max(contours, key=cv2.contourArea)

# get perimeter and approximate a polygon

peri = cv2.arcLength(big_contour, True)

corners = cv2.approxPolyDP(big_contour, 0.04 * peri, True)

# draw polygon on input image from detected corners

polygon = img.copy()

cv2.polylines(polygon, [corners], True, (0,255,0), 2, cv2.LINE_AA)

# Alternate: cv2.drawContours(polygon, [corners], 0, (0,0,255), 1)

# print the number of found corners and the corner coordinates

# They seem to be listed counter-clockwise from the topmost corner

print(len(corners))

print(corners)

# reformat input corners to x,y list

icorners = []

for corner in corners:

    pt = [ corner[0][0],corner[0][1] ]

    icorners.append(pt)

icorners = np.float32(icorners)

# get corresponding output corners from width and height

ocorners = [ [0,0], [0,ht], [wd,ht], [wd,0] ]

ocorners = np.float32(ocorners)

# get perspective transformation matrix

M = cv2.getPerspectiveTransform(icorners, ocorners)

# do perspective

warped = cv2.warpPerspective(img, M, (wd, ht))

# write results

cv2.imwrite("omr_test_thresh.jpg", thresh)

cv2.imwrite("omr_test_morph.jpg", morph)

cv2.imwrite("omr_test_polygon.jpg", polygon)

cv2.imwrite("omr_test_warped.jpg", warped)

# display it

cv2.imshow("thresh", thresh)

cv2.imshow("pad", pad)

cv2.imshow("morph", morph)

cv2.imshow("polygon", polygon)

cv2.imshow("warped", warped)

cv2.waitKey(0)

Threshold Image:

Morphology Image:

Polygon Image:

Warped Input:

CodePudding user response:

Here is a correction in Python/OpenCV that works whether the input image is rotated clockwise (cw) or counter-clockwise (ccw).

- Read the input

- Read the template (for its dimension)

- Convert the input to gray and threshold

- Pad the thresholded image (to preserve the corners when applying morphology)

- Apply morphology close

- Remove the padding

- Get the largest external contour

- Get its perimeter and approximate to 4 corners as the input corners for the warping

- Sort the corners by Y and compare the X values of the first two sorted points. If diff=X2-X1 is negative, then the output points need to be listed in a different order.

- Get the output corners for the warping from the dimensions of the template and the diff of the sorted points.

- Get the perspective transformation matrix

- Warp the input to match the template

- Save the results
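The corner-matching rule in the steps above can be isolated into a small helper. This is a minimal sketch in plain Python. It assumes, as the code comments in this answer note, that the four corners arrive in counter-clockwise order starting from the topmost one. The function name `choose_output_corners` and the sample coordinates are hypothetical and only for illustration.

```python
def choose_output_corners(corners, wd, ht):
    """Pick the output corner order that matches the input corner order.

    corners: four (x, y) points, assumed counter-clockwise from the topmost.
    wd, ht:  width and height of the template (the warp target size).
    """
    # Sort a copy by y so the two topmost points come first.
    by_y = sorted(corners, key=lambda p: p[1])
    diff = by_y[1][0] - by_y[0][0]

    if diff >= 0:
        # Topmost point is the top-left corner: TL, BL, BR, TR.
        return [[0, 0], [0, ht], [wd, ht], [wd, 0]]
    # Topmost point is the top-right corner: TR, TL, BL, BR.
    return [[wd, 0], [0, 0], [0, ht], [wd, ht]]


# Hypothetical corner lists, one for each tilt direction.
first = [(10, 5), (2, 40), (60, 50), (70, 12)]
second = [(50, 3), (5, 10), (12, 60), (58, 52)]
print(choose_output_corners(first, 100, 200))
print(choose_output_corners(second, 100, 200))
```

The returned list can be converted with `np.float32` and passed to `cv2.getPerspectiveTransform` together with the input corners in their original order.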

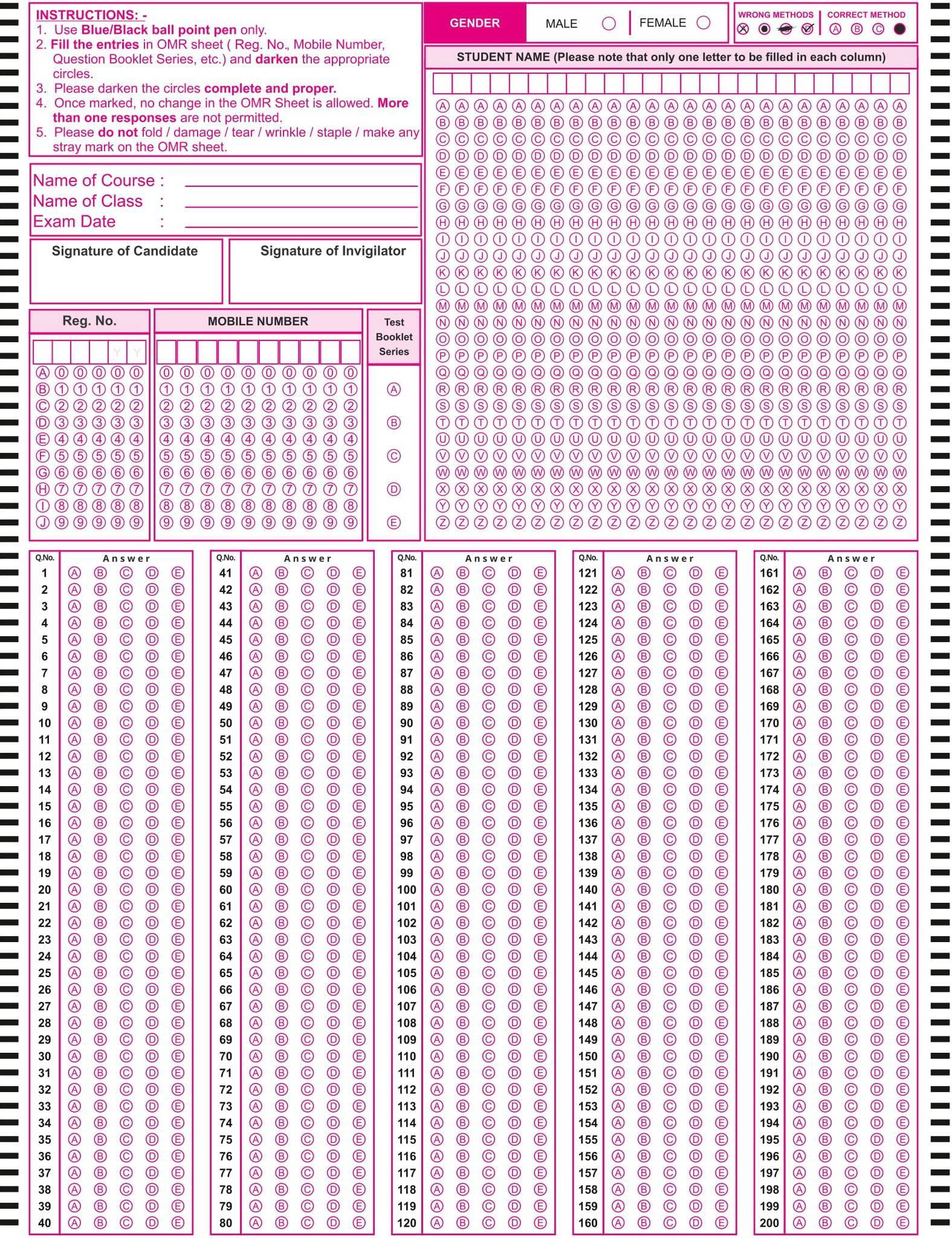

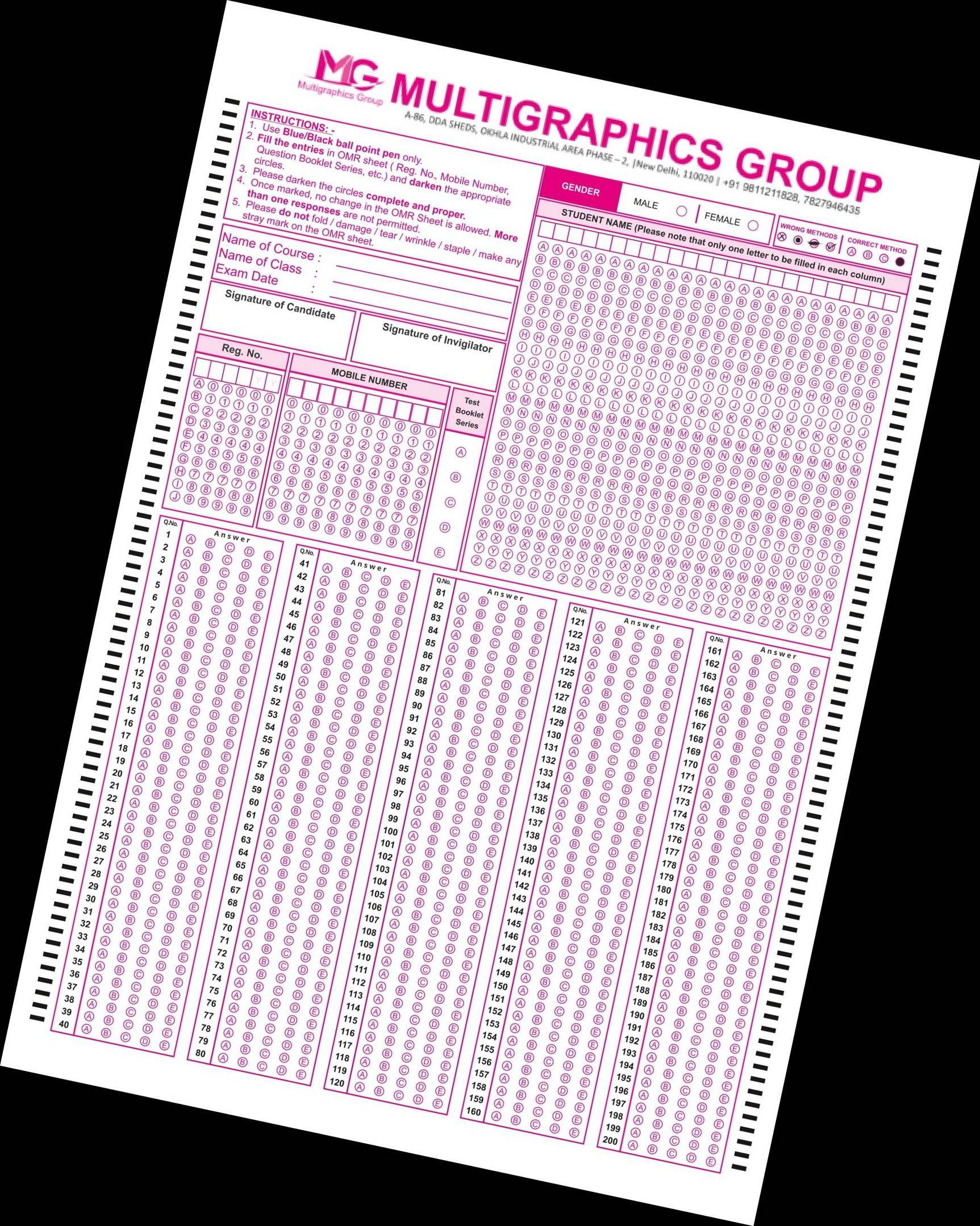

Input 1 (cw rotated):

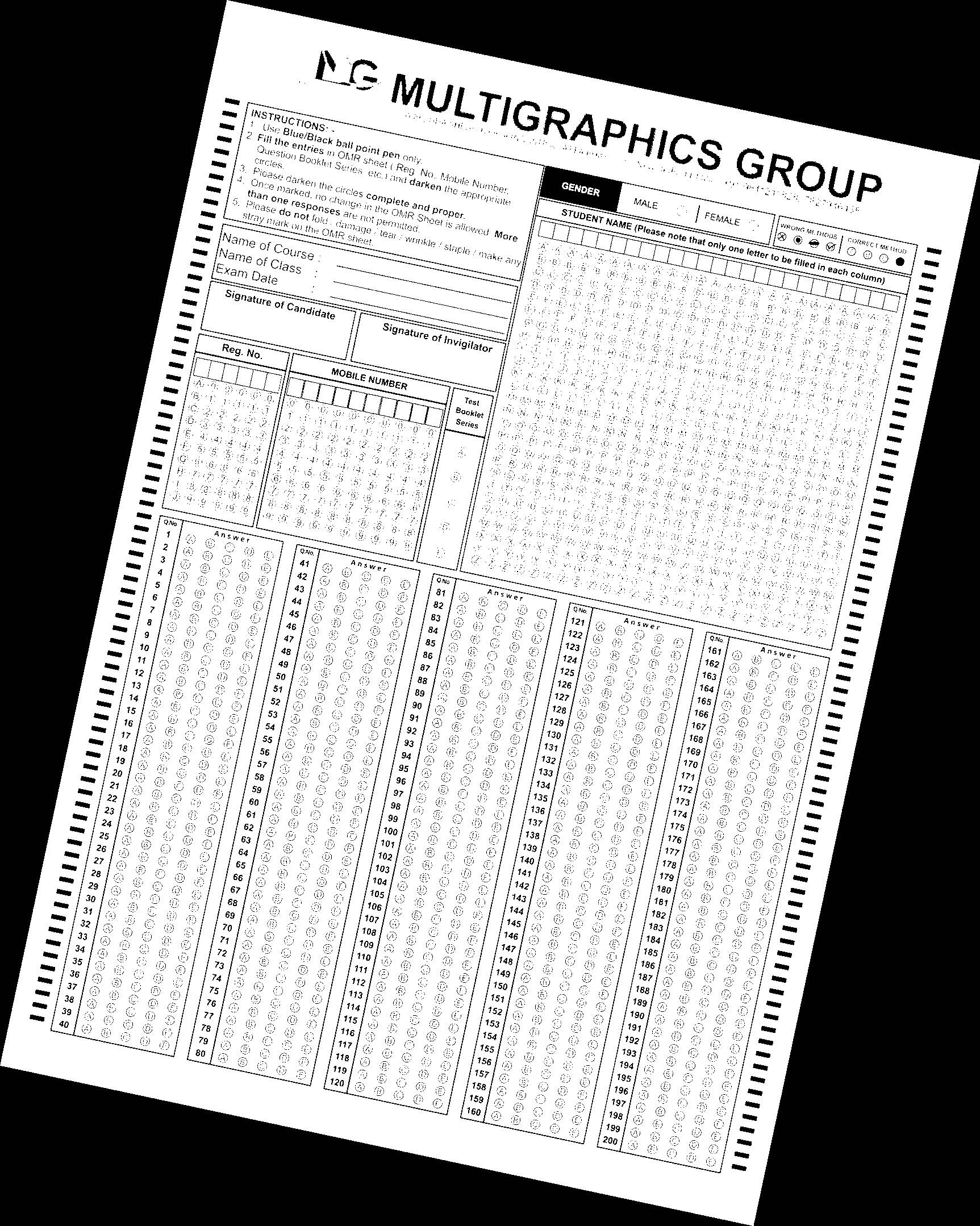

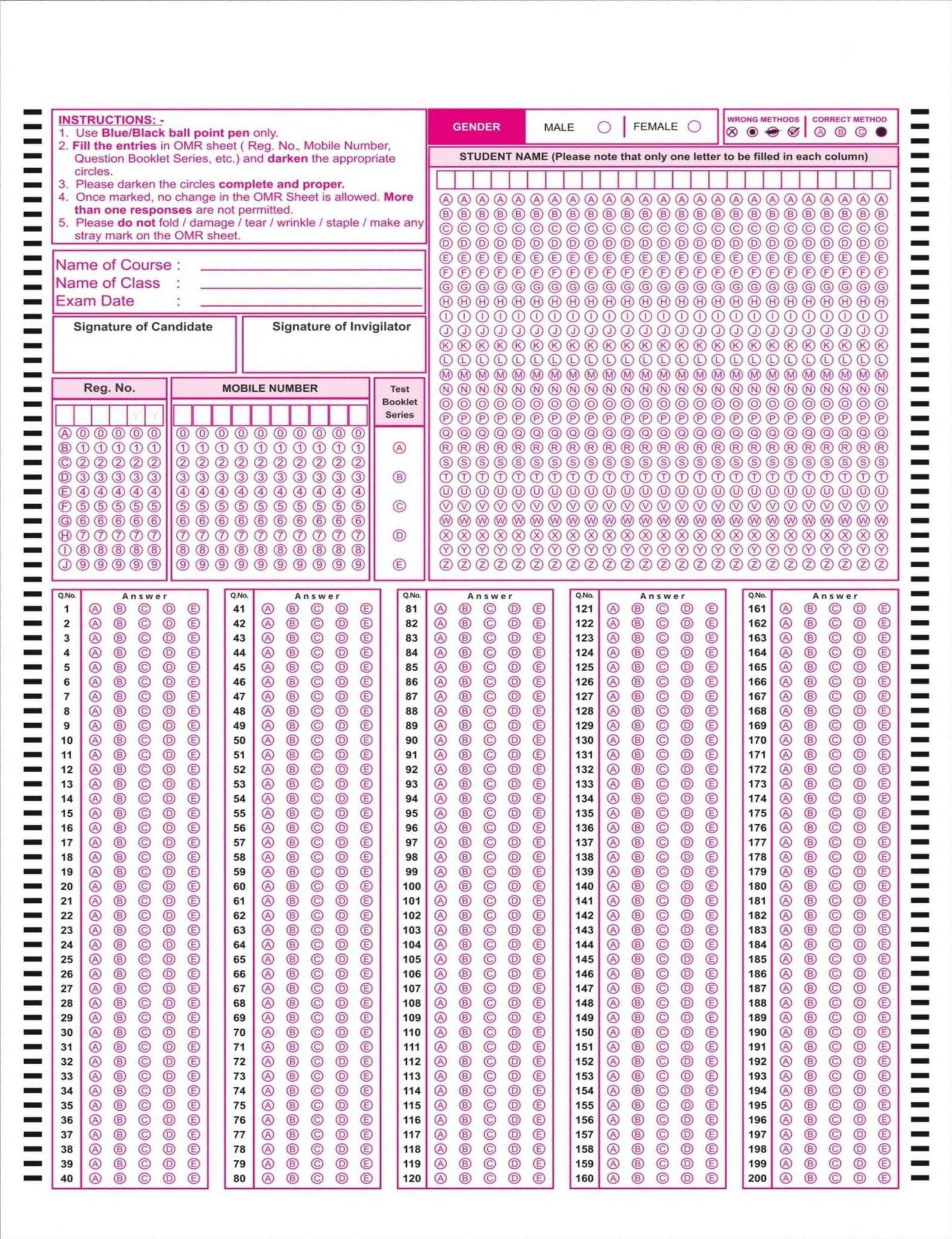

Input 2 (ccw rotated):

import cv2

import numpy as np

# read image

#img = cv2.imread("omr_test.jpg")

img = cv2.imread("omr_test2.jpg")

hh, ww = img.shape[:2]

# read template

template = cv2.imread("omr_template.jpg")

ht, wd = template.shape[:2]

# convert img to grayscale

gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

# do otsu threshold on gray image

thresh = cv2.threshold(gray, 0, 255, cv2.THRESH_BINARY + cv2.THRESH_OTSU)[1]

# pad thresh with black to preserve corners when applying morphology

pad = cv2.copyMakeBorder(thresh, 20, 20, 20, 20, borderType=cv2.BORDER_CONSTANT, value=0)

# apply morphology

kernel = cv2.getStructuringElement(cv2.MORPH_ELLIPSE, (15,15))

morph = cv2.morphologyEx(pad, cv2.MORPH_CLOSE, kernel)

# remove padding

morph = morph[20:hh+20, 20:ww+20]

# get largest external contour

contours = cv2.findContours(morph, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)

contours = contours[0] if len(contours) == 2 else contours[1]

big_contour = max(contours, key=cv2.contourArea)

# get perimeter and approximate a polygon

peri = cv2.arcLength(big_contour, True)

corners = cv2.approxPolyDP(big_contour, 0.04 * peri, True)

# draw polygon on input image from detected corners

polygon = img.copy()

cv2.polylines(polygon, [corners], True, (0,255,0), 2, cv2.LINE_AA)

# print the number of found corners and the corner coordinates

# They seem to be listed counter-clockwise from the topmost corner

print(len(corners))

print(corners)

# reformat input corners to x,y list

sortcorners = []

for corner in corners:

    pt = [ corner[0][0],corner[0][1] ]

    sortcorners.append(pt)

icorners = np.float32(sortcorners)

# sort corners on y

def takeSecond(elem):

    return elem[1]

sortcorners.sort(key=takeSecond)

# check if second corner x is left or right of first corner x

x1 = sortcorners[0][0]

x2 = sortcorners[1][0]

diff = x2 - x1

print(x1, x2)

# get corresponding output corners from width and height

if diff >= 0:

    ocorners = [ [0,0], [0,ht], [wd,ht], [wd,0] ]

else:

    ocorners = [ [wd,0], [0,0], [0,ht], [wd,ht]]

ocorners = np.float32(ocorners)

# get perspective transformation matrix

M = cv2.getPerspectiveTransform(icorners, ocorners)

# do perspective

warped = cv2.warpPerspective(img, M, (wd, ht))

# write results

cv2.imwrite("omr_test2_thresh.jpg", thresh)

cv2.imwrite("omr_test2_morph.jpg", morph)

cv2.imwrite("omr_test2_polygon.jpg", polygon)

cv2.imwrite("omr_test2_warped.jpg", warped)

# display it

cv2.imshow("thresh", thresh)

cv2.imshow("pad", pad)

cv2.imshow("morph", morph)

cv2.imshow("polygon", polygon)

cv2.imshow("warped", warped)

cv2.waitKey(0)

Result for first input (cw rotated):

Result for second input (ccw rotated):
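The code above depends on the order in which `cv2.approxPolyDP` returns the corners. A different, commonly used technique (not the one used in this answer) labels each corner from its coordinate sum and difference, so the starting point of the contour does not matter. Below is a minimal sketch in plain Python, assuming the page is tilted by well under 45 degrees; `order_corners` is a hypothetical helper name.

```python
def order_corners(points):
    """Return four (x, y) points ordered as TL, TR, BR, BL.

    Assumes image coordinates (y grows downward) and a tilt well below
    45 degrees, so that each corner is extreme in x + y or in y - x.
    """
    top_left = min(points, key=lambda p: p[0] + p[1])      # smallest x + y
    bottom_right = max(points, key=lambda p: p[0] + p[1])  # largest x + y
    top_right = min(points, key=lambda p: p[1] - p[0])     # smallest y - x
    bottom_left = max(points, key=lambda p: p[1] - p[0])   # largest y - x
    return [top_left, top_right, bottom_right, bottom_left]


# Hypothetical corners, given in an arbitrary starting order.
corners = [(50, 3), (5, 10), (12, 60), (58, 52)]
print(order_corners(corners))
```

With the input corners ordered this way, the matching output corners would always be `[[0,0], [wd,0], [wd,ht], [0,ht]]`, and no cw/ccw test is needed.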