I have a small program running on x64 that calls the system function with a parameter that is long enough that, as I understand it, it will be passed to the function on the stack.

    #include <stdlib.h>

    int main(void) {
        char command[] = "/bin/sh -c whoami";

        system(command);

        return EXIT_SUCCESS;
    }

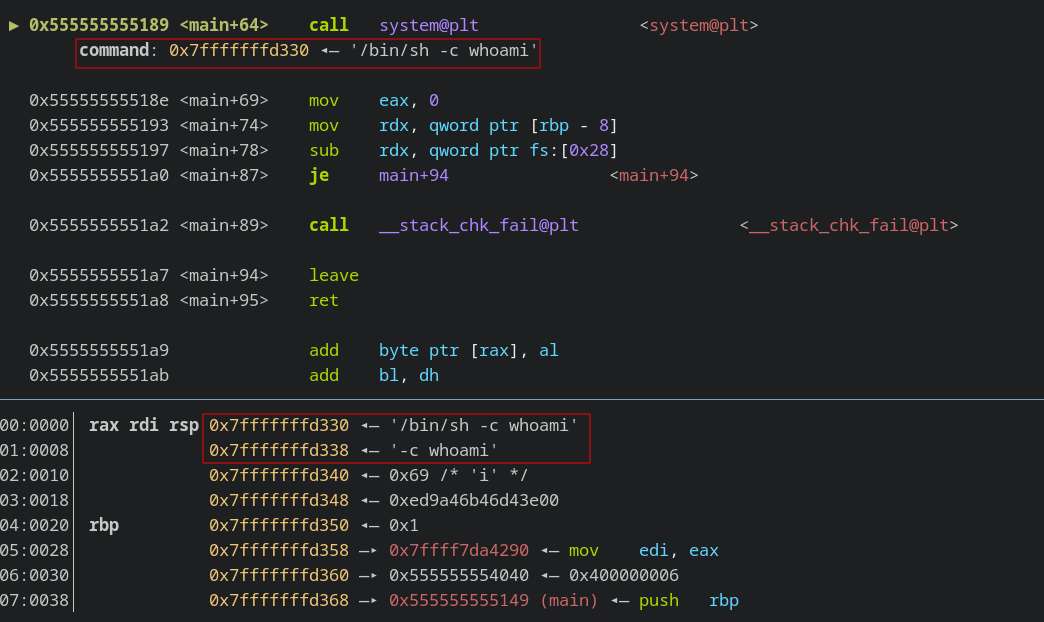

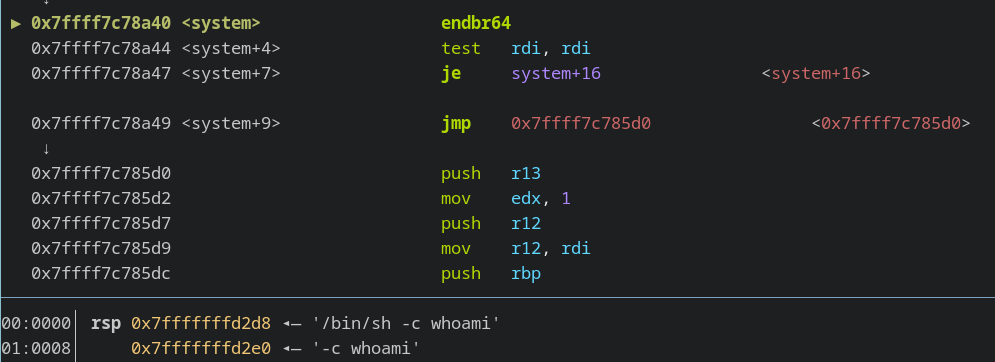

When I check what is happening in GDB, I can confirm that my parameter is on the stack and spans 2 words.

I wonder how the CPU knows that it needs to read 2 words and not continue past them. What delimits the function parameter from the rest?

I am asking because I am working on a buffer overflow, and although I have the same layout on the stack, the CPU picks up only one word (/bin/sh ) instead of the 2 words I want. The output is: sh: line 1: $'Ћ\310\367\377\177': command not found

CodePudding user response:

> How does processor know how much to read from the stack for function parameters (x64)

The CPU does not know. By that, I mean it does not receive an instruction that says "retrieve the next argument from the stack, whatever the appropriate size may be." It receives instructions to retrieve data of a specific size from a specific place, and to operate on that data, or put it in a register, or store it in some other place. Those instructions are generated by the compiler, based on the program source code, and they are part of the program binary.

> I wonder how the CPU knows that it needs to read 2 words and not continue past them. What delimits the function parameter from the rest?

Nothing delimits one function parameter from the next -- neither on the stack nor generally. Programs do not (generally) figure out such things on the fly by introspecting the data. Instead, functions require parameters to be set up in a particular way, which is governed by a set of conventions called an "Application Binary Interface" (ABI), and they operate on the assumption that the data indeed are set up that way. If those assumptions turn out to be invalid then more or less anything can happen.

> I am asking because I am working on a buffer overflow, and although I have the same layout on the stack, the CPU picks up only one word (/bin/sh ) instead of the 2 words I want.

The number of words the function will consume from the stack and the significance it will attribute to them is characteristic of the function, not (generally) of the data on the stack.

CodePudding user response:

It depends on the calling convention used for the function. If you do not specify one, the compiler decides, and it can get creative, sometimes even eliminating the explicit call altogether as an optimization (for example, by inlining the function). Otherwise, you can learn precisely what to expect from the many sources of documentation that specify how those calling conventions are supposed to work.