For a DataFrame I need to convert blank strings ('', ' ', ...) to null values in a set of columns.

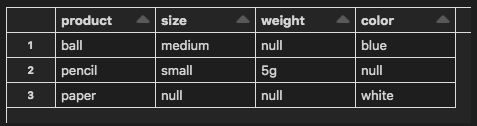

df = spark.createDataFrame([

('ball', 'medium', '', 'blue'),

('pencil', 'small', '5g', ''),

('paper', ' ', ' ', 'white')

], ['product', 'size', 'weight', 'color'])

I can do it for each column one by one, but its too lazy when we have many dataframes and columns.

from pyspark.sql.functions import when, trim, col

df = df \

.withColumn('size', when(trim(col('size')) == '', None).otherwise(col('size'))) \

.withColumn('weight', when(trim(col('weight')) == '', None).otherwise(col('weight'))) \

.withColumn('color', when(trim(col('color')) == '', None).otherwise(col('color')))

How should I do the transformation in a more generic way, avoiding code replication?

CodePudding user response:

What about this neater one :-)?

df.select('*',*[when(trim(df[x])=='',None).otherwise(df[x]).alias(F"{x}_new") for x in df.columns]).show()

Or even better

df.select(*[when(trim(df[x])=='',None).otherwise(df[x]).alias(x) for x in df.columns]).show()

------- ------ ------ -----

|product| size|weight|color|

------- ------ ------ -----

| ball|medium| null| blue|

| pencil| small| 5g| null|

| paper| null| null|white|

------- ------ ------ -----

CodePudding user response:

You can encapsulate in a function and add it to the DataFrame class

from pyspark.sql.functions import when, trim, col

from pyspark.sql.dataframe import DataFrame

def blank_to_null(self, *col_names):

for col_name in col_names:

self = self.withColumn(

col_name,

when(trim(col(col_name)) == '', None) \

.otherwise(col(col_name)))

return self

DataFrame.blank_to_null = blank_to_null

Very simple now

df = df.blank_to_null('size', 'weight', 'color')

A good practice to extend the DataFrame class is to declare an extension function and add it to a custom property

from pyspark.sql.functions import when, trim, col

from pyspark.sql.dataframe import DataFrame

# decorator to attach a function to an attribute

def add_attr(cls):

def decorator(func):

@wraps(func)

def _wrapper(*args, **kwargs):

f = func(*args, **kwargs)

return f

setattr(cls, func.__name__, _wrapper)

return func

return decorator

# DataFrame custom functions (blank_to_null)

def custom(self):

@add_attr(custom)

def blank_to_null(*col_names):

df = self

for col_name in col_names:

df = df.withColumn(

col_name,

when(trim(col(col_name)) == '', None) \

.otherwise(col(col_name)))

return df

return custom

# add new property to the Class pyspark.sql.DataFrame

DataFrame.custom = property(custom)

Still simple to use

df = df.custom.blank_to_null('size', 'weight', 'color')