For a project I want to detect braille dots on a plate. I take a picture and run my detection on it with the connectedComponentsWithStats function. Despite my attempts, I can never find a threshold value where all the dots, and only the dots, are detected. I have the same problem when I try circle detection. On the advice of a teacher I am now trying template matching, but I have problems with that detection too, since the only factor that influences it is the threshold.

import cv2 as cv
import matplotlib.pyplot as plt

img1 = cv.imread(r"traitement\prod.png")
plt.figure(figsize=(40,40))
plt.subplot(3,1,1)
gray_img = cv.cvtColor(img1, cv.COLOR_BGR2GRAY)
test = cv.adaptiveThreshold(gray_img, 255, cv.ADAPTIVE_THRESH_MEAN_C, cv.THRESH_BINARY_INV, 11, 6)
_, _, boxes, _ = cv.connectedComponentsWithStats(test)
boxes = boxes[1:]  # skip the first component (the background)
filtered_boxes = []
for x,y,w,h,pixels in boxes:
    # keep only components whose size and position look like a dot
    if pixels < 1000 and h < 35 and w < 35 and h > 14 and w > 14 and x > 15 and y > 15:
        filtered_boxes.append((x,y,w,h))
for x,y,w,h in filtered_boxes:
    W = int(w)/2
    H = int(h)/2
    #print(w)
    # mark the center of the bounding box
    cv.circle(img1,(x + int(W),y + int(H)),2,(0,255,0),20)
cv.imwrite("gray.png",gray_img)
cv.imwrite("test.png",test)
plt.imshow(test)
plt.subplot(3,1,2)
plt.imshow(img1)

import cv2 as cv
import numpy as np
from imutils.object_detection import non_max_suppression
import matplotlib.pyplot as plt

img = cv.imread('traitement/prod.png')
temp_gray = cv.imread('dot.png',0)
H, W = temp_gray.shape[:2]  # shape is (rows, cols), i.e. (height, width)
thresh = 0.6
img_gray = cv.cvtColor(img, cv.COLOR_BGR2GRAY)
match = cv.matchTemplate(image=img_gray, templ=temp_gray, method=cv.TM_CCOEFF_NORMED)
(y_points, x_points) = np.where(match >= thresh)
boxes = list()
for (x, y) in zip(x_points, y_points):
    # update our list of rectangles
    boxes.append((x, y, x + W, y + H))
boxes = non_max_suppression(np.array(boxes))
# loop over the final bounding boxes
for (x1, y1, x2, y2) in boxes:
    cv.circle(img,(x1 + int(W/2),y1 + int(H/2)),2,(255,0,0),15)
plt.figure(figsize=(40,40))
plt.subplot(3,1,1)
plt.imshow(img)

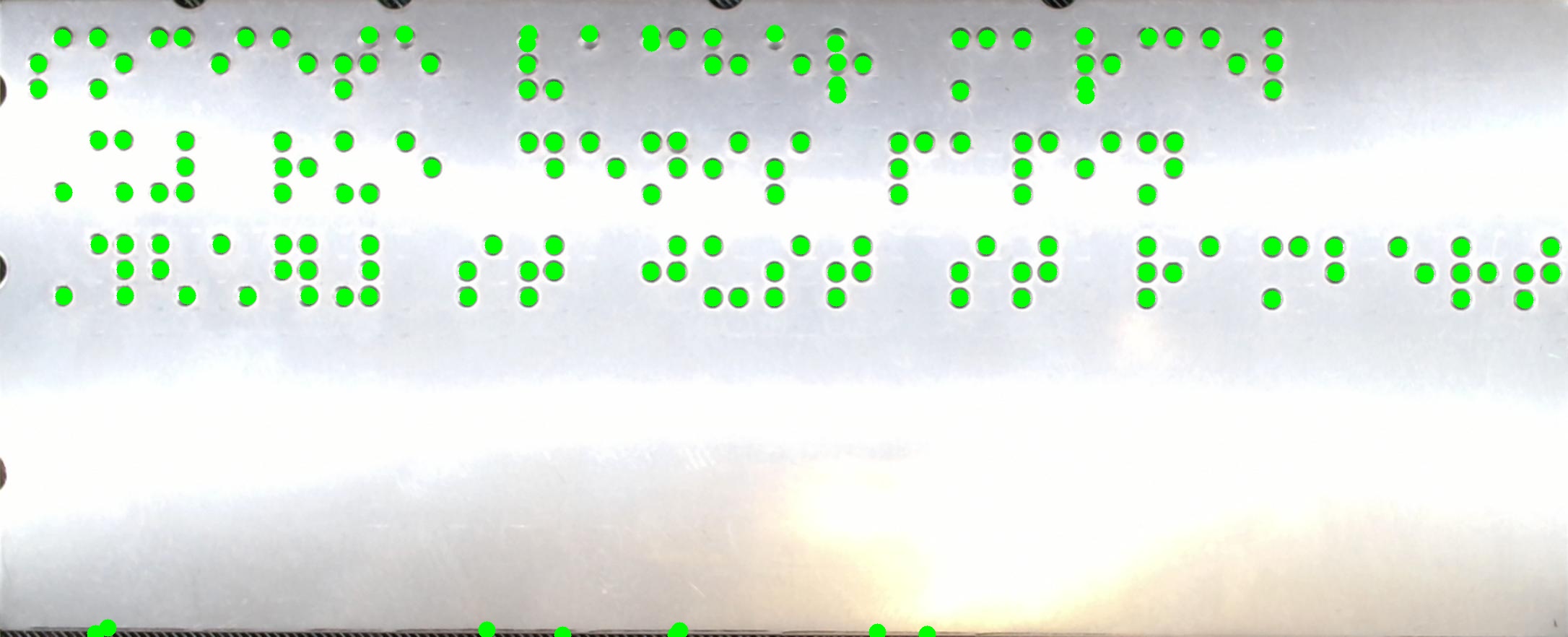

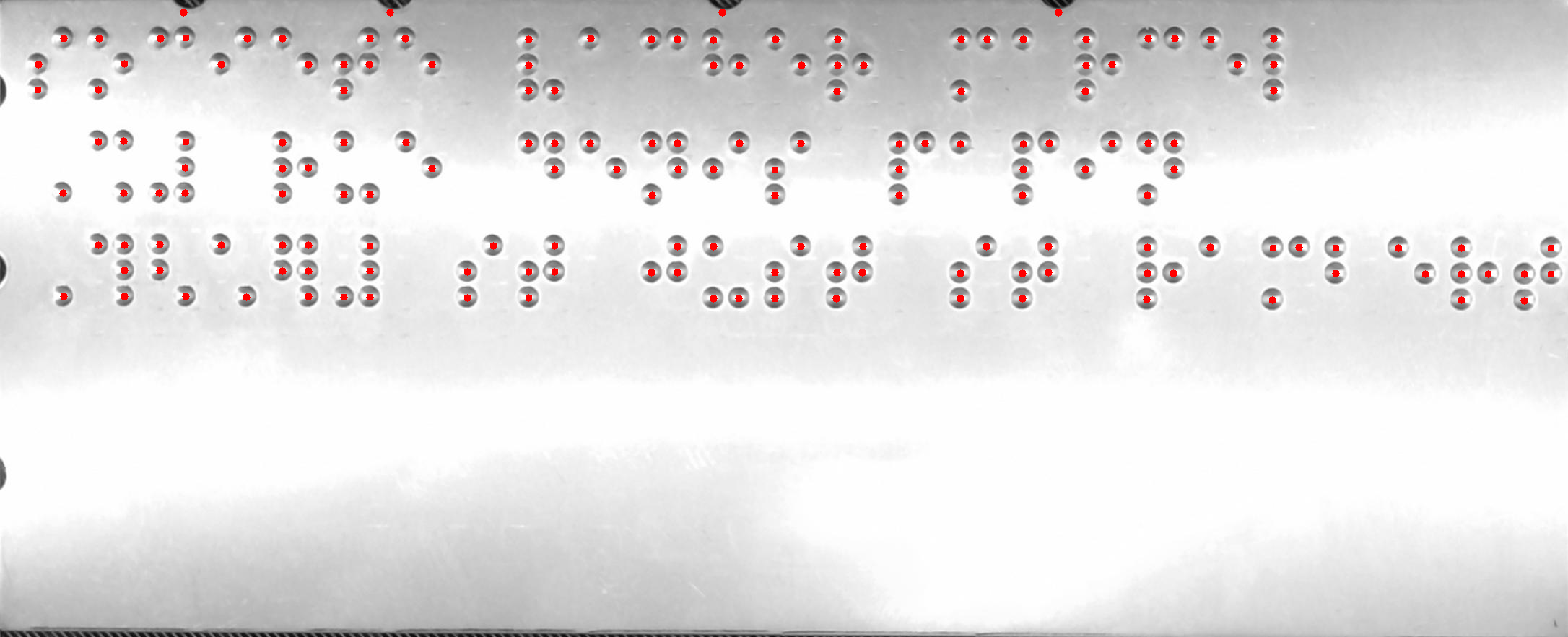

Image with adaptive threshold:

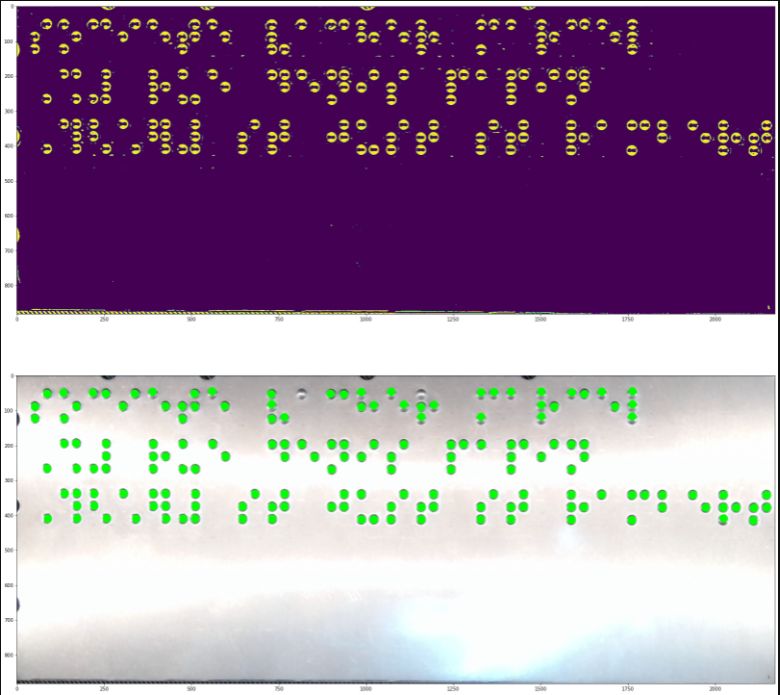

Image with template detection:

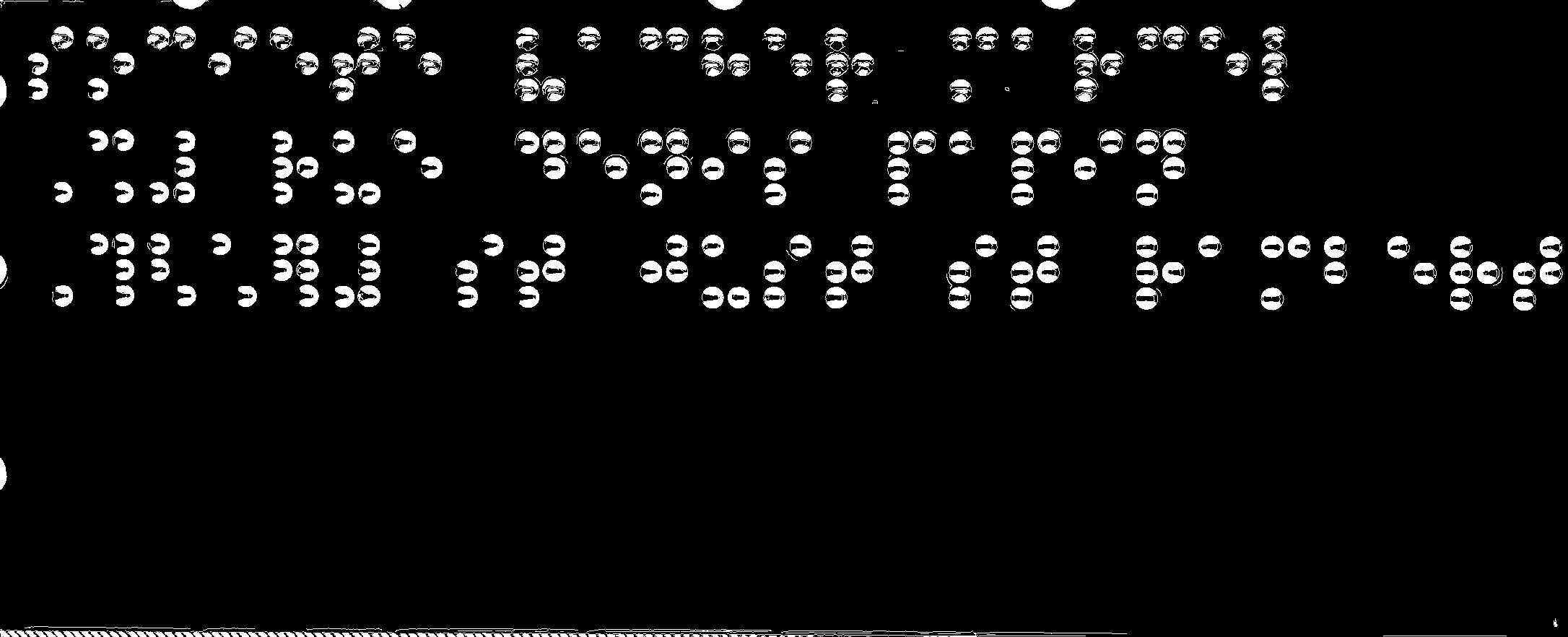

CodePudding user response:

I found a solution that may not be better than yours, because I had to overfit a few parameters to the given input...

The problem is challenging because the input image was taken under non-uniform illumination conditions (the center part is brighter than the top). Consider taking a better snapshot...

Food for thought:

The dots are arranged in rows, and none of the code here uses that information. Exploiting the row structure may give better results.
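As a rough illustration of how the row structure could be used, here is a minimal sketch that groups detected dot centers into rows by their y coordinate. The helper name `group_into_rows` and the tolerance `row_tol` are hypothetical, and the sketch assumes the plate is roughly axis-aligned in the image (no strong rotation).

```python
def group_into_rows(centers, row_tol):
    """Group (x, y) centers into rows.

    Points are sorted by y; a new row starts whenever the vertical gap to
    the previous point exceeds row_tol (a hypothetical tuning parameter).
    """
    pts = sorted(centers, key=lambda p: p[1])
    rows = []
    for p in pts:
        if rows and abs(p[1] - rows[-1][-1][1]) <= row_tol:
            rows[-1].append(p)      # close enough in y: same row
        else:
            rows.append([p])        # large vertical gap: start a new row
    # Order each row from left to right
    return [sorted(r, key=lambda p: p[0]) for r in rows]


# Made-up centers: two rows plus one isolated detection
centers = [(10, 12), (50, 10), (30, 11), (12, 52), (48, 50), (31, 90)]
rows = group_into_rows(centers, row_tol=10)
for r in rows:
    print(r)
```

A detection that ends up alone in its own row, or far from the regular spacing within a row, could then be treated as a likely false positive.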

To overcome the brightness differences, we may subtract the median of the surrounding pixels from each pixel (using a large filter radius) and compute the absolute difference:

bg = cv2.medianBlur(gray, 151) # Background
fg = cv2.absdiff(gray, bg) # Foreground (use absdiff because the dots are dark but bright at the center)

Apply a binary threshold (use THRESH_OTSU for an automatic threshold level):

_, thresh = cv2.threshold(fg, 0, 255, cv2.THRESH_OTSU)

The result in thresh is not good enough for finding the dots.

We may use the fact that the dots are dark with a bright center. That produces strong edges around and inside the dots.

Apply Canny edge detection:

edges = cv2.Canny(gray, threshold1=50, threshold2=100)

Merge edges with thresh (use a binary OR):

thresh = cv2.bitwise_or(thresh, edges)

Find connected components and continue (filter the components by area).

Code sample:

import numpy as np
import cv2

img1 = cv2.imread('prod.jpg')
gray = cv2.cvtColor(img1, cv2.COLOR_BGR2GRAY) # Convert to grayscale
bg = cv2.medianBlur(gray, 151) # Compute the background (use a large filter radius for excluding the dots)
fg = cv2.absdiff(gray, bg) # Compute absolute difference
_, thresh = cv2.threshold(fg, 0, 255, cv2.THRESH_OTSU) # Apply binary threshold (THRESH_OTSU applies automatic threshold level)
edges = cv2.Canny(gray, threshold1=50, threshold2=100) # Apply Canny edge detection.
thresh = cv2.bitwise_or(thresh, edges) # Merge edges with thresh

_, _, boxes, _ = cv2.connectedComponentsWithStats(thresh)
boxes = boxes[1:] # Skip the first component (the background)
filtered_boxes = []
for x, y, w, h, pixels in boxes:
    #if pixels < 1000 and h < 35 and w < 35 and h > 14 and w > 14 and x > 15 and y > 15 and pixels > 100:
    if pixels < 1000 and x > 15 and y > 15 and pixels > 200:
        filtered_boxes.append((x, y, w, h))

for x, y, w, h in filtered_boxes:
    W = int(w)/2
    H = int(h)/2
    cv2.circle(img1, (x + int(W), y + int(H)), 2, (0, 255, 0), 20)

# Show images for testing
cv2.imshow('bg', bg)
cv2.imshow('fg', fg)
cv2.imshow('gray', gray)
cv2.imshow('edges', edges)
cv2.imshow('thresh', thresh)
cv2.imshow('img1', img1)
cv2.waitKey()
cv2.destroyAllWindows()

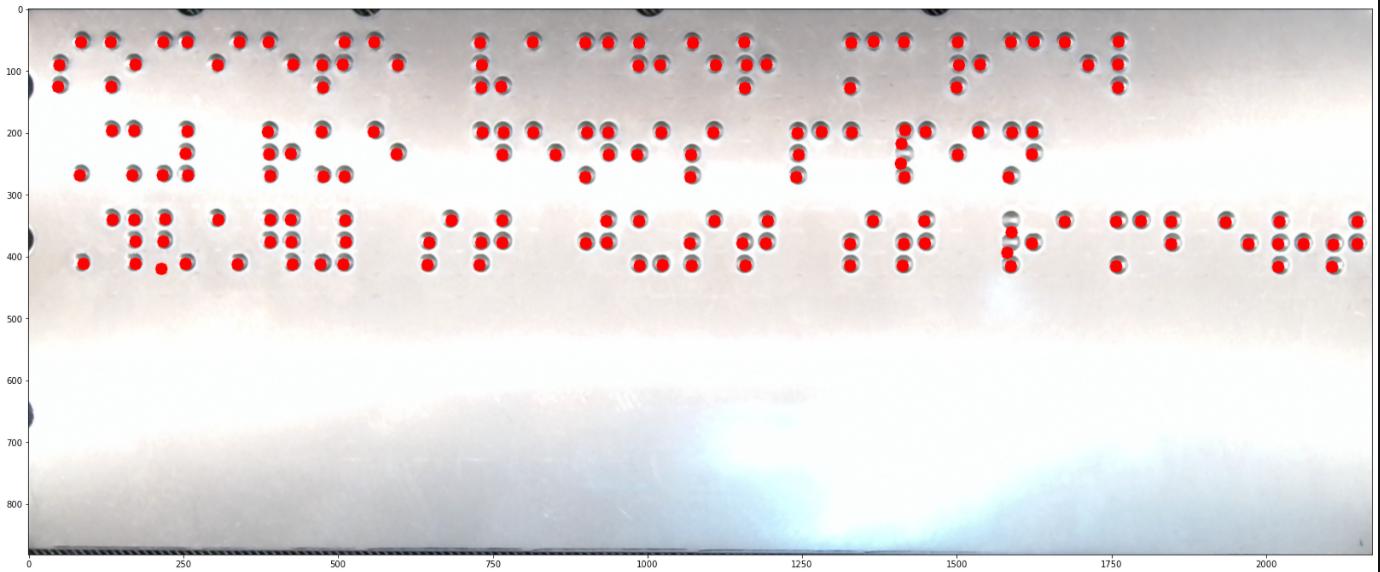

A few dots are marked twice.

It is relatively simple to merge the overlapping circles into one circle.
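One possible way to do that merge, sketched here with NumPy only: greedily cluster centers that lie closer together than some minimum distance and replace each cluster with its mean. The function name `merge_close_centers` and the `min_dist` value are assumptions for illustration; a sensible `min_dist` would be somewhat below the spacing between neighboring dots.

```python
import numpy as np


def merge_close_centers(centers, min_dist):
    """Greedily merge centers closer than min_dist into their mean position."""
    centers = np.asarray(centers, dtype=float)
    used = np.zeros(len(centers), dtype=bool)
    merged = []
    for i in range(len(centers)):
        if used[i]:
            continue  # already absorbed into an earlier cluster
        dist = np.linalg.norm(centers - centers[i], axis=1)
        close = (dist < min_dist) & ~used   # unused points near center i (includes i itself)
        used |= close
        merged.append(centers[close].mean(axis=0))
    return np.array(merged)


# Made-up centers: the first two are a double detection of the same dot
centers = [(100, 100), (104, 102), (200, 100), (100, 160)]
merged = merge_close_centers(centers, min_dist=15)
print(merged)
```

This is a greedy pass, so the result can depend on the order of the input when clusters chain together; for a handful of double detections that is usually not a concern.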

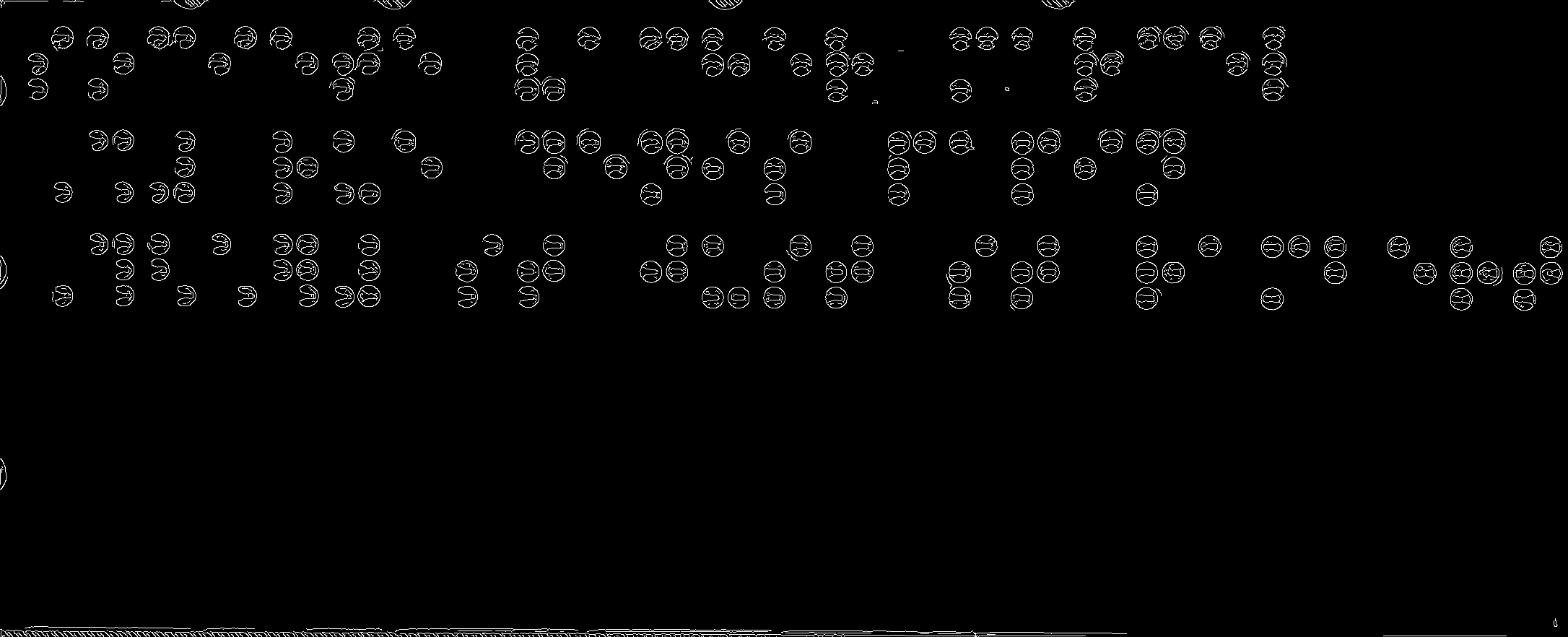

Intermediate results:

thresh (before merging with edges):

Update:

As Jeru Luke commented, we may use non-maximum suppression, as is done in the question.

Here is a code sample:

import numpy as np
import cv2
from imutils.object_detection import non_max_suppression

img1 = cv2.imread('prod.jpg')
gray = cv2.cvtColor(img1, cv2.COLOR_BGR2GRAY) # Convert to grayscale
bg = cv2.medianBlur(gray, 151) # Compute the background (use a large filter radius for excluding the dots)
fg = cv2.absdiff(gray, bg) # Compute absolute difference
_, thresh = cv2.threshold(fg, 0, 255, cv2.THRESH_OTSU) # Apply binary threshold (THRESH_OTSU applies automatic threshold level)
edges = cv2.Canny(gray, threshold1=50, threshold2=100) # Apply Canny edge detection.
thresh = cv2.bitwise_or(thresh, edges) # Merge edges with thresh

_, _, boxes, _ = cv2.connectedComponentsWithStats(thresh)
boxes = boxes[1:] # Skip the first component (the background)
filtered_boxes = []
for x, y, w, h, pixels in boxes:
    if pixels < 1000 and x > 15 and y > 15 and pixels > 200:
        filtered_boxes.append((x, y, x + w, y + h)) # Store as (x1, y1, x2, y2) for non_max_suppression

filtered_boxes = non_max_suppression(np.array(filtered_boxes), overlapThresh=0.2)

for x1, y1, x2, y2 in filtered_boxes:
    cv2.circle(img1, ((x1 + x2)//2, (y1 + y2)//2), 2, (0, 255, 0), 20)

CodePudding user response:

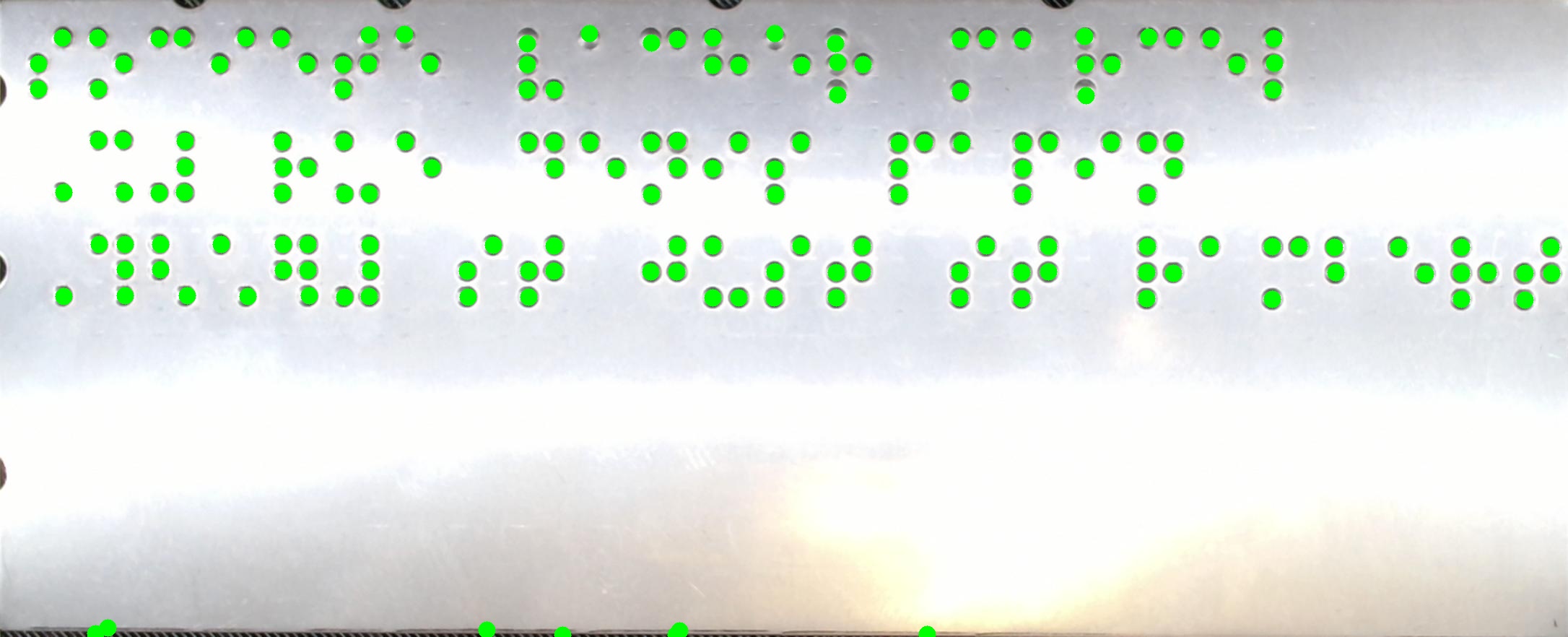

Approach: template matching.

I chose it because the appearance of these dots can't be captured by thresholding on brightness alone. The dots have both brighter and darker pixels than the flat surface. At best you'd get fractured components, and given how close the dots are to each other, any morphology operation to repair the fractured components would risk joining adjacent dots.

So here I do template matching. Works well enough, even though the appearance of these dots changes across the image. Brightness is uneven but that's not too much of a problem. TM_CCOEFF subtracts the mean for both patches before correlating.
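To illustrate why uneven brightness matters little here, the following NumPy-only sketch computes the zero-mean correlation for a single pair of equally sized patches, which is the quantity TM_CCOEFF evaluates at each location. The helper `ccoeff` and the random test data are made up for the illustration; this is not OpenCV's implementation.

```python
import numpy as np


def ccoeff(patch, template):
    """Zero-mean correlation of two equally sized patches."""
    p = patch.astype(np.float64) - patch.mean()
    t = template.astype(np.float64) - template.mean()
    return float((p * t).sum())


rng = np.random.default_rng(0)
template = rng.integers(0, 200, size=(8, 8)).astype(np.float64)
patch = template.copy()
brighter = patch + 40.0  # same content under a constant brightness offset

score_same = ccoeff(patch, template)
score_brighter = ccoeff(brighter, template)
print(score_same, score_brighter)  # the two scores agree up to rounding
```

A constant offset cancels out when the mean is subtracted. A change in contrast (a gain factor) would still scale the score, which is what the normalized variant TM_CCOEFF_NORMED compensates for.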

imutils requires you to come up with bounding boxes for its NMS. Instead I'm using a simple and effective NMS formulation for raster data. Comparing floats for equality is okay here because dilate simply replicates the maximum value across the kernel size. It uses L-inf distance; for euclidean distance or an approximation, the kernel needs to be round.

import cv2 as cv
import numpy as np

# Non-maximum suppression for raster data
def non_maximum_suppression(im, radius):
    # dilation replaces every pixel with the maximum of its neighborhood
    dilated = cv.dilate(im, kernel=None, iterations=radius)
    # a pixel is a local maximum if dilation left it unchanged
    return (im == dilated)

# Read image
im = cv.imread("fK2WOX.jpeg", cv.IMREAD_GRAYSCALE)

# Select template
# (x,y,w,h) = (880, 247, 44, 44)
(x,y,w,h) = cv.selectROI("ROI", 255-im, showCrosshair=False, fromCenter=True) # inverted to see the white rectangle...
cv.destroyWindow("ROI")
print((x,y,w,h))
template = im[y:y+h, x:x+w]

# find instances
scores = cv.matchTemplate(im, template, method=cv.TM_CCOEFF)
scores = scores / scores.max()

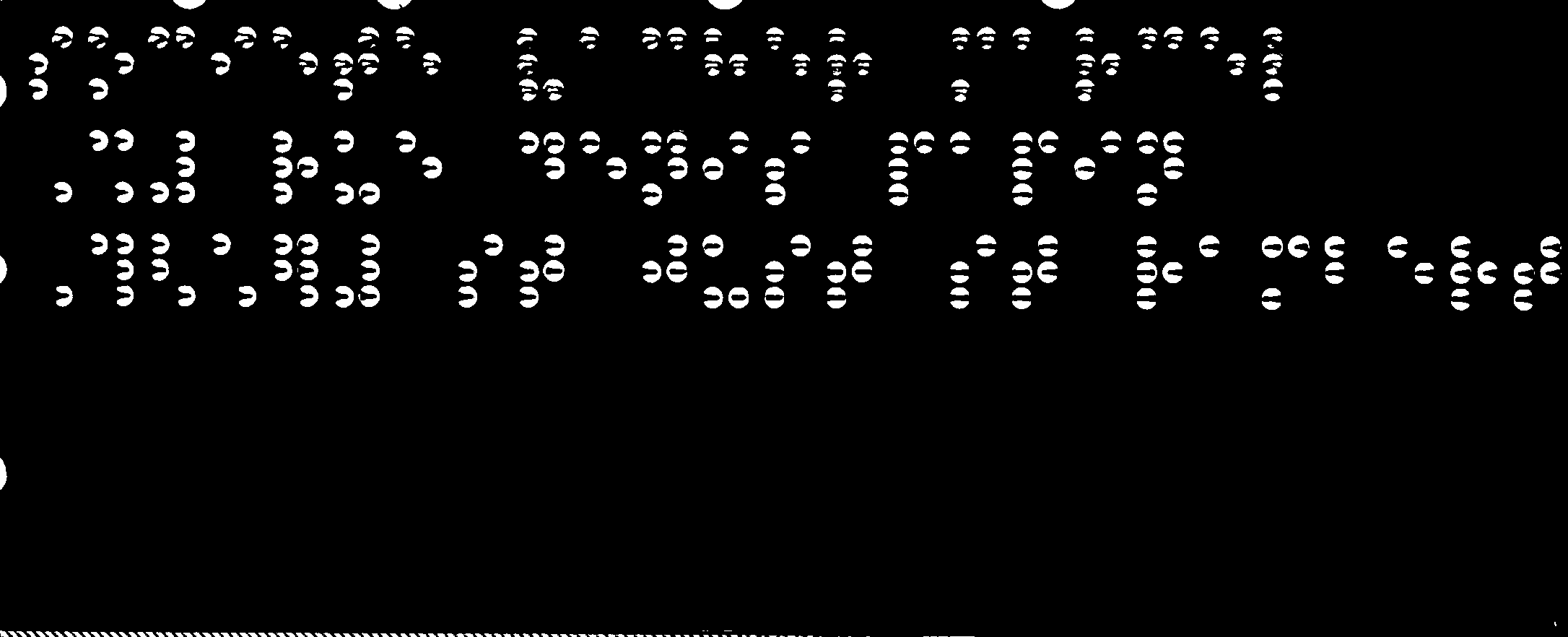

You can see that the template creates additional peaks above and below each dot. That can't be helped, because that is how these dots look: they consist of a bright dash surrounded by two darker blobs. These false peaks can be suppressed with NMS quite easily.

# find peaks of sufficient strength and NMS
threshold = 0.2
nmsradius = 20 # proportional to size of template/dot
nmsmask = (scores >= threshold) & non_maximum_suppression(scores, radius=nmsradius)
coords = cv.findNonZero(nmsmask.astype(np.uint8)).reshape((-1, 2))
coords += (w//2, h//2) # shift coordinates to be center of template

# draw result
canvas = cv.cvtColor(im, cv.COLOR_GRAY2BGR)
for pt in coords:
    cv.circle(canvas, pt, radius=5, color=(0,0,255), thickness=cv.FILLED)

There are no overlapping/split detections. You'll have to excuse the false detections around the punched holes near the top. Just crop those out.
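The raster NMS used above compares each pixel against a square neighborhood (L-inf distance). As a sketch of the round-kernel variant mentioned earlier, the following NumPy-only version takes the maximum over a disk instead. The name `nms_round` and the toy score map are made up for illustration, and the function assumes a floating-point score array such as the output of matchTemplate.

```python
import numpy as np


def nms_round(scores, radius):
    """True where a pixel equals the maximum within a disk of the given radius."""
    h, w = scores.shape
    # Pad with -inf so pixels outside the image never win the maximum
    padded = np.pad(scores, radius, mode="constant", constant_values=-np.inf)
    local_max = np.full(scores.shape, -np.inf)
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            if dx * dx + dy * dy > radius * radius:
                continue  # offset lies outside the disk
            shifted = padded[radius + dy:radius + dy + h,
                             radius + dx:radius + dx + w]
            local_max = np.maximum(local_max, shifted)
    return scores == local_max


# Toy score map with three peaks
scores = np.zeros((9, 9))
scores[4, 4] = 1.0  # strongest peak
scores[4, 6] = 0.8  # 2 px from the strongest peak: suppressed
scores[1, 1] = 0.5  # sqrt(18) px away diagonally: outside the disk, kept
mask = nms_round(scores, radius=3) & (scores > 0)
print(np.argwhere(mask))
```

With a square neighborhood of the same radius, the peak at (1, 1) would be suppressed as well, since its L-inf distance to the strongest peak is exactly 3. The loop visits every offset in the disk, so for large radii it may be preferable to call cv.dilate once with an elliptical structuring element instead.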