I have the pipeline below:

apiVersion: tekton.dev/v1beta1
kind: Pipeline
metadata:
  name: git-clone-pipeline
spec:
  params:
    - name: repo-url
      type: string
  workspaces:
    - name: shared-workspace
  tasks:
    - name: clone-repository
      taskRef:
        name: git-clone
      workspaces:
        - name: output
          workspace: shared-workspace
      params:
        - name: url
          value: "$(params.repo-url)"
        - name: deleteExisting
          value: "true"
    - name: build
      taskRef:
        name: gradle
      runAfter:
        - "clone-repository"
      params:
        - name: TASKS
          value: build
        - name: GRADLE_IMAGE
          value: docker.io/library/gradle:jdk17-alpine@sha256:dd16ae381eed88d2b33f977b504fb37456e553a1b9c62100b8811e4d8dec99ff
        - name: PROJECT_DIR
          value: ./discount-api
      workspaces:
        - name: source
          workspace: shared-workspace

And the PipelineRun:

apiVersion: tekton.dev/v1beta1
kind: PipelineRun
metadata:
  name: run-pipeline
  namespace: tekton-pipelines
spec:
  serviceAccountName: git-service-account
  pipelineRef:
    name: git-clone-pipeline
  workspaces:
    - name: shared-workspace
      emptyDir: {}
  params:
    - name: repo-url
      value: [email protected]:anandjaisy/discount.git

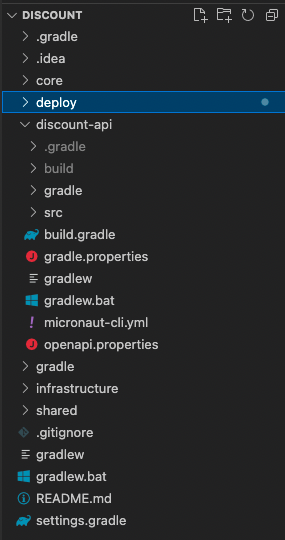

And a project structure directory as

I'm facing this error during the pipeline run:

2022-06-18T08:17:57.775506334Z Directory '/workspace/source/discount-api' does not contain a Gradle build.

The error means the Gradle build files were not found. The git-clone task has cloned the code somewhere in the cluster. How can I find out where the code is?

kubectl get pods

run-pipeline-build-pod                              0/1   Error       0   173m
run-pipeline-fetch-source-pod                       0/1   Completed   0   173m
tekton-dashboard-b7b8599c6-wf7b2                    1/1   Running     0   12d
tekton-pipelines-controller-674dd45d79-529pc        1/1   Running     0   12d
tekton-pipelines-webhook-86b8b9d87b-qmxzk           1/1   Running     0   12d
tekton-triggers-controller-6d769dddf7-847nt         1/1   Running     0   12d
tekton-triggers-core-interceptors-69c47c4bb7-77bvt  1/1   Running     0   12d
tekton-triggers-webhook-7c4fc7c74-lgm79             1/1   Running     0   12d

Running kubectl logs on the run-pipeline-build-pod pod shows the same error as above.

kubectl exec --stdin --tty <Pod-name> -- /bin/bash

The command above opens a shell inside a pod. However, in this case run-pipeline-fetch-source-pod has already completed, so I can't exec into it.

How can I solve this missing-file issue?

CodePudding user response:

You're using an emptyDir as a workspace. You should use a PVC. You could fix your pipelinerun with something like this:

workspaces:
  - name: shared-workspace
    volumeClaimTemplate:
      spec:
        accessModes:
          - ReadWriteOnce
        resources:
          requests:
            storage: 2Gi

The workspace is used by your git-clone task. If you describe the git-clone pod from your pipelinerun, you should find a volume of type emptyDir, which is where the data is cloned.

Each Task runs in its own Pod, and an emptyDir is not shared across Pods. So when another Task uses that workspace, its Pod starts from scratch: there's no data when the pod starts. A PVC would allow you to share data between tasks.

As for debugging: once pods are completed, there's not much to do.

When debugging pipelineruns, if a Pod exits too fast for me to kubectl exec -it into it, I would change the failing container's arguments (by editing the corresponding Task), replacing its usual command with "sleep 86400" or something equivalent. Then start a new pipelinerun.
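As a rough sketch of that idea, here is a throwaway Task that lists the workspace and then sleeps, so there is time to kubectl exec into its Pod. This is a variation on editing the failing Task itself, not the gradle Task's actual definition. The Task name debug-sleep and the busybox image are assumptions made up for illustration; the workspace is named source so it lines up with the existing build task's workspace binding.

```yaml
# Hypothetical debugging Task: the name and image are illustrative assumptions.
apiVersion: tekton.dev/v1beta1
kind: Task
metadata:
  name: debug-sleep
spec:
  workspaces:
    - name: source              # same workspace name the build task binds
  steps:
    - name: inspect-and-wait
      image: docker.io/library/busybox   # assumed image; any image with a shell works
      script: |
        #!/bin/sh
        # Show what the previous task left in the workspace, if anything.
        ls -la "$(workspaces.source.path)"
        # Keep the container alive so it can be inspected with kubectl exec.
        sleep 86400
```

To try it, the build task in the Pipeline could temporarily point its taskRef at debug-sleep, dropping the gradle-specific params since this Task declares none. The run's timeout may stop the Pod well before the sleep ends, so the sleep duration is only an upper bound.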

In your case, if you had already been using a PVC as your workspace, it would have been easier to just start a Pod (for example, by creating a deployment), attach the PVC created by your pipelinerun, and check what's in there.
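A minimal sketch of such an inspection Pod, assuming the pipelinerun has already been switched to a PVC-backed workspace. The Pod name workspace-inspector, the busybox image, and the mount path are assumptions; <pvc-name> is a placeholder for the claim created by your pipelinerun, which kubectl get pvc in the same namespace should list.

```yaml
# Hypothetical inspection Pod: name, image, and mount path are illustrative assumptions.
apiVersion: v1
kind: Pod
metadata:
  name: workspace-inspector
  namespace: tekton-pipelines   # same namespace as the PipelineRun and its PVC
spec:
  restartPolicy: Never
  containers:
    - name: shell
      image: docker.io/library/busybox   # assumed image; any image with a shell works
      command: ["sleep", "3600"]         # stay alive long enough to look around
      volumeMounts:
        - name: workspace
          mountPath: /workspace/source
  volumes:
    - name: workspace
      persistentVolumeClaim:
        claimName: <pvc-name>   # placeholder: replace with the PVC from your pipelinerun
```

Once the Pod is running, the kubectl exec command from the question can be used against it (with /bin/sh instead of /bin/bash for busybox) to browse /workspace/source and confirm whether discount-api is there.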