import requests

import scrapy

from scrapy.http import Request

from bs4 import BeautifulSoup

class TestSpider(scrapy.Spider):

name = 'test'

start_urls = ['https://rejestradwokatow.pl/adwokat/list/strona/1/sta/2,3,9']

custom_settings = {

'CONCURRENT_REQUESTS_PER_DOMAIN': 1,

'DOWNLOAD_DELAY': 1,

'USER_AGENT': 'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/79.0.3945.130 Safari/537.36'

}

def parse(self, response):

books = response.xpath("//td[@class='icon_link']//a//@href").extract()

for book in books:

url = response.urljoin(book)

yield Request(url, callback=self.parse_book)

def parse_book(self, response):

detail=response.xpath("//div[@class='line_list_K']")

for i in range(len(detail)):

title=detail[i].xpath("//span[contains(text(), 'Status:')]//div").get()

print(title)

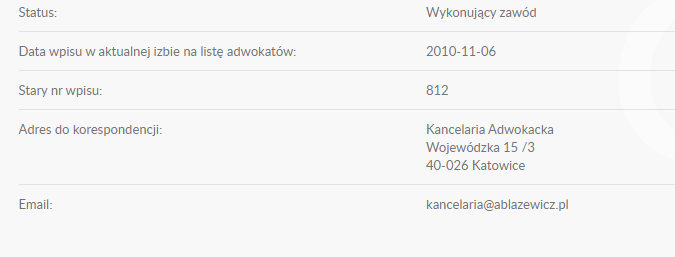

I am trying to grab the data from status and data from email but it give me none this is page link

CodePudding user response:

I will show an example without using Scrappy. I hope you understand and can adapt it to your code. The only difficulty is that the email consists of 2 parts inside the attributes

import requests

from bs4 import BeautifulSoup

url = "https://rejestradwokatow.pl/adwokat/abaewicz-dominik-49965"

response = requests.get(url)

soup = BeautifulSoup(response.text, 'lxml')

status = soup.find('span', string='Status:').findNext('div').getText()

data_ea = soup.find('span', string='Email:').findNext('div').get('data-ea')

data_eb = soup.find('span', string='Email:').findNext('div').get('data-eb')

email = f"{data_ea}@{data_eb}"

print(status, email)

OUTPUT:

Wykonujący zawód [email protected]

CodePudding user response:

Try:

import scrapy

from scrapy.http import Request

from scrapy.crawler import CrawlerProcess

class TestSpider(scrapy.Spider):

name = 'test'

start_urls = ['https://rejestradwokatow.pl/adwokat/list/strona/1/sta/2,3,9']

custom_settings = {

'CONCURRENT_REQUESTS_PER_DOMAIN': 1,

'DOWNLOAD_DELAY': 1,

'USER_AGENT': 'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/79.0.3945.130 Safari/537.36'

}

def parse(self, response):

books = response.xpath("//td[@class='icon_link']//a//@href").extract()

for book in books:

url = response.urljoin(book)

yield Request(url, callback=self.parse_book)

def parse_book(self, response):

e1 = response.xpath('(//*[@])[1]//@data-ea')

e1=e1.get() if e1 else None

e2=response.xpath('(//*[@])[1]//@data-eb')

e2=e2.get() if e2 else None

try:

data = e1 '@' e2

yield {

'status':response.xpath('//*[@]/div[1]/div/text()').get(),

'email': data,

'url':response.url

}

except:

pass

if __name__ == "__main__":

process =CrawlerProcess(TestSpider)

process.crawl()

process.start()