I am using Spark 3.1.3 to connect to Astra DB as well as to a local Cassandra server, but spark-submit fails with a java.lang.ClassNotFoundException. I have confirmed that the same thing happens with other Spark 3.x versions, while the same code works fine with Spark 2.4.2.

Here is my Main.scala:

```scala
import com.datastax.spark.connector.toSparkContextFunctions
import org.apache.spark.sql.SparkSession
import org.apache.spark.{SparkConf, SparkContext}

object Main {

  def main(args: Array[String]): Unit = {

    val conf = new SparkConf()
      .set("spark.cassandra.connection.config.cloud.path", "/path/astradb-secure-connect.zip")
      .set("spark.cassandra.auth.username", "client-id")
      .set("spark.cassandra.auth.password", "client-secret")
      .setAppName("SparkTest")

    val sparkCTX = new SparkContext(conf)
    val sparkSess = SparkSession.builder.appName("MyStream").getOrCreate()

    sparkCTX.setLogLevel("Error")

    println("\n\n\n\n************\n\n\n\n")

    val Rdd = sparkCTX.cassandraTable("my_keyspace", "accounts") // Exact line of error

    Rdd.foreach(s => {
      println(s)
    })
  }
}
```

My build.sbt looks like:

```scala
name := "Synchronization"

version := "1.0-SNAPSHOT"

scalaVersion := "2.12.15"

idePackagePrefix := Some("info.myapp.synchronization")

val sparkVersion = "3.1.3"

libraryDependencies ++= Seq(
  "org.apache.spark" %% "spark-core" % sparkVersion,
  "org.apache.spark" %% "spark-sql" % sparkVersion,
  "org.apache.spark" %% "spark-mllib" % sparkVersion,
  "org.apache.spark" %% "spark-streaming" % sparkVersion,
  "io.spray" %% "spray-json" % "1.3.6",
  "org.scalaj" %% "scalaj-http" % "2.4.2"
)

libraryDependencies += "com.datastax.spark" %% "spark-cassandra-connector" % "3.1.0" % "provided"
libraryDependencies += "com.twitter" % "jsr166e" % "1.1.0"
libraryDependencies += "net.liftweb" %% "lift-json" % "3.4.3"
libraryDependencies += "com.sun.mail" % "javax.mail" % "1.6.2"
libraryDependencies += "com.typesafe.akka" %% "akka-stream" % "2.5.22"
libraryDependencies += "com.github.jurajburian" %% "mailer" % "1.2.4"
```

The code I am using to run this is:

$ sbt package

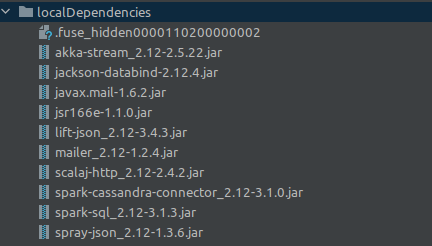

$ spark-submit --class "Main" --jars $(echo localDependencies/*.jar | tr ' ' ',') target/scala-2.12/*jar

My localDependencies folder contains jars downloaded from mvnrepository and looks like:

The error I get is:

```
Exception in thread "main" java.lang.NoClassDefFoundError: com/datastax/spark/connector/CassandraRow
    at Main$.main(Main.scala:20)
    at Main.main(Main.scala)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:498)
    at org.apache.spark.deploy.JavaMainApplication.start(SparkApplication.scala:52)
    at org.apache.spark.deploy.SparkSubmit.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:951)
    at org.apache.spark.deploy.SparkSubmit.doRunMain$1(SparkSubmit.scala:180)
    at org.apache.spark.deploy.SparkSubmit.submit(SparkSubmit.scala:203)
    at org.apache.spark.deploy.SparkSubmit.doSubmit(SparkSubmit.scala:90)
    at org.apache.spark.deploy.SparkSubmit$$anon$2.doSubmit(SparkSubmit.scala:1039)
    at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:1048)
    at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)
Caused by: java.lang.ClassNotFoundException: com.datastax.spark.connector.CassandraRow
    at java.net.URLClassLoader.findClass(URLClassLoader.java:382)
    at java.lang.ClassLoader.loadClass(ClassLoader.java:418)
    at java.lang.ClassLoader.loadClass(ClassLoader.java:351)
    ... 14 more
```

Please help me fix the issue, or suggest a combination of versions that works.

Answer:

If you look at the connector's dependencies on Maven Repository, you will see that it depends on com.datastax.spark:spark-cassandra-connector-driver_2.12, which is missing from your list. To simplify submission, use spark-cassandra-connector-assembly instead, which includes all the necessary classes.
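A minimal build.sbt sketch of that suggestion, assuming connector version 3.1.0 for Scala 2.12 as in the question. The assembly artifact replaces the plain connector entry, so the driver classes come with it. Because sbt package does not bundle dependencies into the jar, the assembly jar still has to reach spark-submit at run time, for example by copying it into localDependencies/ so that --jars picks it up, or by passing the Maven coordinates com.datastax.spark:spark-cassandra-connector-assembly_2.12:3.1.0 to spark-submit's --packages option.

```scala
// build.sbt (sketch): compile against the connector's assembly artifact instead of
// "spark-cassandra-connector", which alone does not bring in the driver classes.
// "provided" keeps it out of the packaged jar; it must be supplied at submit time.
libraryDependencies += "com.datastax.spark" %% "spark-cassandra-connector-assembly" % "3.1.0" % "provided"
```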

Answer:

I have found a workaround: use sbt assembly instead of sbt package and resolve the merge conflicts. However, if there is a way to make this work with sbt package, please add it as an answer.

So, here is what I did:

In the folder named project under the project root directory, there should be a plugins.sbt file:

```
project root
|---project
|   |----plugins.sbt        // This file
|   |----build.properties
|   |----target
|   |----project
|---src
|---target
|   |----scala-2.1x
|        |----xxxx.jar      // The built jar file
|---build.sbt               // Add the merge-strategy code here
|---default.properties
```

In plugins.sbt, add the line:

```scala
addSbtPlugin("com.eed3si9n" % "sbt-assembly" % "0.15.0")
```

Then, in build.sbt in the project root directory, add these lines to resolve the merge conflicts:

```scala
assemblyJarName in assembly := "my-built-fatjar-1.0.jar"

assemblyMergeStrategy in assembly := {
  case PathList("META-INF", xs @ _*) => MergeStrategy.discard
  case x => MergeStrategy.first
}
```
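The strategy above discards everything under META-INF, which also drops the META-INF/services files that Spark's service loader uses to register data sources by short name, and MergeStrategy.first keeps only one reference.conf even though several libraries in this build (Akka, the DataStax Java driver) ship their own. A more conservative sketch, assuming sbt 1.x slash syntax and the sbt-assembly plugin from plugins.sbt, keeps those files. It also marks the Spark modules as provided, since spark-submit supplies them at run time, and leaves the connector without "provided" so that it and its driver actually end up in the fat jar; with the question's "provided" connector the fat jar would still lack CassandraRow.

```scala
// build.sbt (sketch; sbt 1.x slash syntax, assumes the sbt-assembly plugin is enabled)
val sparkVersion = "3.1.3"

libraryDependencies ++= Seq(
  // Supplied by spark-submit at run time, so excluded from the fat jar.
  "org.apache.spark" %% "spark-core" % sparkVersion % "provided",
  "org.apache.spark" %% "spark-sql"  % sparkVersion % "provided",
  // Bundled (no "provided"), together with its transitive driver dependencies.
  "com.datastax.spark" %% "spark-cassandra-connector" % "3.1.0"
)

assembly / assemblyJarName := "my-built-fatjar-1.0.jar"

assembly / assemblyMergeStrategy := {
  // Merge service-loader registrations line by line instead of dropping them.
  case PathList("META-INF", "services", _*) => MergeStrategy.filterDistinctLines
  // Other META-INF entries (manifests, signature files) are safe to drop.
  case PathList("META-INF", _*)              => MergeStrategy.discard
  // Typesafe Config defaults from each library must be concatenated, not overwritten.
  case "reference.conf"                      => MergeStrategy.concat
  case _                                     => MergeStrategy.first
}
```

The other Spark modules in the question (spark-mllib, spark-streaming) can be marked provided the same way. One trade-off: provided dependencies are not on the runtime classpath that sbt run uses, so local runs then go through spark-submit.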

Notes:

1. The editor may flag this merge-strategy code as a syntax error, and it may also make sbt package fail with an error. It does work with sbt assembly, and the output jar is written to project root/target/scala-2.1x/my-built-fatjar-1.0.jar.

2. sbt assembly takes several times longer than sbt package to build the jar. For development, run sbt ~assembly instead: this runs the build in continuous mode, watching the source files and rebuilding the jar automatically whenever they change.
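To confirm that a built jar really carries the connector and its driver before pointing it at Astra, a small check like the following can be added to the project and run with spark-submit --class ClasspathCheck target/scala-2.12/my-built-fatjar-1.0.jar. The object name ClasspathCheck is hypothetical, and com.datastax.oss.driver.api.core.CqlSession is assumed here as a representative class of the DataStax Java driver 4.x that connector 3.x depends on.

```scala
// Hypothetical diagnostic: reports whether classes needed by the connector can be
// loaded from the current classpath, without running their static initializers.
object ClasspathCheck {

  def isOnClasspath(className: String): Boolean =
    try {
      // initialize = false: locate and load the class only
      Class.forName(className, false, getClass.getClassLoader)
      true
    } catch {
      // NoClassDefFoundError (a LinkageError) means one of the class's own dependencies is missing
      case _: ClassNotFoundException | _: LinkageError => false
    }

  def main(args: Array[String]): Unit = {
    val required = Seq(
      "com.datastax.spark.connector.CassandraRow",  // spark-cassandra-connector
      "com.datastax.oss.driver.api.core.CqlSession" // DataStax Java driver 4.x (assumed)
    )
    required.foreach { name =>
      println(s"$name -> ${if (isOnClasspath(name)) "found" else "MISSING"}")
    }
  }
}
```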