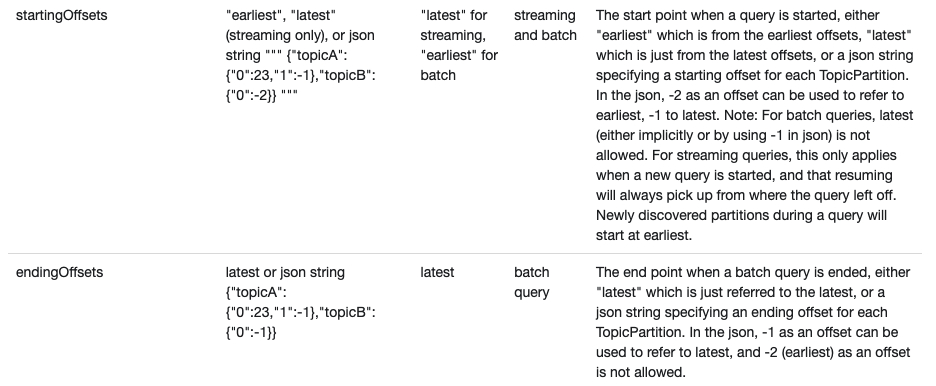

I'm using pyspark 2.4.5 to consume message from Kafka. For batch query, there are 2 options startingOffset and endingOffset can be used to read specific message on Kafka.

I'm quite confused with the example in Spark document:

I don't understand the example for configuration with JSON format:

""" {"topicA":{"0":23,"1":-1},"topicB":{"0":-2}} """

My questions are:

- what does the configuration

"0": 23mean? - This configuration is used for consuming multiple topics, if I read only 1 topic A then what does the configuration look like?

CodePudding user response:

A kafka topic consists of multiple partitions where each partition would have its own offset. So a starting offset of {"topicA":{"0":23,"1":-1},"topicB":{"0":-2}} would mean:

| Topic | Partition | Offset |

|---|---|---|

| topic1 | 0 | 23 |

| topic1 | 1 | -1 |

| topic2 | 1 | -2 |

Meaning for -1 and -2 are already specified in the doc. In case you are not familiar with kafka partitions, there are many good explanations out there like this.

For single topic, it would be simply: {"topicA":{"0":23,"1":-1}}