I have a function that i have created which looks for missing values in a dataframe. Missing values can take on many forms in our dataset and this toy function below handles it My question is around applying this function. I have a list of columns (20 or so) that i would like to apply the same function to. Below is the setup for one column

import numpy as np

import pandas as pd

from sklearn.datasets import load_iris

# Create a sample dataset

iris = load_iris()

df = pd.DataFrame(data= np.c_[iris['data'], iris['target']],

columns= iris['feature_names'] ['target'])

df['species'] = pd.Categorical.from_codes(iris.target, iris.target_names)

# Here we replace all values of setosa with 'missing_value'

df = df.applymap(lambda x: 'missing_value' if x == 'setosa' else x)

# Here we want to create a flag for the missing values

def add_missing_value_flags(mydf, column):

# Generate the new column name

new_col = "missing_" column

# Create flags where the data is missing

# that has put in a holder to represent a missing value

mydf[new_col]= np.where(mydf[column] == 'missing_value', True,

np.where(mydf[column] == '', True,

np.where(mydf[column] == 'N/A', True,

np.where(mydf[column] == 'N\A', True,

np.where(mydf[column] == 'NA', True,

np.where(mydf[column] == 'N.A.', True,

np.where(mydf[column] == 'NONE', True,

np.where(mydf[column] == '.', True,

np.where(mydf[column].str.len() == 1, True,

np.where(mydf[column] == '..', True, False))))))))))

return(mydf)

add_missing_value_flags(df, 'species')

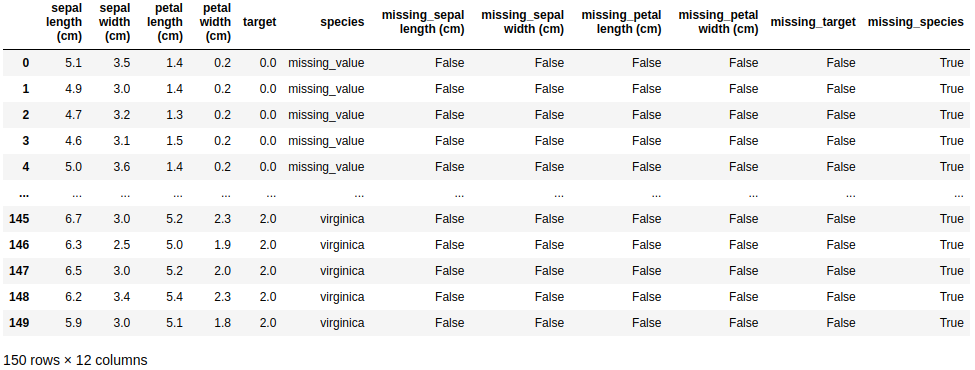

sepal length (cm) sepal width (cm) ... species missing_species

0 5.1 3.5 ... missing_value True

1 4.9 3.0 ... missing_value True

2 4.7 3.2 ... missing_value True

3 4.6 3.1 ... missing_value True

4 5.0 3.6 ... missing_value True

.. ... ... ... ... ...

145 6.7 3.0 ... virginica False

146 6.3 2.5 ... virginica False

147 6.5 3.0 ... virginica False

148 6.2 3.4 ... virginica False

149 5.9 3.0 ... virginica False

Is there a method in python where I can apply my function to the rest of my columns similiar to: mydf[mydf.columns[mydf.columns.str.contains('species|plant|earth')]].apply...

Thank you for your time

CodePudding user response:

# Create a sample dataset

iris = load_iris()

df = pd.DataFrame(data= np.c_[iris['data'], iris['target']],

columns= iris['feature_names'] ['target'])

df['species'] = pd.Categorical.from_codes(iris.target, iris.target_names)

# Here we replace all values of setosa with 'missing_value'

df = df.applymap(lambda x: 'missing_value' if x == 'setosa' else x)

def function_to_apply(series):

if not re.match("missing_", series.name):

new_column_name = "missing_" series.name

new_column_values = series.isin([

'missing_value', '', 'N/A', 'N\A',

'NA', 'N.A.', 'NONE', '.', '..'

])

try:

new_column_values = new_column_values | (series.str.len()==1)

except AttributeError:

pass

df[new_column_name] = new_column_values

return

I wrote function_to_apply in order to modify inplace df and I set the return value = None, so:

df.apply(function_to_apply)

#RETURNS

#sepal length (cm) None

#sepal width (cm) None

#petal length (cm) None

#petal width (cm) None

#target None

#species None

#dtype: object

However, by applying this function, you have added columns to df:

I know is not the cleanest solution, but it works and it is relatively fast.

P.S. you need to import re in addition to the other libraries to run this code.

CodePudding user response:

If you want to apply this function to all your columns you can do that within a list comprehension:

[add_missing_value_flags(df, column) for column in df.columns]

The resulting dataframe (df) will contain the columns you need.

Note that for running this, you will need to adapt your function add_missing_value_flags slightly. Most of the columns in your dataframe do not contain string data. With the following version the list comprehension above will work:

def add_missing_value_flags(mydf, column):

# Generate the new column name

new_col = "missing_" column

# Create flags where the data is missing or there is a shipper name

# that has put in a holder to represent a missing value

# convert values to strings before running string comparison

filtered_df = mydf[column].astype(str)

mydf[new_col]= np.where(filtered_df == 'missing_value', True,

np.where(filtered_df == '', True,

np.where(filtered_df == 'N/A', True,

np.where(filtered_df == 'N\A', True,

np.where(filtered_df == 'NA', True,

np.where(filtered_df == 'N.A.', True,

np.where(filtered_df == 'NONE', True,

np.where(filtered_df == '.', True,

np.where(filtered_df.str.len() == 1, True,

np.where(filtered_df == '..', True, False))))))))))

return(mydf)