I need to use a partition by clause on two columns and compute the row number within each partition. I also need to extract only the rows where the row number is 1.

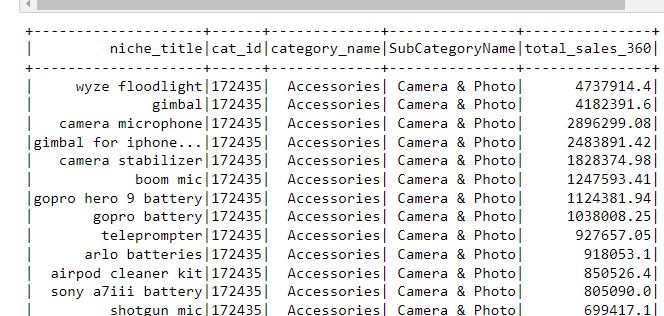

I have a DataFrame, df3, that holds the data shown in the image above.

I am trying to partition by two columns, category_name and SubCategoryName, and order the rows within each partition by total_sales_360 descending:

from pyspark.sql.window import Window

from pyspark.sql.functions import row_number

windowSpec = Window.partitionBy("category_name,SubCategoryName").orderBy("total_sales_360 desc")

df3.withColumn("row_number",row_number().over(windowSpec)).show(truncate=False)

I get an error when I try to display df3 after applying the window.

CodePudding user response:

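Change .partitionBy("category_name,SubCategoryName") to

.partitionBy("category_name", "SubCategoryName")

partitionBy takes each column as a separate argument. A single string containing a comma is treated as one column name, and no column with that name exists in df3.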