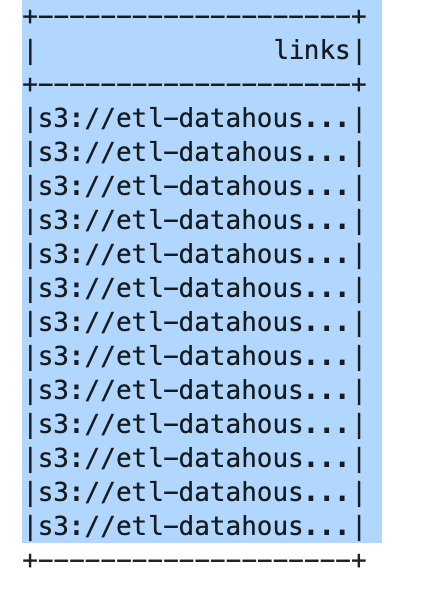

I have a column of S3 file paths. I want to read all of those paths and then concatenate the results in PySpark.

User response:

You can get the paths as a list using `map` and `collect`. Iterate over that list to read each path, and append each resulting Spark DataFrame to a second list. Then union all of the DataFrames in that second list into one.

```python
from functools import reduce

from pyspark.sql import DataFrame

# get all paths in a list
list_of_paths = data_sdf.rdd.map(lambda r: r.links).collect()

# read all paths and store each resulting DataFrame as an element of a list
list_of_sdf = []
for path in list_of_paths:
    list_of_sdf.append(spark.read.parquet(path))

# check using list_of_sdf[0].show() or list_of_sdf[1].printSchema()

# run union on all of the stored DataFrames (columns are matched by name)
final_sdf = reduce(DataFrame.unionByName, list_of_sdf)
```

You can then write the combined `final_sdf` DataFrame out to a new Parquet file.

User response:

You can pass multiple paths to Spark's Parquet reader, `spark.read.parquet`. So, assuming these are paths to Parquet files that you want to read into one DataFrame, you can do something like:

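```python
# collect the values of the `links` column to the driver as a Python list
list_of_paths = [r.links for r in links_df.select("links").collect()]

# pass every path to a single read call
aggregate_df = spark.read.parquet(*list_of_paths)
```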