I have set up Grafana, Prometheus, and Loki (2.6.1) on my Kubernetes (1.21) cluster as follows:

    helm upgrade --install promtail grafana/promtail -n monitoring -f monitoring/promtail.yaml
    helm upgrade --install prom prometheus-community/kube-prometheus-stack -n monitoring --values monitoring/prom.yaml
    helm upgrade --install loki grafana/loki -n monitoring --values monitoring/loki.yaml

with:

    # monitoring/loki.yaml
    loki:
      schemaConfig:
        configs:
          - from: 2020-09-07
            store: boltdb-shipper
            object_store: s3
            schema: v11
            index:
              prefix: loki_index_
              period: 24h
      storageConfig:
        aws:
          s3: s3://eu-west-3/cluster-loki-logs
        boltdb_shipper:
          shared_store: filesystem
          active_index_directory: /var/loki/index
          cache_location: /var/loki/cache
          cache_ttl: 168h

    # monitoring/promtail.yaml
    config:
      serverPort: 80
      clients:
        - url: http://loki:3100/loki/api/v1/push

    # monitoring/prom.yaml
    prometheus:
      prometheusSpec:
        serviceMonitorSelectorNilUsesHelmValues: false
        serviceMonitorSelector: {}
        serviceMonitorNamespaceSelector:
          matchLabels:
            monitored: "true"
    grafana:
      sidecar:
        datasources:
          defaultDatasourceEnabled: true
      additionalDataSources:
        - name: Loki
          type: loki
          url: http://loki.monitoring:3100

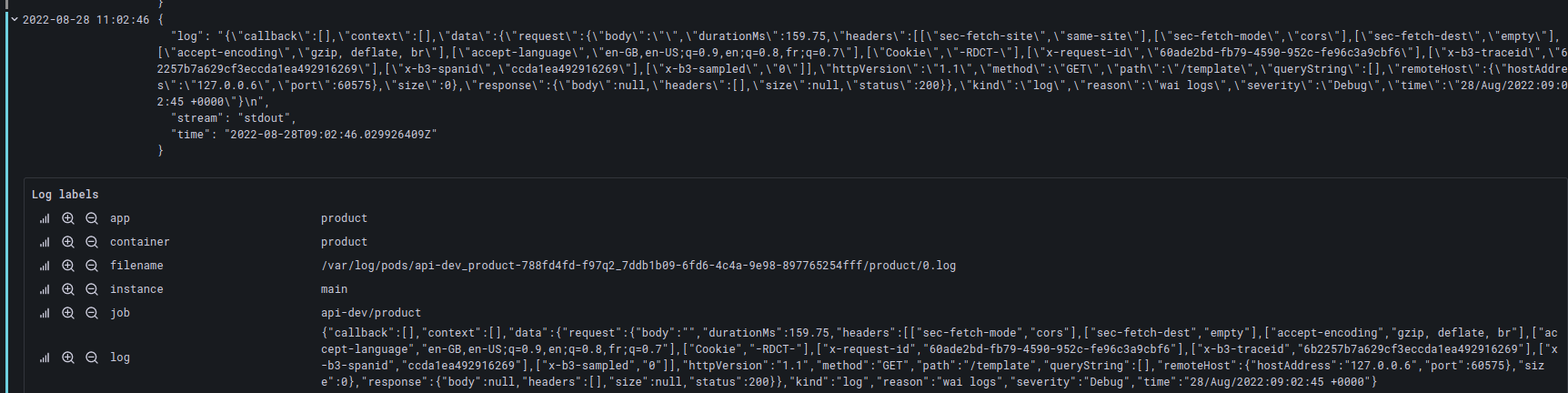

I get data from my containers, but, whenever I have a container logging in json format, I can't get access to the nested fields:

{app="product", namespace="api-dev"} | unpack | json

Yields:

My aim is, for example, to filter by log.severity

CodePudding user response:

Following this answer, it turns out to be a Promtail scraping issue.

The current Helm chart (promtail-6.3.1 / 2.6.1) uses cri as the default pipeline stage, which expects log lines in this format:

    "2019-04-30T02:12:41.8443515Z stdout xx message"

I should have used the docker stage, which expects JSON. Consequently, my promtail.yaml changed to:

    config:
      serverPort: 80
      clients:
        - url: http://loki:3100/loki/api/v1/push
      snippets:
        pipelineStages:
          - docker: {}
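
To illustrate why the choice of stage matters, here is a rough Python sketch of what each stage does to an incoming line. It is only an approximation for illustration, not Promtail's actual implementation: the sample lines, the application payload (severity, msg), and the helper names unwrap_cri and unwrap_docker are all made up, and the regular expression only loosely mirrors the CRI layout shown above.

```python
import json
import re

# Hypothetical application payload, invented for this example.
APP_PAYLOAD = {"severity": "error", "msg": "payment failed"}

# A line as written by Docker's json-file logging driver: the application
# output is wrapped as an escaped string in the "log" field.
DOCKER_LINE = json.dumps({
    "log": json.dumps(APP_PAYLOAD) + "\n",
    "stream": "stdout",
    "time": "2019-04-30T02:12:41.8443515Z",
})

# The same payload in the CRI layout: "<time> <stream> <flags> <content>".
CRI_LINE = "2019-04-30T02:12:41.8443515Z stdout F " + json.dumps(APP_PAYLOAD)

# Approximation of the CRI layout (an assumption, not Promtail's exact regex).
CRI_PATTERN = re.compile(
    r"^(?P<time>\S+) (?P<stream>stdout|stderr) (?P<flags>\S+) (?P<content>.*)$",
    re.DOTALL,
)


def unwrap_cri(line):
    """Return the content part of a CRI line, or the line unchanged if it does not match."""
    match = CRI_PATTERN.match(line)
    return match.group("content") if match else line


def unwrap_docker(line):
    """Return the "log" field of a Docker JSON line, or the line unchanged if it is not one."""
    try:
        outer = json.loads(line)
    except json.JSONDecodeError:
        return line
    if not isinstance(outer, dict) or "log" not in outer:
        return line
    return outer["log"].rstrip("\n")


if __name__ == "__main__":
    # Wrong stage: the Docker line does not match the CRI layout, so it passes
    # through unchanged and the payload stays an escaped string under "log".
    print(json.loads(unwrap_cri(DOCKER_LINE)))
    # Matching stage: the application's own fields are directly accessible.
    print(json.loads(unwrap_docker(DOCKER_LINE)))
```

Under these assumptions, a later JSON parse of the line left untouched by the cri-style unwrap only sees log, stream, and time, with the application payload stuck inside a string. Once the docker-style unwrap has run, the application's own fields (such as severity in this made-up payload) are what the JSON parser sees.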