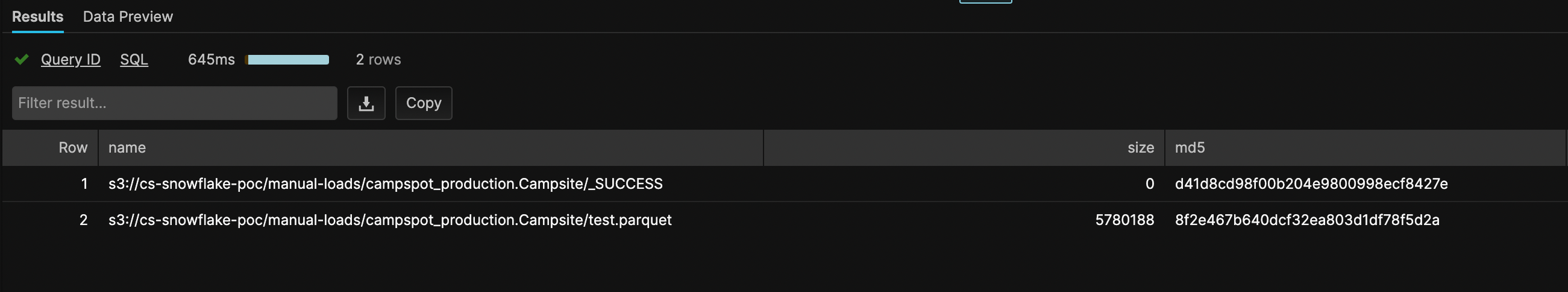

I'm working on moving some Parquet files in S3 over to Snowflake. The Storage Integration & External Stage were created just fine, and when I run the list @mystage command I can see the file that I want to check out in S3 so I know it exists & that my connection works.

When I go to do any kind of querying on the columns or table metadata, nothing is returned.

I'm following the basic documentation from this

create stage manual_test_stage

url = 's3://cs-snowflake-poc/manual-loads/'

storage_integration = cs_snowflake_poc_s3;

create or replace file format parquet_format

type = 'parquet';

select *

from table(

infer_schema(

location=>'@manual_test_stage/campspot_production.Campsite/test.parquet',

file_format=>'parquet_format'

)

);

-- neither query works

select *

from table(

infer_schema(

location=>'@manual_test_stage',

file_format=>'parquet_format'

)

);

-- end goal is to use this to just create the table for me by automatically reading the schema, but i can't do that when select array_agg returns an empty array.

create table mytable

using template (

select array_agg(object_construct(*))

from table(

infer_schema(

location=>'@manual_test_stage/campspot_production.Campsite/test.parquet',

file_format=>'my_parquet_format'

)

));

Tried all sorts of things: I recreated the stage to not include a / at the end, I tried like 5 different file format options in case that was screwing it up, and I loaded the parquet into Python to make sure it wasn't some issue with the actual file.

If anyone can help show me what I'm missing to get this to work it'd be much appreciated !

CodePudding user response:

INFER_SCHEMA allows to define FILES parameter.

select *

from table(

infer_schema(

location=>'@manual_test_stage/campspot_production.Campsite',

file_format=>'parquet_format',

files=>'test.parquet'

)

);

Additionally checking the direct access is recommeded to confirm it is not a permission issue:

SELECT t.$1

FROM "@manual_test_stage/campspot_production.Campsite/test.parquet"

(file_format=>'parquet_format') AS t;