I'm trying to run the MoveNet pose estimation model on a video, but my keypoints are very inaccurate. I assume this has nothing to do with the predictions themselves but with how I scale the points and draw them on the frame. However, I cannot find where these inaccuracies come from.

import tensorflow as tf
import numpy as np
from matplotlib import pyplot as plt
import cv2

interpreter = tf.lite.Interpreter(model_path='lite-model_movenet_singlepose_lightning_3.tflite')
interpreter.allocate_tensors()

def draw_keypoints(frame, keypoints, confidence_threshold):
    y, x, c = frame.shape
    shaped = np.squeeze(np.multiply(keypoints, [y,x,1]))

    for kp in shaped:
        ky, kx, kp_conf = kp
        if kp_conf > confidence_threshold:
            cv2.circle(frame, (int(kx), int(ky)), 4, (0,255,0), -1)

cap = cv2.VideoCapture("pushup-stock-compressed.mp4")
while cap.isOpened():
    ret, frame = cap.read()

    # Reshape image
    img = frame.copy()
    img = tf.image.resize_with_pad(np.expand_dims(img, axis=0), 192,192)
    input_image = tf.cast(img, dtype=tf.float32)

    # Setup input and output
    input_details = interpreter.get_input_details()
    output_details = interpreter.get_output_details()

    # Make predictions
    interpreter.set_tensor(input_details[0]['index'], np.array(input_image))
    interpreter.invoke()
    keypoints_with_scores = interpreter.get_tensor(output_details[0]['index'])

    # Rendering
    draw_keypoints(frame, keypoints_with_scores, 0.4)
    cv2.imshow('MoveNet Lightning', frame)

    if cv2.waitKey(10) & 0xFF==ord('q'):
        break

cap.release()
cv2.destroyAllWindows()

Answer:

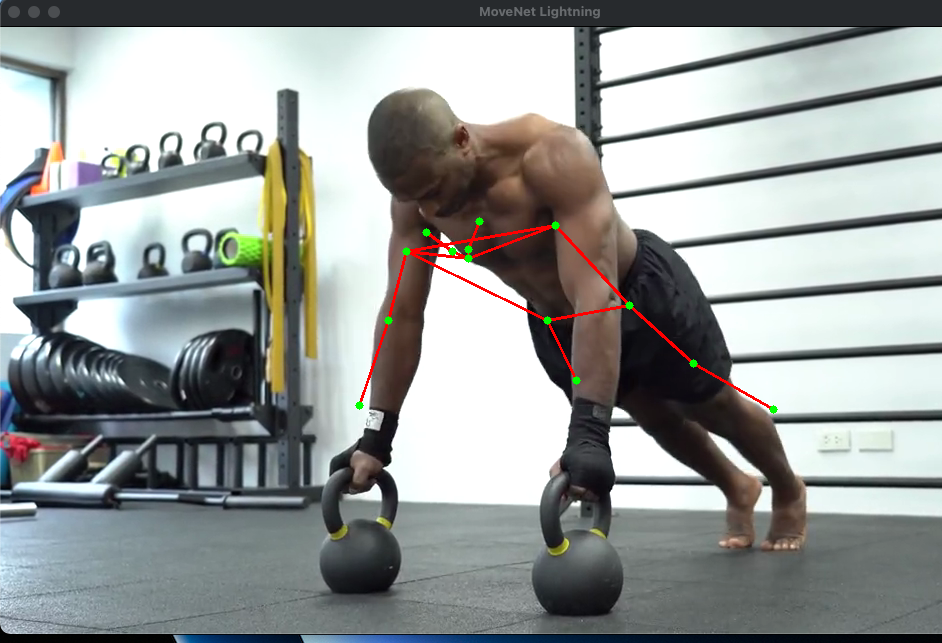

The image above shows the keypoints drawn on the resized, padded image that the network actually sees. It contains some incorrect predictions from the network (look at the right leg).

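The model returns keypoints normalized to the padded 192x192 input, not to the original frame, so multiplying them by the frame's height and width puts them in the wrong place. To draw them on the original image, apply the inverse of the resize-and-pad affine transform to the keypoints: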

As we can see, the keypoints are now drawn at the same positions relative to the person as on the resized, padded image.
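The inverse transform can also be written out directly: subtract the padding, then divide by the scale. The following is a minimal sketch rather than part of the original answer. The helper name `unpad_keypoints` and the 1080x1920 frame size are hypothetical, and it assumes the padding added by `tf.image.resize_with_pad` is split evenly between both sides of the image.

```python
import numpy as np

def unpad_keypoints(keypoints, frame_shape, input_size=192):
    """Map MoveNet keypoints (normalized y, x, score) to original-frame pixels.

    Hypothetical helper: assumes the frame was resized with symmetric
    padding to a square input_size x input_size model input.
    """
    frame_h, frame_w = frame_shape[:2]
    # Same scale factor the resize uses to fit the frame inside the square.
    scale = min(input_size / frame_h, input_size / frame_w)
    # Padding (in model-input pixels) above and to the left of the content.
    pad_y = (input_size - scale * frame_h) / 2
    pad_x = (input_size - scale * frame_w) / 2

    out = np.asarray(keypoints, dtype=np.float32).reshape(-1, 3).copy()
    out[:, 0] = (out[:, 0] * input_size - pad_y) / scale  # y in frame pixels
    out[:, 1] = (out[:, 1] * input_size - pad_x) / scale  # x in frame pixels
    return out  # the score column is left unchanged

# A keypoint at the centre of the padded input maps to the centre of the frame.
example = unpad_keypoints([[0.5, 0.5, 0.9]], frame_shape=(1080, 1920, 3))
print(example)
```

The returned rows are still in (y, x, score) order, so they can be passed to a drawing function that unpacks `ky, kx, kp_conf`.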

Complete example:

import tensorflow as tf
import numpy as np
import cv2

interpreter = tf.lite.Interpreter(
    model_path="lite-model_movenet_singlepose_lightning_3.tflite"
)
interpreter.allocate_tensors()

def draw_keypoints(frame, keypoints, confidence_threshold):
    # keypoints: rows of (y, x, score) in pixel coordinates of `frame`
    for kp in keypoints:
        ky, kx, kp_conf = kp
        if kp_conf > confidence_threshold:
            cv2.circle(frame, (int(kx), int(ky)), 4, (0, 255, 0), -1)

def get_affine_transform_to_fixed_sizes_with_padding(size, new_sizes):
    # size and new_sizes are both (height, width), so the matrix acts on
    # points in (y, x) order, the order MoveNet uses for its keypoints
    new_h, new_w = new_sizes
    scale = min(new_h / float(size[0]), new_w / float(size[1]))
    M = np.float32([[scale, 0, 0], [0, scale, 0]])
    M[0][2] = (new_h - scale * size[0]) / 2  # padding above the image
    M[1][2] = (new_w - scale * size[1]) / 2  # padding left of the image
    return M

frame = cv2.imread("gym.png")

# Reshape image
img = frame.copy()
img = tf.image.resize_with_pad(np.expand_dims(img, axis=0), 192, 192)
input_image = tf.cast(img, dtype=tf.float32)

# Setup input and output
input_details = interpreter.get_input_details()
output_details = interpreter.get_output_details()

# Make predictions
interpreter.set_tensor(input_details[0]["index"], np.array(input_image))
interpreter.invoke()
# Output has shape (1, 1, 17, 3); keep the 17 rows of (y, x, score)
keypoints_with_scores = interpreter.get_tensor(output_details[0]["index"])[0, 0]

# Draw on the resized, padded image: scale normalized (y, x) to pixels
img_resized = np.array(input_image).astype(np.uint8)[0]
keypoints_for_resized = keypoints_with_scores.copy()
keypoints_for_resized[:, 0] *= img_resized.shape[0]  # y * height
keypoints_for_resized[:, 1] *= img_resized.shape[1]  # x * width
draw_keypoints(img_resized, keypoints_for_resized, 0.4)
cv2.imwrite("image_with_keypoints_resized.png", img_resized)

# frame.shape is (height, width, channels)
orig_h, orig_w = frame.shape[:2]
M = get_affine_transform_to_fixed_sizes_with_padding((orig_h, orig_w), (192, 192))

# M has shape 2x3 but we need a square matrix to compute an inverse
M = np.vstack((M, [0, 0, 1]))
M_inv = np.linalg.inv(M)[:2]

# Map (y, x) from padded-input pixels back to original-image pixels
yx_keypoints = keypoints_with_scores[:, :2] * 192
yx_keypoints = cv2.transform(np.array([yx_keypoints]), M_inv)[0]
keypoints_with_scores = np.hstack((yx_keypoints, keypoints_with_scores[:, 2:]))

# Rendering
draw_keypoints(frame, keypoints_with_scores, 0.4)
cv2.imwrite("image_with_keypoints_original.png", frame)
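Since the question is about a video, where every frame has the same size, the inverse matrix could be built once before the capture loop and reused for each frame. The sketch below illustrates that idea with NumPy only. The names `build_inverse_letterbox` and `apply_to_keypoints` and the 720x1280 frame size are assumptions for illustration, and a dummy array stands in for the real model output.

```python
import numpy as np

def build_inverse_letterbox(frame_h, frame_w, input_size=192):
    """Return a 3x3 matrix mapping padded-input (y, x) pixels to frame (y, x) pixels.

    Hypothetical helper: assumes symmetric padding to a square model input.
    """
    scale = min(input_size / frame_h, input_size / frame_w)
    forward = np.array([
        [scale, 0.0, (input_size - scale * frame_h) / 2],  # y: scale, then pad
        [0.0, scale, (input_size - scale * frame_w) / 2],  # x: scale, then pad
        [0.0, 0.0, 1.0],
    ])
    return np.linalg.inv(forward)

def apply_to_keypoints(m_inv, keypoints_with_scores, input_size=192):
    """Convert raw output of shape (1, 1, 17, 3) to frame pixels; scores are kept."""
    kps = np.squeeze(keypoints_with_scores).astype(np.float64)
    # Homogeneous (y, x, 1) coordinates in padded-input pixels.
    yx1 = np.column_stack((kps[:, :2] * input_size, np.ones(len(kps))))
    yx_frame = (m_inv @ yx1.T).T[:, :2]
    return np.column_stack((yx_frame, kps[:, 2]))

# Built once, before the capture loop (hypothetical 720x1280 video).
M_INV = build_inverse_letterbox(720, 1280)

# Inside the loop this would receive the interpreter output for each frame;
# here a dummy output with every keypoint at the input centre is used.
dummy_output = np.full((1, 1, 17, 3), 0.5, dtype=np.float32)
frame_keypoints = apply_to_keypoints(M_INV, dummy_output)
print(frame_keypoints[0])
```

Building the matrix outside the loop avoids repeating the inversion for every frame. If the capture can deliver frames of varying size, the matrix would need to be rebuilt whenever the size changes.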