I have the following sampled data frame from a million rows. It'll show value counts of anomalous rows, a dataframe with only anomalous rows, and the plot.

Input data:

df_sample = pd.DataFrame({

'AbsoluteTopImpressionPercentage': [0.0, 1.0, 1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 1.0, 0.75, 1.0, 1.0, 0.0, 0.0, 0.0, 1.0, 1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.5, 0.0, 0.5, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 1.0, 1.0, 0.0, 0.0, 0.5, 0.0, 0.0, 0.0, 1.0, 1.0],

'AdFormat': ['TEXT', 'TEXT', 'UNKNOWN', 'UNKNOWN', 'UNKNOWN', 'UNKNOWN', 'UNKNOWN', 'UNKNOWN', 'TEXT', 'TEXT', 'UNKNOWN', 'UNKNOWN', 'UNKNOWN', 'UNKNOWN', 'UNKNOWN', 'UNKNOWN', 'TEXT', 'UNKNOWN', 'TEXT', 'TEXT', 'UNKNOWN', 'UNKNOWN', 'UNKNOWN', 'UNKNOWN', 'TEXT', 'UNKNOWN', 'UNKNOWN', 'UNKNOWN', 'UNKNOWN', 'UNKNOWN', 'UNKNOWN', 'TEXT', 'UNKNOWN', 'UNKNOWN', 'TEXT', 'UNKNOWN', 'UNKNOWN', 'UNKNOWN', 'UNKNOWN', 'UNKNOWN', 'UNKNOWN', 'UNKNOWN', 'UNKNOWN', 'UNKNOWN', 'UNKNOWN', 'TEXT', 'UNKNOWN', 'TEXT', 'UNKNOWN', 'UNKNOWN'],

'AdNetworkType1': ['SEARCH', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH'],

'AdNetworkType2': ['SEARCH', 'SEARCH', 'SEARCH', 'SEARCH_PARTNERS', 'SEARCH_PARTNERS', 'SEARCH_PARTNERS', 'SEARCH_PARTNERS', 'SEARCH_PARTNERS', 'SEARCH', 'SEARCH_PARTNERS', 'SEARCH_PARTNERS', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH_PARTNERS', 'SEARCH_PARTNERS', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH_PARTNERS', 'SEARCH_PARTNERS', 'SEARCH_PARTNERS', 'SEARCH_PARTNERS', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH_PARTNERS', 'SEARCH_PARTNERS', 'SEARCH_PARTNERS', 'SEARCH_PARTNERS', 'SEARCH', 'SEARCH_PARTNERS', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH_PARTNERS', 'SEARCH_PARTNERS', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH', 'SEARCH'],

'AllConversionRate': [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0],

'Clicks': [0, 1, 0, 0, 0, 0, 0, 0, 0, 1, 4, 0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 10, 0, 1, 0, 0, 1, 0],

'ConversionRate': [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0],

'Ctr': [0.0, 1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 1.0, 0.2105, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 1.0, 0.3333, 1.0, 0.25, 0.0, 0.5, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.2326, 0.0, 1.0, 0.0, 0.0, 1.0, 0.0],

'Date': ['2021-04-09 00:00:00 00:00', '2021-04-19 00:00:00 00:00', '2022-08-24 00:00:00 00:00', '2021-07-25 00:00:00 00:00', '2022-07-29 00:00:00 00:00', '2022-05-27 00:00:00 00:00', '2022-05-18 00:00:00 00:00', '2022-07-24 00:00:00 00:00', '2021-02-05 00:00:00 00:00', '2021-04-30 00:00:00 00:00', '2021-07-11 00:00:00 00:00', '2021-05-24 00:00:00 00:00', '2022-05-22 00:00:00 00:00', '2021-07-01 00:00:00 00:00', '2021-07-11 00:00:00 00:00', '2022-03-24 00:00:00 00:00', '2021-05-12 00:00:00 00:00', '2022-06-14 00:00:00 00:00', '2021-04-27 00:00:00 00:00', '2021-01-29 00:00:00 00:00', '2022-09-08 00:00:00 00:00', '2021-06-07 00:00:00 00:00', '2022-05-28 00:00:00 00:00', '2022-03-26 00:00:00 00:00', '2021-04-09 00:00:00 00:00', '2022-05-22 00:00:00 00:00', '2021-05-28 00:00:00 00:00', '2022-05-22 00:00:00 00:00', '2021-07-06 00:00:00 00:00', '2021-07-14 00:00:00 00:00', '2021-06-24 00:00:00 00:00', '2021-03-24 00:00:00 00:00', '2021-06-03 00:00:00 00:00', '2022-05-30 00:00:00 00:00', '2021-03-15 00:00:00 00:00', '2022-08-05 00:00:00 00:00', '2021-07-06 00:00:00 00:00', '2022-03-30 00:00:00 00:00', '2022-09-07 00:00:00 00:00', '2021-05-27 00:00:00 00:00', '2021-06-04 00:00:00 00:00', '2022-04-16 00:00:00 00:00', '2022-05-22 00:00:00 00:00', '2021-07-08 00:00:00 00:00', '2022-05-26 00:00:00 00:00', '2021-02-09 00:00:00 00:00', '2022-04-27 00:00:00 00:00', '2021-05-06 00:00:00 00:00', '2021-06-29 00:00:00 00:00', '2022-06-01 00:00:00 00:00'],

'Anomaly': [0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0]

})

My code:

df_sample = df_sample.sort_values(by='Date')

df_sample['Date'] = df_sample['Date'].apply(pd.to_datetime)

print(df_sample.Anomaly.value_counts())

print('')

display(df_sample.loc[df_sample['Anomaly'] == 1])

colors=[]

for val in df_sample['Anomaly']:

if val == 1:

colors.append('red')

else:

colors.append('blue')

# or

#colors = pd.cut(df['Sum'].tolist(), [-np.inf, 10, 20, np.inf],

# labels=['green', 'orange', 'red'])

#ax = df['Sum'].plot(kind='barh',color=colors)

fig, axs = plt.subplots(figsize=(22, 12))

df_sample.groupby(df_sample['Date'].dt.date)["Clicks"].sum().plot(kind='barh', ax=axs, color=colors)

plt.xlabel("Clicks")

plt.ylabel("Dates")

every_nth = 7

for n, label in enumerate(axs.yaxis.get_ticklabels()):

if n % every_nth != 0:

label.set_visible(False)

Output:

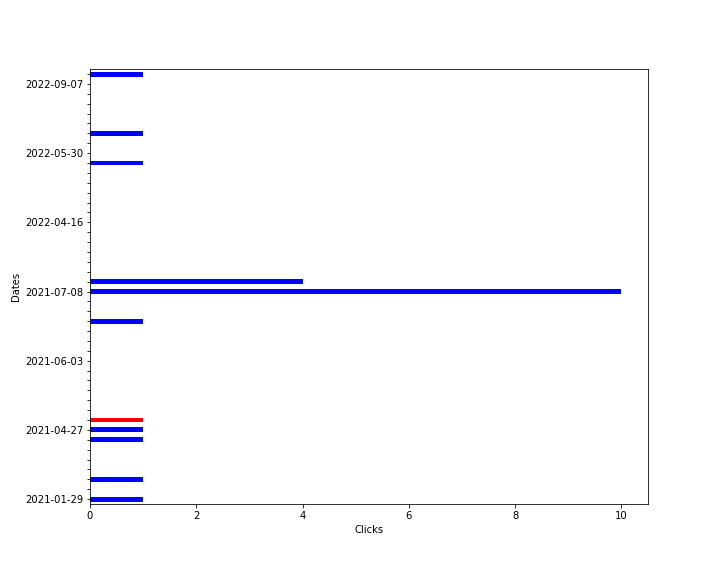

Now in the output, I want those df_sample.Anomaly rows with value == 1 to be shown in the graph horizontal bars as red instead of blue. Any idea?

CodePudding user response:

Without changing your code too much, you need to create the list of colors on your grouped data which you will plot later.

The line, where I create out, I used max as aggregation for the column Anomaly. In your example data for each group of Anomaly there is only only 0's or only 1's. If these values differ you can decide here what you want to do (e.g take first or last, sum them up, min or max)

Then build the list of colors based on the new data in out['Anomaly'] and plot it based on out['Clicks']

df_sample['Date'] = pd.to_datetime(df_sample['Date'])

df_sample = df_sample.sort_values(by='Date')

out = df_sample.groupby(df_sample['Date'].dt.date).agg({'Clicks' : 'sum', 'Anomaly': 'max'})

print(out)

#collect colors based on that data

colors = ['red' if val==1 else 'blue' for val in out['Anomaly']]

fig, axs = plt.subplots(figsize=(10,8))

out['Clicks'].plot(kind='barh', ax=axs, color=colors)

axs.set_xlabel("Clicks")

axs.set_ylabel("Dates")

every_nth = 7

for n, label in enumerate(axs.yaxis.get_ticklabels()):

if n % every_nth != 0:

label.set_visible(False)