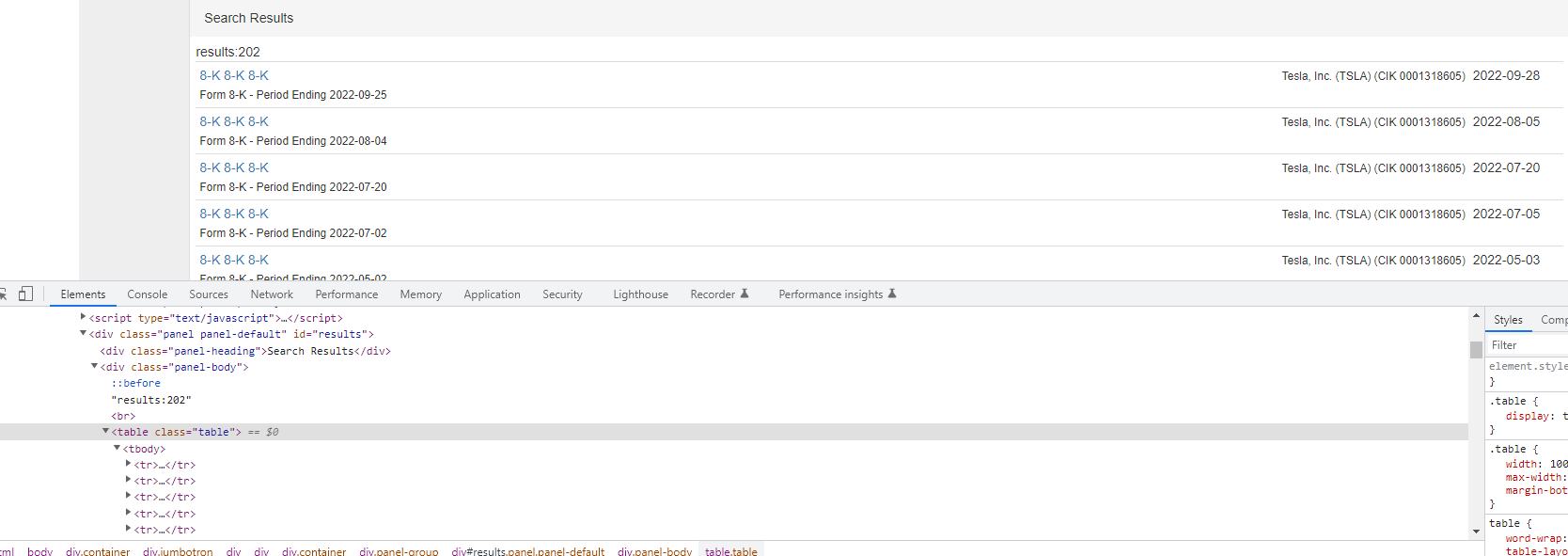

i would like to copy links and dates associated with earnings/other reports(8k,10q) respectively for a specific stock. i have tried looping through the results table class but i am getting timeout error while using selenium. Any help would be greatly appreciated.Thank you!

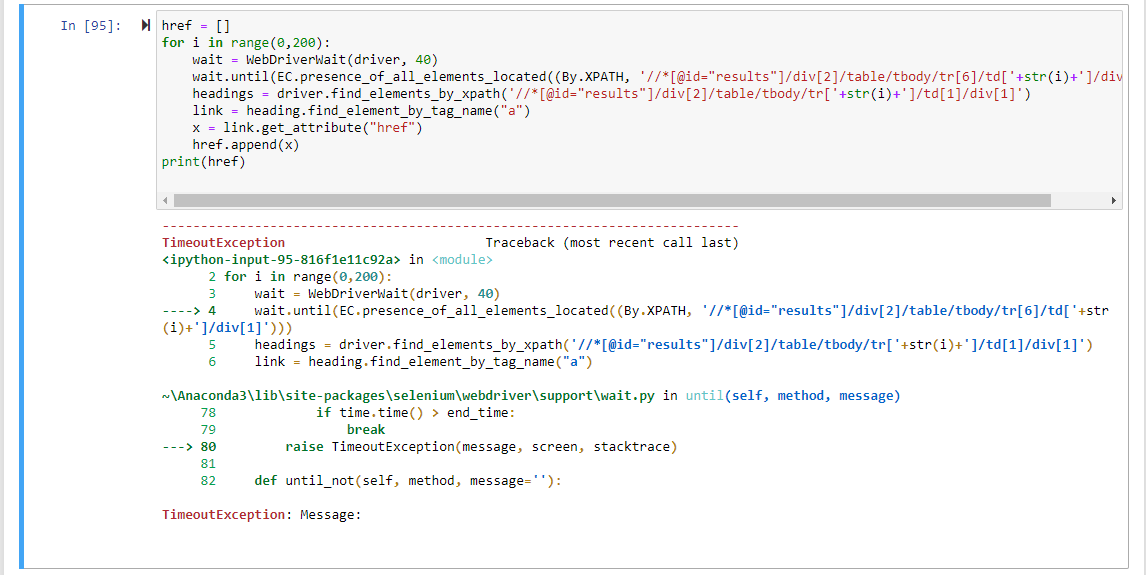

approach 2) tired to loop through all the individual results xpath of the table:

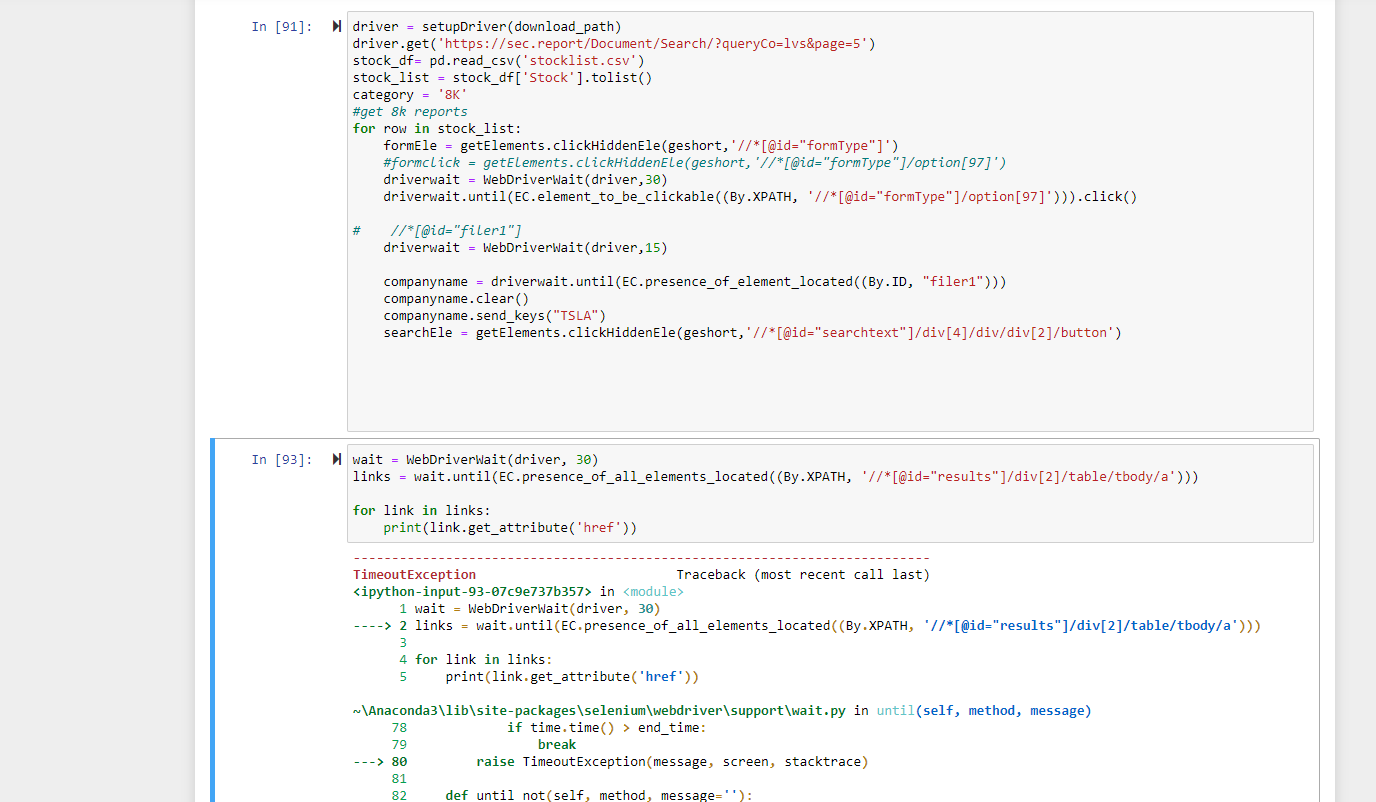

#approach1

driver = setupDriver(download_path)

driver.get('https://sec.report/Document/Search/?queryCo=lvs&page=5')

formEle = getElements.clickHiddenEle(geshort,'//*[@id="formType"]')

#formclick = getElements.clickHiddenEle(geshort,'//*[@id="formType"]/option[97]')

driverwait = WebDriverWait(driver,30)

driverwait.until(EC.element_to_be_clickable((By.XPATH, '//*[@id="formType"]/option[97]'))).click()

# //*[@id="filer1"]

driverwait = WebDriverWait(driver,15)

companyname = driverwait.until(EC.presence_of_element_located((By.ID, "filer1")))

companyname.clear()

companyname.send_keys("TSLA")

searchEle = getElements.clickHiddenEle(geshort,'//*[@id="searchtext"]/div[4]/div/div[2]/button')

wait = WebDriverWait(driver, 30)

links = wait.until(EC.presence_of_all_elements_located((By.XPATH, '//*[@id="results"]/div[2]/table/tbody/a')))

for link in links:

print(link.get_attribute('href'))

#approach 2 code

driver = setupDriver(download_path)

driver.get('https://sec.report/Document/Search/?queryCo=lvs&page=5')

formEle = getElements.clickHiddenEle(geshort,'//*[@id="formType"]')

#formclick = getElements.clickHiddenEle(geshort,'//*[@id="formType"]/option[97]')

driverwait = WebDriverWait(driver,30)

driverwait.until(EC.element_to_be_clickable((By.XPATH, '//*[@id="formType"]/option[97]'))).click()

# //*[@id="filer1"]

driverwait = WebDriverWait(driver,15)

companyname = driverwait.until(EC.presence_of_element_located((By.ID, "filer1")))

companyname.clear()

companyname.send_keys("TSLA")

searchEle = getElements.clickHiddenEle(geshort,'//*[@id="searchtext"]/div[4]/div/div[2]/button')

href = []

for i in range(0,200):

wait = WebDriverWait(driver, 40)

wait.until(EC.presence_of_all_elements_located((By.XPATH, '//*[@id="results"]/div[2]/table/tbody/tr[6]/td[' str(i) ']/div[1]')))

headings = driver.find_elements_by_xpath('//*[@id="results"]/div[2]/table/tbody/tr[' str(i) ']/td[1]/div[1]')

link = heading.find_element_by_tag_name("a")

x = link.get_attribute("href")

href.append(x)

print(href)

CodePudding user response:

Hoping that OP's next question will contain code, not images, and a minimal reproducible example, here is one solution to his conundrum. As stated in comments, Selenium should be the last resort when web scraping - it is a tool meant for testing, not web scraping. The following solution will extract dates, form names, form descriptions and form urls from the 11 pages worth of data concerning LVS:

from bs4 import BeautifulSoup as bs

import requests

from tqdm import tqdm

import pandas as pd

headers = {'User-Agent': 'Sample Company Name AdminContact@<sample company domain>.com'}

s = requests.Session()

s.headers.update(headers)

big_list = []

for x in tqdm(range(1, 12)):

r = s.get(f'https://sec.report/Document/Search/?queryCo=lvs&page={x}')

soup = bs(r.text, 'html.parser')

data_rows = soup.select('table.table tr')

for row in data_rows:

form_title = row.select('a')[0].get_text(strip=True)

form_url = row.select('a')[0].get('href')

form_desc = row.select('small')[0].get_text(strip=True)

form_date = row.select('td')[-1].get_text(strip=True)

big_list.append((form_date, form_title, form_desc, form_url))

df = pd.DataFrame(big_list, columns = ['Date', 'Title', 'Description', 'Url'])

print(df)

Result:

Date Title Description Url

0 2022-09-14 8-K 8-K 8-K Form 8-K - Period Ending 2022-09-14 https://sec.report/Document/0001300514-22-000101/#lvs-20220914.htm

1 2022-08-29 40-APP/A 40-APP/A 40-APP/A Form 40-APP https://sec.report/Document/0001193125-22-231550/#d351601d40appa.htm

2 2022-07-22 10-Q 10-Q 10-Q Form 10-Q - Period Ending 2022-06-30 https://sec.report/Document/0001300514-22-000094/#lvs-20220630.htm

3 2022-07-20 8-K 8-K 8-K Form 8-K - Period Ending 2022-07-20 https://sec.report/Document/0001300514-22-000088/#lvs-20220720.htm

4 2022-07-11 8-K 8-K 8-K Form 8-K - Period Ending 2022-07-11 https://sec.report/Document/0001300514-22-000084/#lvs-20220711.htm

... ... ... ... ...

1076 2004-11-22 S-1/A S-1/A S-1/A Form S-1 https://sec.report/Document/0001047469-04-034893/#a2143958zs-1a.htm

1077 2004-10-25 S-1/A S-1/A S-1/A Form S-1 https://sec.report/Document/0001047469-04-031910/#a2143958zs-1a.htm

1078 2004-10-20 8-K 8-K 8-K Form 8-K - Period Ending 2004-09-30 https://sec.report/Document/0001047469-04-031637/#a2145253z8-k.htm

1079 2004-10-08 UPLOAD LETTER Form UPLOAD https://sec.report/Document/0000000000-04-032407/#filename1.txt

1080 2004-09-03 S-1 S-1 S-1 Form S-1 https://sec.report/Document/0001047469-04-028031/#a2142433zs-1.htm

1081 rows × 4 columns

You can extract other stuffs, like company name & person name (I imagine that would be the person filing the form).

Docs for BeautifulSoup: https://beautiful-soup-4.readthedocs.io/en/latest/index.html Also, requests documentation: https://requests.readthedocs.io/en/latest/ For TQDM, visit https://pypi.org/project/tqdm/ And for pandas: https://pandas.pydata.org/pandas-docs/stable/index.html