I have a text file that needs to be read line by line and converted into a data frame with the following four columns: ['CustomerID', 'Rating', 'Date', 'Movie ID']

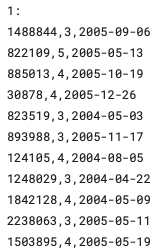

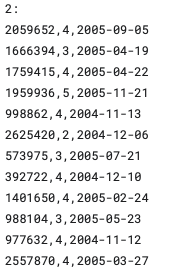

There are 17,770 movie IDs, and each text file has the following format:

Movie ID:

Customer ID, Rating, Date

Customer ID, Rating, Date

. . .

Movie ID:

Customer ID, Rating, Date

Customer ID, Rating, Date

. . .

All the way up to the 17,770th movie ID in ascending order

See images below for snip of text files....

This is the code I have so far:

import re
import pandas as pd

with open('/Users/dorisveronicaavedikian/Desktop/Final Semester Fall 2022/CYBI 6378/archive/combined_data_1.txt',encoding='latin-1') as f:
    for line in f:
        result = re.search(r"^(\d+),(\d+),(\d{4}-\d{2}-\d{2})/gm", line)
        if re.search(r"(^\d+):", line) is not None:
            movie_id = re.search(r"(^\d+):", line).group(1)
        elif result:
            customerid = result.group(1)
            rating = result.group(2)
            date = result.group(3)
        else:
            continue
    data_list = [customerid, rating, date, movie_id]
    df1 = pd.DataFrame(data_list)
df1.to_csv(r'/Users/dorisveronicaavedikian/Desktop/Final Semester Fall 2022/CYBI 6378/archive/combineddata1.csv')

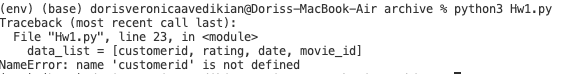

I'm getting the following error:

How do I fix this error?

Thanks in advance!!

CodePudding user response:

Here is one way to do it:

import pandas as pd

# Read the file with read_csv, using ":" as the separator.
# Since there is only one colon per movie, you end up with a row for the movie ID followed by rows for its ratings.
df = pd.read_csv(r'c:\csv.csv', sep=':', header=None, names=['col1', 'col2'])

# A row without a comma holds only a movie ID,
# so we populate the MovieId column from it and forward-fill it down the rating rows.
df['MovieId'] = df['col1'].mask(df['col1'].str.contains(',')).ffill()

# Split the data into CustomerID, Rating and Date.
df[['CustomerID','Rating','Date']] = df['col1'].str.split(',',expand=True)

# Drop the unwanted columns and the movie ID rows.
df2 = df[df['col1'].ne(df['MovieId'])].drop(columns=['col1','col2'])
df2

# sample created from the data you shared above as image

   MovieId CustomerID Rating        Date
 1       1    1488844      3  2005-09-06
 2       1     822109      5  2005-05-13
 3       1     885013      4  2005-10-19
 4       1      30878      4  2005-12-26
 5       1     823519      3  2004-05-03
 6       1     893988      3  2005-11-17
 7       1     124105      4  2004-08-05
 8       1    1248629      3  2004-04-22
 9       1    1842128      4  2004-05-09
10       1    2238063      3  2005-05-11
11       1    1503895      4  2005-05-19
13       2    1288844      3  2005-09-06
14       2     832109      5  2005-05-13
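To try this approach without touching a real file, here is a minimal sketch that feeds a small in-memory sample through the same steps. The sample string, the use of `io.StringIO`, and the `dtype=str` argument are assumptions added for illustration; they are not part of the answer above. It also shows why the index in the output has gaps: the rows that held only a movie ID are dropped at the end.

```python
import io

import pandas as pd

# Hypothetical sample in the same layout as the question's file.
sample = "1:\n1488844,3,2005-09-06\n822109,5,2005-05-13\n2:\n1288844,3,2005-09-06\n"

# dtype=str is an added assumption so that every value stays a string.
df = pd.read_csv(io.StringIO(sample), sep=":", header=None,
                 names=["col1", "col2"], dtype=str)

# Rows without a comma are movie IDs; keep them and forward-fill downwards.
df["MovieId"] = df["col1"].mask(df["col1"].str.contains(",")).ffill()

# Split the rating rows into their three fields.
df[["CustomerID", "Rating", "Date"]] = df["col1"].str.split(",", expand=True)

# Drop the helper columns and the rows that only carried a movie ID.
df2 = df[df["col1"].ne(df["MovieId"])].drop(columns=["col1", "col2"])

print(df2)
print(df2.index.tolist())  # the positions of the dropped movie ID rows are missing
```

If a continuous index is preferred, calling `reset_index(drop=True)` on the result should renumber the rows from zero.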

CodePudding user response:

You can parse that structure quite easily (without regex, using a few lines of very readable vanilla Python) and build a dictionary while reading the data file. You can then convert the dictionary to a DataFrame in one go.

import pandas as pd

df = {'MovieID':[], 'CustomerID':[], 'Rating':[], 'Date':[]}

with open('data.txt', 'r') as f:
    for line in f:
        line = line.strip()
        if line: #skip empty lines
            if line.endswith(':'): #MovieID
                movie_id = line[:-1]
            else:
                customer_id, rating, date = line.split(',')
                df['MovieID'].append(movie_id)
                df['CustomerID'].append(customer_id)
                df['Rating'].append(rating)
                df['Date'].append(date)

df = pd.DataFrame(df)
print(df)

   MovieID CustomerID Rating        Date
 0       1    1488844      3  2005-09-06
 1       1     822109      5  2005-05-13
 2       1     885013      4  2005-10-19
 3       1      30878      4  2005-12-26
 4       2     823519      3  2004-05-03
 5       2     893988      3  2005-11-17
 6       2     124105      4  2004-08-05
 7       2    1248629      3  2004-04-22
 8       2    1842128      4  2004-05-09
 9       3    2238063      3  2005-05-11
10       3    1503895      4  2005-05-19
11       3    1288844      3  2005-09-06
12       3     832109      5  2005-05-13

It hardly gets easier than this.
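One thing to keep in mind with this approach is that every value collected with `line.split(',')` is a string. If numeric ratings or real dates are needed later, a conversion step along these lines might help. This is only a sketch: the small frame below is a hypothetical stand-in for the parsed result, and the target dtypes are an assumption about what is wanted.

```python
import pandas as pd

# Hypothetical stand-in for the frame built by the parsing loop (all strings).
df = pd.DataFrame({
    "MovieID": ["1", "1", "2"],
    "CustomerID": ["1488844", "822109", "823519"],
    "Rating": ["3", "5", "3"],
    "Date": ["2005-09-06", "2005-05-13", "2004-05-03"],
})

# Convert the ID and rating columns to integers.
df = df.astype({"MovieID": "int64", "CustomerID": "int64", "Rating": "int64"})

# Parse the date strings; the format matches the YYYY-MM-DD layout in the file.
df["Date"] = pd.to_datetime(df["Date"], format="%Y-%m-%d")

print(df.dtypes)
```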

CodePudding user response:

An error in a regular expression

You get the NameError because of the /gm at the end of the regular expression used to compute result.

I suppose that /gm was copied here by mistake. In other languages it could stand for the GLOBAL and MULTILINE match modifiers, which, by the way, are not needed in this case. But in the Python re module they are just three literal characters. Since no line contains /gm, your result was always None, so the elif result: ... block was never executed and the variables customerid, rating and date were never initialized.
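The effect can be checked on a single line. The following is a small sketch with a hypothetical sample line; it also shows how a multiline flag would be passed in Python if it were ever needed, which it is not when reading the file line by line.

```python
import re

# Hypothetical line in the same layout as the rating rows of the data file.
line = "1488844,3,2005-09-06\n"

# With the trailing "/gm", the pattern requires the literal text "/gm" after the date.
with_suffix = re.search(r"^(\d+),(\d+),(\d{4}-\d{2}-\d{2})/gm", line)

# Without it, the same line matches and the three groups are captured.
without_suffix = re.search(r"^(\d+),(\d+),(\d{4}-\d{2}-\d{2})", line)

print(with_suffix)              # None
print(without_suffix.groups())  # ('1488844', '3', '2005-09-06')

# In Python, modifiers are passed as flags rather than written inside the pattern.
text = "1:\n1488844,3,2005-09-06\n822109,5,2005-05-13\n"
rows = re.findall(r"^(\d+),(\d+),(\d{4}-\d{2}-\d{2})$", text, flags=re.MULTILINE)
print(rows)
```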

An error in working with variables

If you remove /gm from the first pattern, you'll run into another problem: the variables customerid, rating, date and movie_id are plain strings that are overwritten on every iteration, so the resulting data frame will reflect only the last record of the source file.
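A quick way to see this is to build the frame from four scalar strings, as the original code does. The values below are hypothetical placeholders for whatever the last matched line contained.

```python
import pandas as pd

# Hypothetical values standing in for the last record read from the file.
customerid, rating, date, movie_id = "832109", "5", "2005-05-13", "3"

# A flat list of four strings becomes four rows in a single unnamed column.
data_list = [customerid, rating, date, movie_id]
df1 = pd.DataFrame(data_list)
print(df1.shape)

# Wrapping the list once more gives a single row with four columns instead,
# but it is still only one record.
df_row = pd.DataFrame([data_list],
                      columns=["customerid", "rating", "date", "movie_id"])
print(df_row.shape)
```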

To avoid this, we have to collect the values in a list-like structure. For example, in the code below they are keys in the data dictionary, each referring to a separate list:

file_name = ...
data = {'movie_id': [], 'customerid': [], 'rating': [], 'date': []}

with open(file_name, encoding='latin-1') as f:
    for line in f:
        result = re.search(r"^(\d+),(\d+),(\d{4}-\d{2}-\d{2})", line)
        if re.search(r"(^\d+):", line) is not None:
            movie_id = re.search(r"(^\d+):", line).group(1)
        elif result:
            data['movie_id'].append(movie_id)
            data['customerid'].append(result.group(1))
            data['rating'].append(result.group(2))
            data['date'].append(result.group(3))
        else:
            continue

df = pd.DataFrame(data)

Code with test data

import re
import pandas as pd

data = '''\
1:
1488844,3,2005-09-06
822109,5,2005-05-13
885013,4,2005-10-19
30878,4,2005-12-26
2:
823519,3,2004-05-03
893988,3,2005-11-17
124105,4,2004-08-05
1248629,3,2004-04-22
1842128,4,2004-05-09
3:
2238063,3,2005-05-11
1503895,4,2005-05-19
1288844,3,2005-09-06
832109,5,2005-05-13
'''

file_name = "data.txt"
with open(file_name, 'tw', encoding='latin-1') as f:
    f.write(data)

data = {'movie_id': [], 'customerid': [], 'rating': [], 'date': []}

with open(file_name, encoding='latin-1') as f:
    for line in f:
        result = re.search(r"^(\d+),(\d+),(\d{4}-\d{2}-\d{2})", line)
        if re.search(r"(^\d+):", line) is not None:
            movie_id = re.search(r"(^\d+):", line).group(1)
        elif result:
            data['movie_id'].append(movie_id)
            data['customerid'].append(result.group(1))
            data['rating'].append(result.group(2))
            data['date'].append(result.group(3))
        else:
            continue

df = pd.DataFrame(data)
df.to_csv(file_name[:-3] + 'csv', index=False)

An alternative

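# Read every line as a three-field row; a movie ID line such as "1:" lands in customerid.
df = pd.read_csv(file_name, names = ['customerid', 'rating', 'date'])
df.insert(0, 'movie_id', pd.NA)

# Keep only the values ending with ':', forward-fill them and strip the colon.
isnot_movie_id = ~df['customerid'].str.endswith(':')
df['movie_id'] = df['customerid'].mask(isnot_movie_id).ffill().str[:-1]

# The movie ID rows have no rating or date, so dropna removes them.
df = df.dropna().reset_index(drop=True)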