How to convert the following json object:

{"IDpol": [406.0, 407.0, 412.0], "ClaimNb": [1, 1, 1], "Exposure": [0.77, 0.07, 0.84], "Area": ["C", "C", "E"], "VehPower": [9, 9, 7], "VehAge": [0, 0, 3], "DrivAge": [29, 29, 40], "BonusMalus": [72, 72, 54], "VehBrand": ["B12", "B12", "B12"], "VehGas": ["Regular", "Regular", "Regular"], "Density": [360, 360, 5746], "Region": ["R91", "R91", "R11"]}

In the below given csv using azure data factory

IDpol,ClaimNb,Exposure,Area,VehPower,VehAge,DrivAge,BonusMalus,VehBrand,VehGas,Density,Region

406.0 , 1 , 0.77 , C , 9 , 0 , 29 , 72 , B12 ,Regular,360 , R91

407.0 , 1 , 0.07 , C , 9 , 0 , 29 , 72 , B12 ,Regular,360 ,R91

412.0 , 1 , 0.84 , E , 7 , 3 , 40 , 54 , B12 , Regular,5746, R11

CodePudding user response:

I reproduced this and able to get the desired result as follows.

For this first use dataflow to get all arrays as one array of JSON. Store this result as JSON and then use copy activity to get the csv file of individual columns.

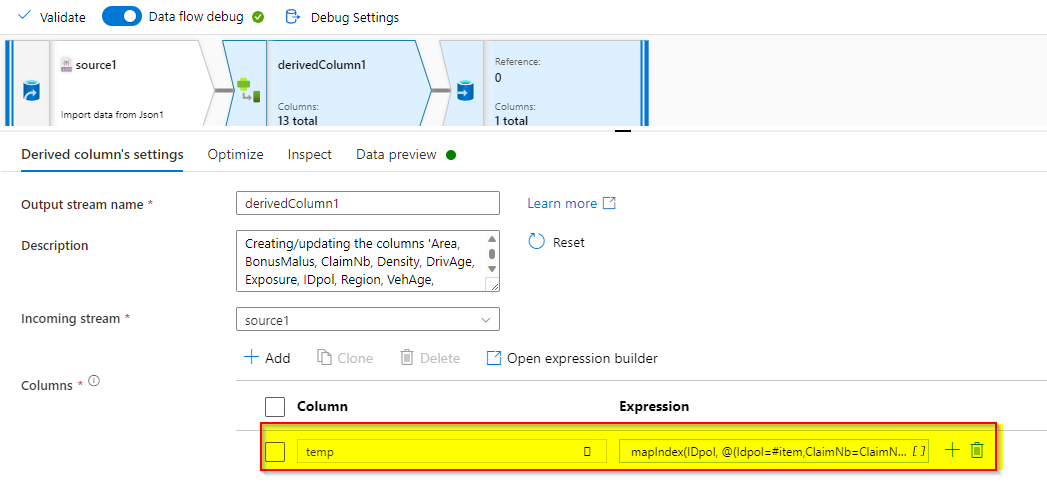

Use Derived column transformation after source.

create another temporary column and give the below dynamic expression.

mapIndex(IDpol, @(Idpol=#item,ClaimNb=ClaimNb[#index],Area=Area[#index],BonusMalus=BonusMalus[#index],DrivAge=DrivAge[#index],Exposure=Exposure[#index],Region=Region[#index],VehAge=VehAge[#index],VehBrand=VehBrand[#index],VehPower=VehPower[#index],VehGas=VehGas[#index],Density=Density[#index]))

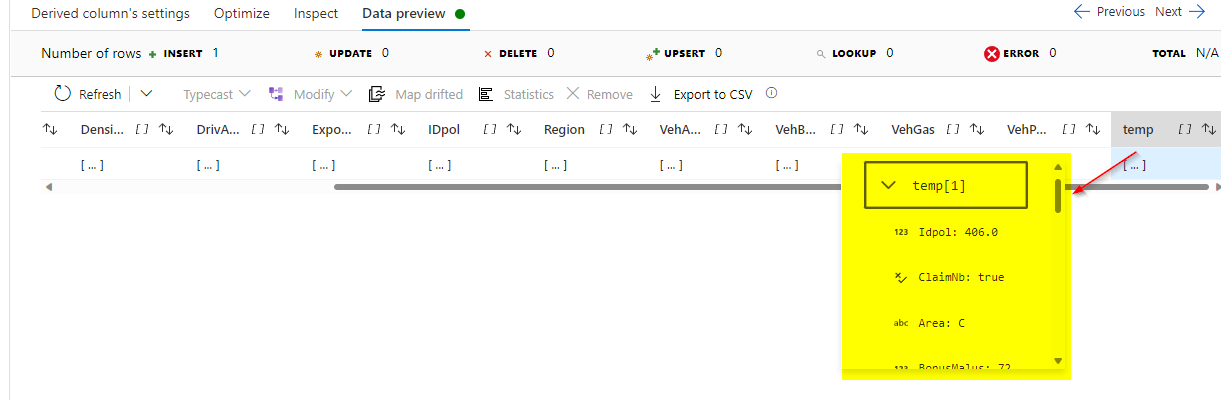

As length of all arrays are same, the above will give the result as follows.

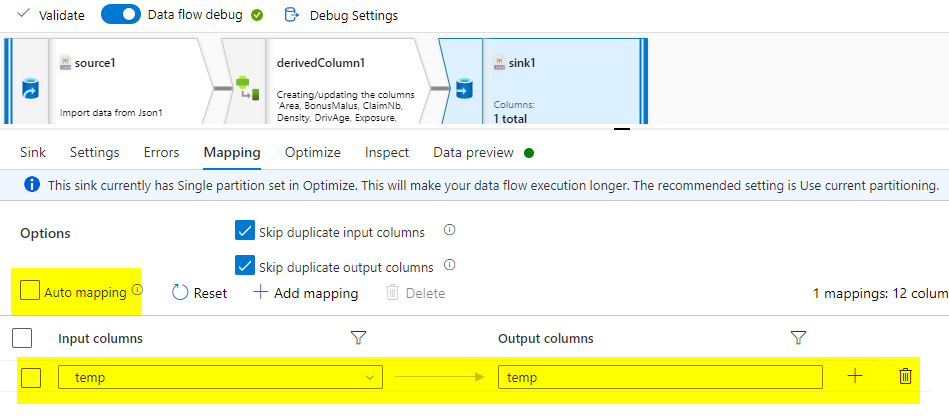

Store this in JSON file. In sink settings give output to single file and specify the file name.

You can remove the extra columns in sink mapping as we only want temp column.

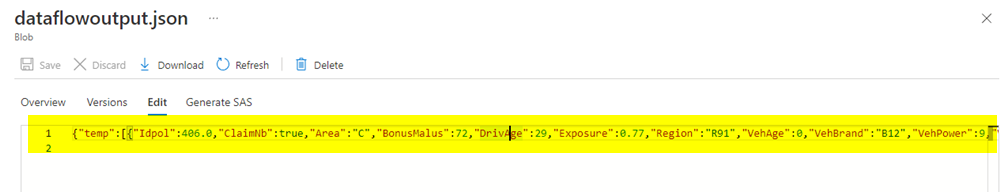

You can see the dataflow output JSON after Executing this dataflow in pipeline.

Now, use copy activity with the above JSON as source file and CSV as sink.

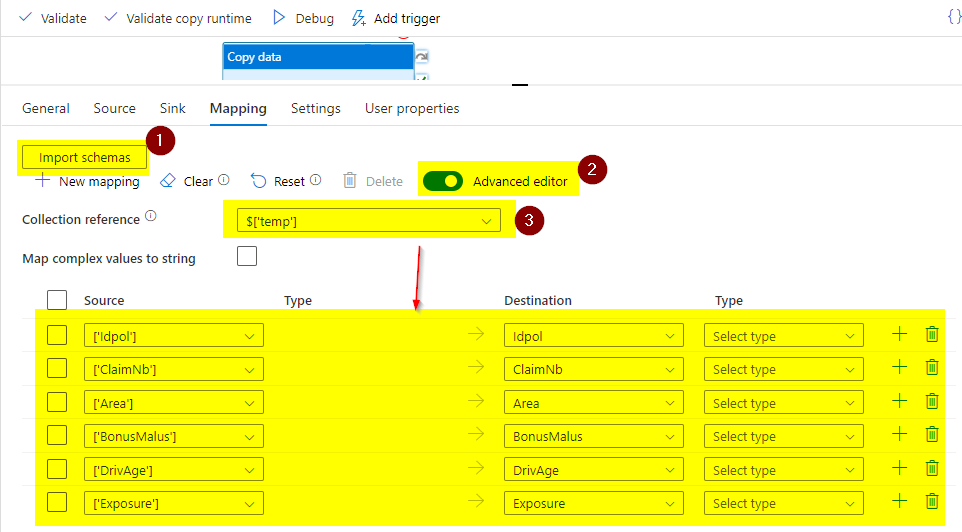

Go to Mapping and click on import mapping. Use Advanced editor and Collection reference to do the correct mapping.

You can mention the type also if you want.

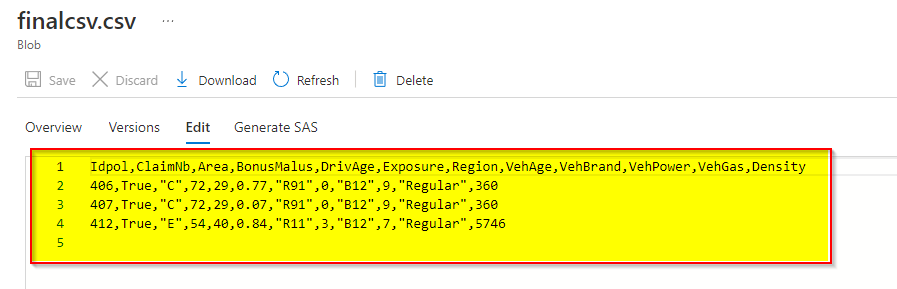

Execute this copy activity and you can get the result CSV like below.