I have a SQL query that I am trying to convert into pyspark the query looks like this

UPDATE EMPLOYEE_TABLE SET A_CONVERSION_TEMPLATE.[STATUS] = "OTHER/MENTIONED" WHERE (((EMPLOYEE_TABLE.[HOLIDAY_TAKEN]) Is Null));

Second Query:

UPDATE EMPLOYEE_TABLE SET EMPLOYEE_TABLE.[STATUS] = "OTHER/MENTIONED" WHERE (((EMPLOYEE_TABLE.STATUS) Is Null));

Third Query with two conditions

UPDATE EMPLOYEE_TABLE SET EMPLOYEE_TABLE.[LANGUAGE] = "ENGLISH",

WHERE (((EMPLOYEE_TABLE.LANGUAGE[])<>"ENGLISH") AND ((EMPLOYEE_TABLE.FLAGYESNO)="Y"));

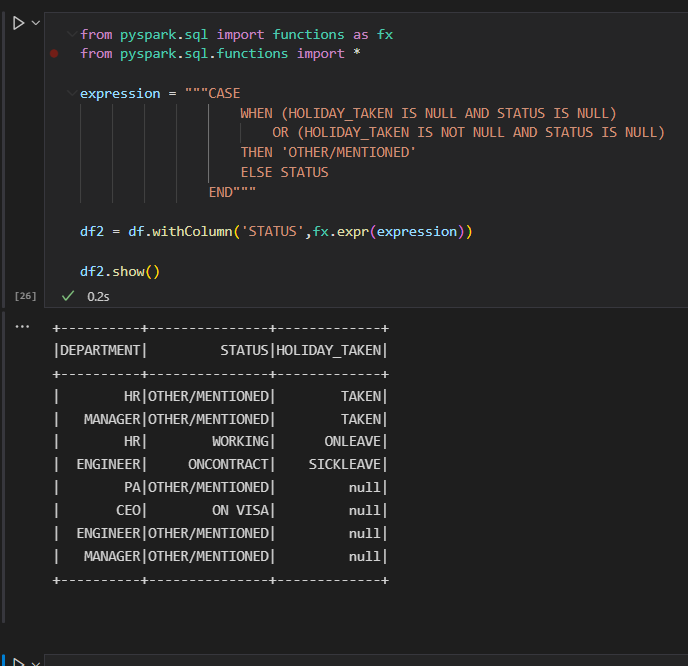

The Pyspark queries I am trying are I have combined the first and second queries because in both we have to update the same column. The query I am using is

df=df.withColumn('STATUS', F.when( (col('STATUS')==''),'OTHER/MENTIONED').

otherwise(when( (col('HOLIDAY_TAKEN]')==''), 'OTHER/MENTIONED').

otherwise('NULL')))

When I am running my df it is updating everything to null in my 'STATUS' Column even the values which were already present it is changing it to 'NULL'

For the third query, I am getting no idea.

Please let me know if need other information.

Attaching the Output for more understanding